Collecting events from Microsoft Azure Event Hub

This option is available from version v22.45 onwards.

TeskaLabs LogMan.io Collector can collect events from Microsoft Azure Event Hub through a native client or Kafka. The events stored in Azure Event Hub may contain any data encoded in bytes, such as logs about various user, admin, system, device, and policy actions.

Microsoft Azure Event Hub Setting

The following credentials need to be obtained for LogMan.io Collector to read the events: connection string, event hub name and consumer group.

Obtain connection string from Microsoft Azure Event Hub

1) Sign in to the Azure portal with admin privileges to the respective Azure Event Hubs Namespace.

The Azure Event Hubs Namespace is available in the Resources section.

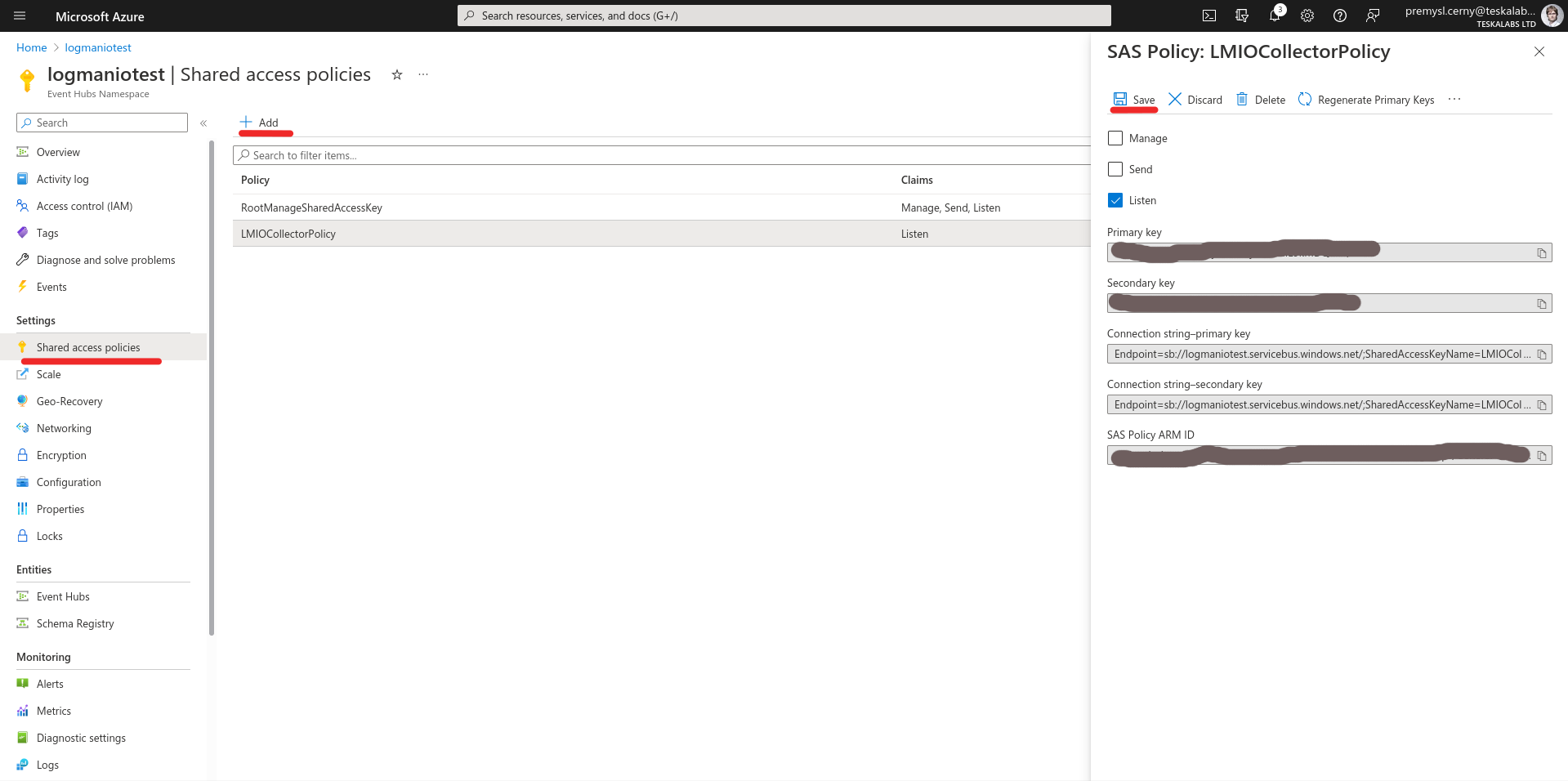

2) In the selected Azure Event Hubs Namespace, click on Shared access policies in the Settings section in the left menu.

Click the Add button and enter the name of the policy (the recommended name is: LogMan.io Collector). A popup window with the policy details should appear on the right.

3) In the popup window, select the Listen option to allow the policy to read from event hubs associated with the given namespace.

See the following picture.

4) Copy the Connection string-primary key and click on Save.

The policy should be visible in the table in the middle of the screen.

The connection string starts with Endpoint=sb:// prefix.
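Before pasting the copied value into the collector configuration, it can be checked locally. The following is a minimal sketch, not part of LogMan.io Collector: it assumes the usual semicolon-separated `key=value` layout of Event Hubs connection strings, and the function name `parse_connection_string` as well as the example namespace, policy name and key are hypothetical placeholders.

```python
def parse_connection_string(conn_str: str) -> dict:
    """Split a connection string into its key=value parts (illustrative helper)."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: base64 keys may end with "=" padding.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"Malformed segment: {segment!r}")
        parts[key] = value
    # The guide above states that the string starts with Endpoint=sb://
    if not parts.get("Endpoint", "").startswith("sb://"):
        raise ValueError("Endpoint is missing or does not use the sb:// scheme")
    return parts


# Hypothetical placeholder values, not real credentials.
example = (
    "Endpoint=sb://example-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=ExamplePolicy;"
    "SharedAccessKey=AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA="
)
parsed = parse_connection_string(example)
print(parsed["Endpoint"])
```

A `ValueError` here usually means that the value was truncated while copying or that a different field was copied from the policy details.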

Obtain consumer group

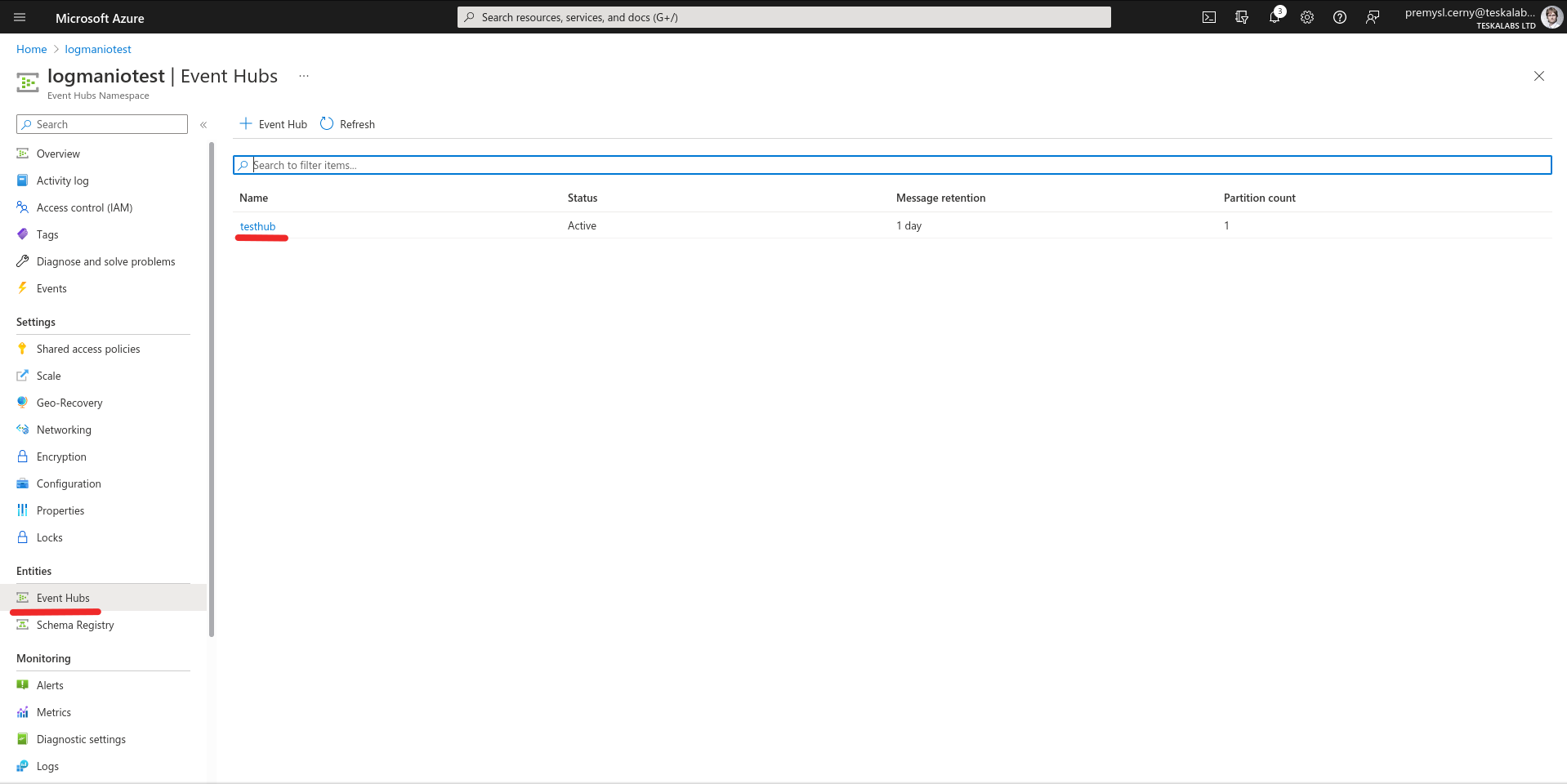

5) In the Azure Event Hubs Namespace, select Event Hubs option from the left menu.

6) Click on the event hub that contains events to be collected.

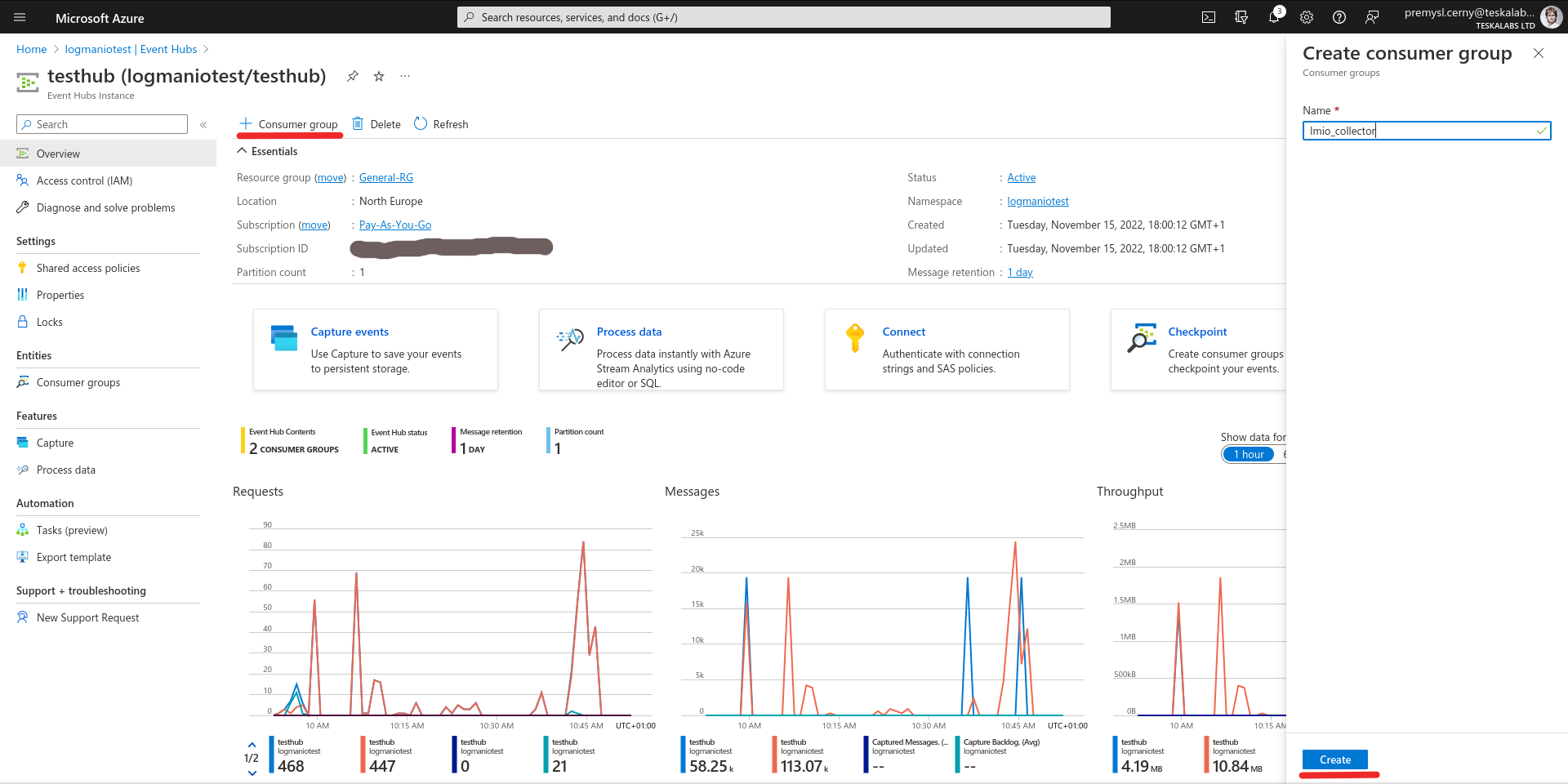

7) When in the event hub, click on the + Consumer group button in the middle of the screen.

8) In the popup window on the right, enter the name of the consumer group (the recommended value is lmio_collector) and click the Create button.

9) Repeat this procedure for all event hubs meant to be consumed.

10) Write down the consumer group name and the names of all event hubs; they are needed later for the LogMan.io Collector configuration.

LogMan.io Collector Input setup

Azure Event Hub Input

The input named input:AzureEventHub: needs to be provided in the LogMan.io Collector YAML configuration:

input:AzureEventHub:AzureEventHub:
  connection_string: <CONNECTION_STRING>
  eventhub_name: <EVENT_HUB_NAME>
  consumer_group: <CONSUMER_GROUP>
  output: <OUTPUT>

<CONNECTION_STRING>, <EVENT_HUB_NAME> and <CONSUMER_GROUP> are obtained by following the guide above.

The following meta options are available for the parser: azure_event_hub_offset, azure_event_hub_sequence_number, azure_event_hub_enqueued_time, azure_event_hub_partition_id, azure_event_hub_consumer_group and azure_event_hub_eventhub_name.

The output is events as a byte stream, similar to Kafka input.

Azure Monitor Through Event Hub Input

The Azure Monitor Through Event Hub Input loads events from Azure Event Hub, parses each event as an Azure Monitor JSON log, and breaks it into individual records. Each record is then sent as a separate log line to the defined output.
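The splitting step can be pictured with a short sketch. It is an illustration only, not the collector's actual implementation: it assumes that each event body is a JSON object with a top-level `records` array, which is how Azure Monitor typically wraps exported logs, and the function name `split_azure_monitor_records` and the sample body are hypothetical.

```python
import json


def split_azure_monitor_records(payload: bytes, encoding: str = "utf-8") -> list:
    """Return one serialized JSON line (as bytes) per record in the envelope."""
    document = json.loads(payload.decode(encoding))
    # Assumption: records live under the top-level "records" key.
    records = document.get("records", [])
    return [json.dumps(record).encode(encoding) for record in records]


# Hypothetical event body carrying two records.
body = (
    b'{"records": ['
    b'{"category": "SignInLogs", "level": "4"}, '
    b'{"category": "AuditLogs", "level": "4"}'
    b']}'
)
for line in split_azure_monitor_records(body):
    print(line)
```

The `encoding` argument mirrors the `encoding` option of the input, which defaults to utf-8.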

The input named input:AzureMonitorEventHub: needs to be provided in the LogMan.io Collector YAML configuration:

input:AzureMonitorEventHub:AzureMonitorEventHub:
  connection_string: <CONNECTION_STRING>
  eventhub_name: <EVENT_HUB_NAME>
  consumer_group: <CONSUMER_GROUP>
  encoding: # default: utf-8
  output: <OUTPUT>

<CONNECTION_STRING>, <EVENT_HUB_NAME> and <CONSUMER_GROUP> are obtained by following the guide above.

The following meta options are available for the parser: azure_event_hub_offset, azure_event_hub_sequence_number, azure_event_hub_enqueued_time, azure_event_hub_partition_id, azure_event_hub_consumer_group and azure_event_hub_eventhub_name.

The output is events as a byte stream, similar to Kafka input.

Alternative: Kafka Input

Azure Event Hub also provides a Kafka interface (not available in the Basic tier), so the standard LogMan.io Collector Kafka input can be used.

Kafka supports multiple authentication options, including OAuth.

However, for the purposes of this documentation, and to reuse the connection string obtained in the guide above, SASL PLAIN authentication with that connection string is preferred.

input:Kafka:KafkaInput:
  bootstrap_servers: <NAMESPACE>.servicebus.windows.net:9093
  topic: <EVENT_HUB_NAME>
  group_id: <CONSUMER_GROUP>
  security.protocol: SASL_SSL
  sasl.mechanisms: PLAIN
  sasl.username: "$ConnectionString"
  sasl.password: <CONNECTION_STRING>
  output: <OUTPUT>

<CONNECTION_STRING>, <EVENT_HUB_NAME> and <CONSUMER_GROUP> are obtained by following the guide above. <NAMESPACE> is the name of the Azure Event Hubs Namespace resource (also mentioned in the guide above).
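Since the namespace host is already contained in the connection string, the Kafka values can be derived from it rather than typed by hand. The sketch below is a hedged illustration that assumes the `Endpoint=sb://<NAMESPACE>.servicebus.windows.net/` layout; the helper name `kafka_settings_from_connection_string` and the sample values are hypothetical and not part of LogMan.io Collector.

```python
from urllib.parse import urlparse


def kafka_settings_from_connection_string(conn_str, eventhub_name, consumer_group):
    """Build the Kafka input options shown above from a connection string."""
    # Locate the Endpoint=... segment of the connection string.
    endpoint = next(
        (seg.partition("=")[2] for seg in conn_str.split(";")
         if seg.startswith("Endpoint=")),
        None,
    )
    if endpoint is None:
        raise ValueError("No Endpoint segment found in the connection string")
    host = urlparse(endpoint).hostname  # <NAMESPACE>.servicebus.windows.net
    if not host:
        raise ValueError("Endpoint does not contain a host name")
    return {
        "bootstrap_servers": f"{host}:9093",
        "topic": eventhub_name,
        "group_id": consumer_group,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a variable
        "sasl.password": conn_str,
    }


# Hypothetical placeholder values, not real credentials.
conn = (
    "Endpoint=sb://example-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=ExamplePolicy;"
    "SharedAccessKey=AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA="
)
settings = kafka_settings_from_connection_string(conn, "example-hub", "lmio_collector")
for key, value in settings.items():
    print(f"{key}: {value}")
```

Note that `sasl.username` is the literal text `$ConnectionString`, while the whole connection string is passed as the password.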

The following meta options are available for the parser: kafka_key, kafka_headers, _kafka_topic, _kafka_partition and _kafka_offset.

The output is events as a byte stream.