ASAB Maestro Model¶

The model is one or more YAML files that describe the desired layout of the cluster. It is the main point of interaction between the user and ASAB Maestro, and it is the place to customize a LogMan.io installation.

Model file(s)¶

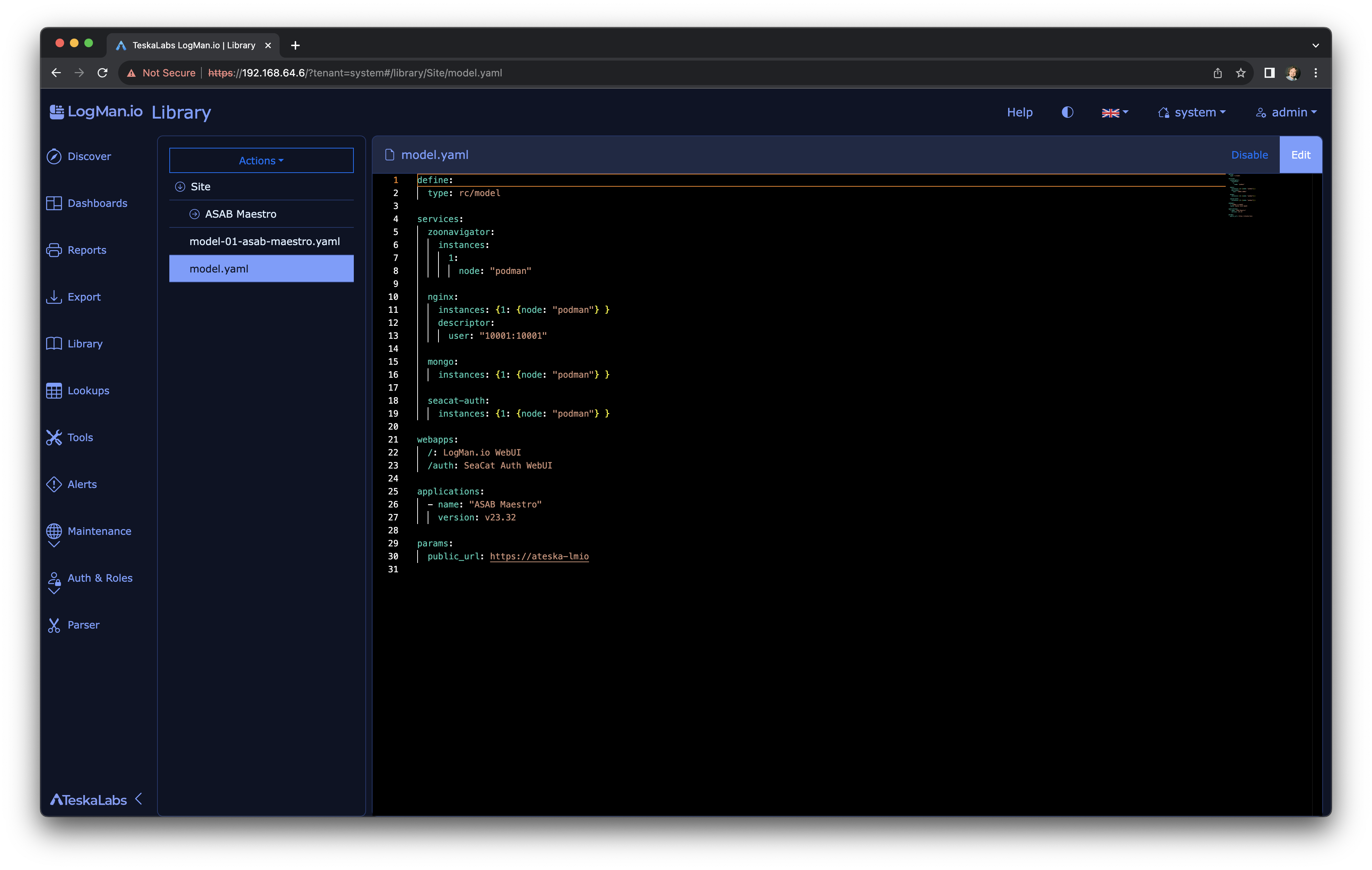

Model files are stored in the Library in the /Site/ folder.

The user-level model file is /Site/model.yaml.

The administrator edits this file manually in the Library.

Multiple model files can exist. In particular, managed parts of the model are kept in separate files. All model files are merged into a single model data structure when the model is applied.

Warning

Don't edit model files labeled as automatically generated.

Structure of the model¶

Example of the /Site/model.yaml:

    define:
      type: rc/model

    services:
      nginx:
        instances:
          1: {node: "node1"}
          2: {node: "node2"}
          3: {node: "node3"}

      mongo:
        - {node: "node1"}
        - {node: "node2"}
        - {node: "node3"}

      myservice:
        instances:
          id1: {node: "node1"}

    webapps:
      /: My Web application
      /auth: SeaCat Auth WebUI
      /influxdb: InfluxDB UI

    applications:
      - name: "ASAB Maestro"
        version: v23.32
      - name: "My Application"
        version: v1.0

    params:
      PUBLIC_URL: https://ateska-lmio

Section define¶

    define:
      type: rc/model

It specifies the type of this YAML file by stating type: rc/model.

Section services¶

This section lists all services in the cluster. In the example above, services are "nginx", "mongo" and "myservice".

Each service name must correspond to a descriptor of the same name in the Library.

The services section is prescribed as follows:

    services:
      <service_id>:
        instances:
          <instance_no>:
            node: <node_id>
            <instance-level overrides>
          ...
        <service-level overrides>

Add new instance¶

The instances section of the service entry in services must specify on which node to run each instance.

This is a canonical, fully expanded form:

    myservice:
      instances:
        1:
          node: node1
        2:
          node: node2
        3:
          node: node2

Service myservice is scheduled to run in three instances: instance 1 on node node1, and instances 2 and 3 on node node2.

The following shorter forms are equivalent to the canonical form above:

    myservice:
      instances: {1: node1, 2: node2, 3: node2}

    myservice:
      instances: ["node1", "node2", "node2"]

The shortest form defines only one instance (number 1) of the service myservice, scheduled to run on the node node1:

    myservice:
      instances: node1
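The relationship between the short forms and the canonical form can be sketched in Python. This is only an illustration of the rules described above, not the code ASAB Maestro uses; the function names `expand_instances` and `_entry` are hypothetical, and the model is assumed to be already parsed from YAML into plain Python values.

```python
def expand_instances(spec):
    """Expand a shorthand `instances` value into {instance_no: {"node": node_id}}."""
    if isinstance(spec, str):
        # A single node name: one instance, numbered 1.
        return {1: {"node": spec}}
    if isinstance(spec, list):
        # A list of nodes: instances are numbered from 1 in list order.
        return {no: _entry(item) for no, item in enumerate(spec, start=1)}
    if isinstance(spec, dict):
        # A mapping: keys are instance numbers, values are node names or full entries.
        return {no: _entry(item) for no, item in spec.items()}
    raise TypeError(f"Unsupported instances value: {spec!r}")


def _entry(item):
    # A bare node name becomes {"node": name}; a full entry is copied unchanged.
    return {"node": item} if isinstance(item, str) else dict(item)


canonical = {1: {"node": "node1"}, 2: {"node": "node2"}, 3: {"node": "node2"}}

# Both short forms expand to the same canonical structure.
assert expand_instances({1: "node1", 2: "node2", 3: "node2"}) == canonical
assert expand_instances(["node1", "node2", "node2"]) == canonical
```

With this sketch, `expand_instances("node1")` yields a single instance numbered 1 on node1.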

Removed instances¶

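Some services need their instance numbers to stay fixed for the whole lifecycle of the cluster, even after some instances are removed. In that case, leave a gap in the numbering instead of renumbering the remaining instances.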

Renaming and moving instances

When you rename an instance or move it from one node to another, keep in mind that there is no link between the "old" and the "new" instance: one instance is deleted and another one is created. If you move an instance of a service to a different node, the data stored on the original node and managed or used by the service are not moved.

ZooKeeper

ZooKeeper uses the instance number of the ZooKeeper service to identify the instance within the cluster. Changing the instance number therefore means removing one ZooKeeper node from the cluster and adding a new one.

In the following example, instance number 2 has been removed:

    myservice:
      instances:
        1: {node: "node1"}
        # There used to be another instance here but it is removed now
        3: {node: "node2"}

In the reduced (list) form, null has to be used in place of the removed instance:

    myservice: ["node1", null, "node2"]
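The effect of the null placeholder can be sketched as follows. This is an assumption-laden illustration rather than the actual ASAB Maestro logic: the helper name `expand_node_list` is hypothetical, and YAML null is assumed to arrive in Python as `None`.

```python
def expand_node_list(nodes):
    """Number list entries from 1 and skip the None placeholders."""
    return {
        no: {"node": node}
        for no, node in enumerate(nodes, start=1)
        if node is not None  # None (YAML null) marks a removed instance
    }


instances = expand_node_list(["node1", None, "node2"])

# Instance 2 is gone, and the last instance keeps its number 3.
assert instances == {1: {"node": "node1"}, 3: {"node": "node2"}}
```

Without the placeholder, the list `["node1", "node2"]` would number the second entry as instance 2, which is exactly the renumbering that the placeholder avoids.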

Overriding the descriptor values¶

To override values from the descriptor, enter them at the places marked <instance-level overrides> or <service-level overrides>, respectively.

In the following example, the number of CPUs is set to 2 for Docker Compose and the asab section from the descriptor of asab-governator is overridden, both on the instance level:

    services:
      ...
      asab-governator:
        instances:
          1:
            node: node1
            descriptor:
              cpus: 2
            asab:
              config:
                remote_control:
                  url:
                    - http://nodeX:8891/rc

The same override, but on the service level:

    services:
      ...
      asab-governator:
        instances: [node1, node2]
        descriptor:
          cpus: 2
        asab:
          config:
            remote_control:
              url:
                - http://nodeX:8891/rc
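One way to picture how overrides combine with the descriptor is a recursive dictionary merge. The sketch below rests on assumptions that this page does not confirm: that instance-level overrides take precedence over service-level ones, and that lists and scalars are replaced rather than combined. The function names and the sample descriptor content (including the image name) are hypothetical.

```python
import copy


def deep_merge(base, override):
    """Return a new dict with `override` laid over `base`, recursing into nested dicts."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            # Assumption: scalars and lists replace the base value.
            result[key] = copy.deepcopy(value)
    return result


def effective_settings(descriptor, service_overrides, instance_overrides):
    # Assumed precedence: descriptor < service level < instance level.
    return deep_merge(deep_merge(descriptor, service_overrides), instance_overrides)


# Hypothetical descriptor content, for illustration only.
descriptor = {
    "descriptor": {"image": "example/asab-governator", "cpus": 1},
    "asab": {"config": {"remote_control": {"url": ["http://node1:8891/rc"]}}},
}
service_overrides = {"descriptor": {"cpus": 2}}
instance_overrides = {
    "asab": {"config": {"remote_control": {"url": ["http://nodeX:8891/rc"]}}}
}

merged = effective_settings(descriptor, service_overrides, instance_overrides)
assert merged["descriptor"] == {"image": "example/asab-governator", "cpus": 2}
assert merged["asab"]["config"]["remote_control"]["url"] == ["http://nodeX:8891/rc"]
```

Values that are not overridden, such as the image in this sample, are carried over from the descriptor unchanged.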

Overriding versions¶

To override the version that is set by default in the version file, use the "version" keyword in the model.

In the example below, the version of instance asab-governator-1 is set to v24.36. The version from the version file is ignored.

    services:
      ...
      asab-governator:
        instances:
          - node1
        version: v24.36
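The lookup rule described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the model and the version file are already parsed into dictionaries; the function name `resolve_version` is hypothetical and is not part of ASAB Maestro.

```python
def resolve_version(service_id, service_entry, version_file):
    """Pick the version for a service: the model override first, the version file otherwise."""
    if "version" in service_entry:
        return service_entry["version"]
    return version_file["versions"][service_id]


version_file = {"versions": {"asab-governator": "stable", "asab-library": "v23.15"}}

# The model sets "version", so the entry in the version file is ignored.
overridden = resolve_version(
    "asab-governator", {"instances": ["node1"], "version": "v24.36"}, version_file
)
assert overridden == "v24.36"

# Without an override, the version file decides.
default = resolve_version("asab-library", {"instances": ["node1"]}, version_file)
assert default == "v23.15"
```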

Section webapps¶

The webapps section describes what web applications to install into the cluster.

See the NGINX chapter for more details.

Section applications¶

The applications section lists the applications from the Library to be included.

    applications:
      - name: <application name>
        version: <application version>
      ...

Each application lives in the Library in the /Site/<application name>/ folder.

Its version is specified in the version file at /Site/<application name>/Versions/<application version>.yaml.

Multiple applications can be deployed together in the same cluster by adding one entry per application to the applications section of the model.
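As a small illustration of the path convention above, the following Python sketch builds the version file path for every entry in the applications section. The function names are hypothetical, and the model is assumed to be already parsed into a dictionary.

```python
import posixpath


def version_file_path(name, version):
    """Build /Site/<application name>/Versions/<application version>.yaml."""
    return posixpath.join("/Site", name, "Versions", version + ".yaml")


def version_files(model):
    """Return one version file path per entry in the applications section."""
    return [
        version_file_path(app["name"], app["version"])
        for app in model.get("applications", [])
    ]


model = {
    "applications": [
        {"name": "ASAB Maestro", "version": "v23.32"},
        {"name": "My Application", "version": "v1.0"},
    ]
}

assert version_files(model) == [
    "/Site/ASAB Maestro/Versions/v23.32.yaml",
    "/Site/My Application/Versions/v1.0.yaml",
]
```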

Version file¶

Example of the version file /Site/ASAB Maestro/Versions/v23.32.yaml:

    define:
      type: rc/version
      product: ASAB Maestro
      version: v23.32

    versions:
      zookeeper: '3.9'
      nginx: '1.25.2'
      mongo: '7.0.1'
      asab-remote-control: latest
      asab-governator: stable
      asab-library: v23.15
      asab-config: v23.31
      seacat-auth: v23.37-beta
      asab-iris: v23.31

Section params¶

This section contains key/value cluster-level (global) parametrization of the site.

Model-level extensions¶

Some technologies allow the model to specify extensions to their configuration.

Example of the NGINX model-level extension:

    define:
      type: rc/model

    ...

    nginx:
      https:
        location /:
          - gzip_static on
          - alias /webroot/lmio-webui/dist

Multiple model files¶

Besides the user-level model file (/Site/model.yaml), the /Site/ folder can also contain generated model files named according to the pattern /Site/model-*.yaml.

Model files are merged into one big model just before processing by ASAB Remote Control.
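The selection and merging of model files might look roughly like the Python sketch below. It is an illustration only: the merge order and the conflict rules are not described on this page, so the sketch simply assumes that generated files are merged in alphabetical order and that the user-level file is applied last. The function names and the file names model-nginx.yaml and model-mongo.yaml are hypothetical.

```python
import fnmatch


def model_file_names(site_files):
    """Select model files from the names found in the /Site/ folder."""
    generated = sorted(
        name for name in site_files if fnmatch.fnmatchcase(name, "model-*.yaml")
    )
    user_level = [name for name in site_files if name == "model.yaml"]
    # Assumed order: generated files first, the user-level file last.
    return generated + user_level


def merge(base, extra):
    """Recursively merge two parsed models; on conflict, `extra` wins (an assumption)."""
    result = dict(base)
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = value
    return result


def merge_models(models):
    merged = {}
    for model in models:
        merged = merge(merged, model)
    return merged


names = model_file_names(["model.yaml", "model-nginx.yaml", "notes.txt", "model-mongo.yaml"])
assert names == ["model-mongo.yaml", "model-nginx.yaml", "model.yaml"]

combined = merge_models([
    {"services": {"mongo": {"instances": ["node1"]}}},
    {"services": {"nginx": {"instances": ["node1"]}}, "params": {"PUBLIC_URL": "https://ateska-lmio"}},
])
assert sorted(combined["services"]) == ["mongo", "nginx"]
```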