Table of Contents

Introduction

TeskaLabs LogMan.io documentation¶

Welcome to TeskaLabs LogMan.io documentation.

TeskaLabs LogMan.io¶

TeskaLabs LogMan.io™️ is a cybersecurity software product for log collection, log aggregation, log storage and retention, real-time log analysis and prompt incident response for an IT infrastructure, collectively known as log management.

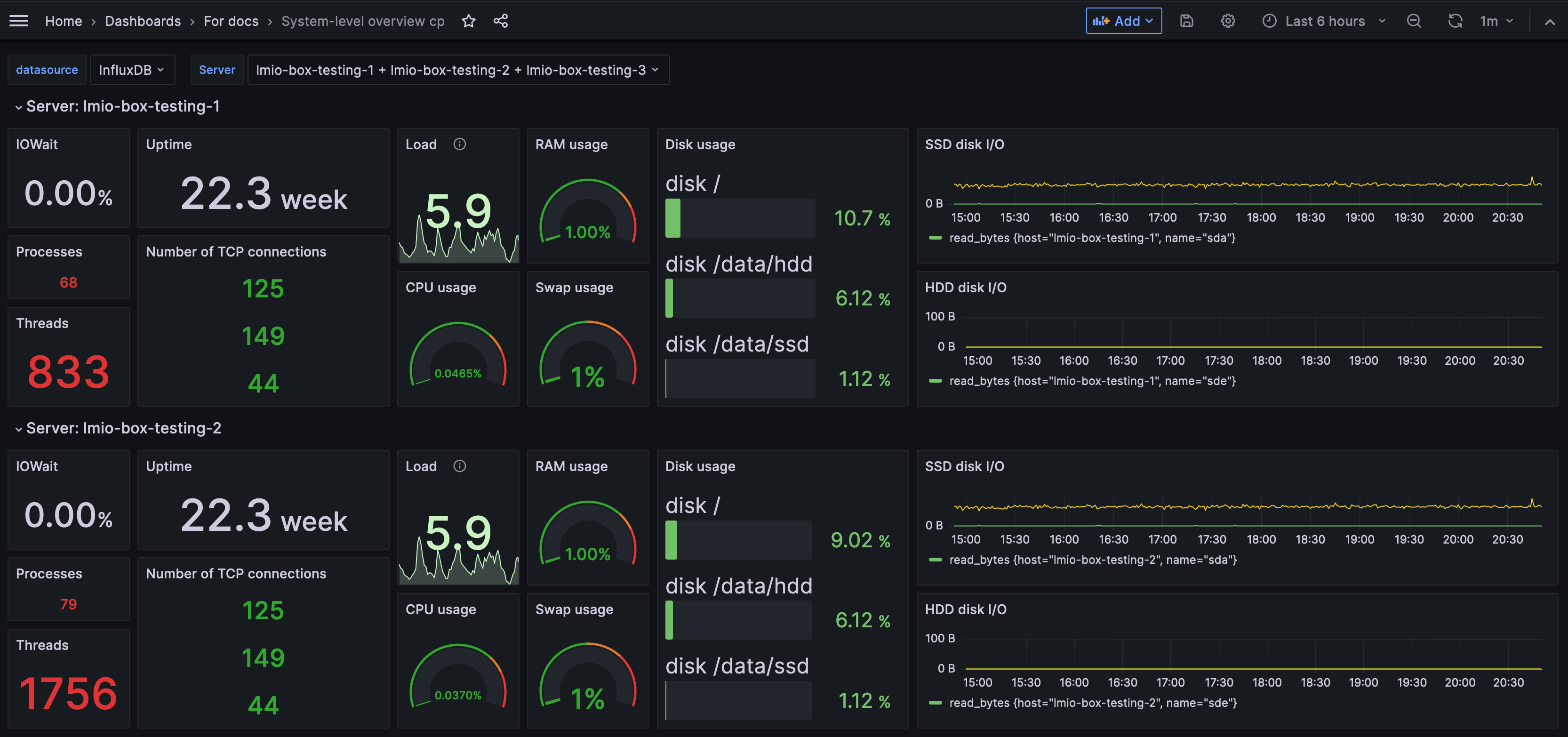

TeskaLabs LogMan.io consists of a central infrastructure and log collectors, that resides on monitored systems such as servers or network appliances. Log collectors collect various logs (operation system, applications, databases) and system metrics such as CPU usage, memory usage, disk space etc. Collected events are sent in real-time to central infrastructure for consolidation, orchestration and storage. Thanks to its real-time nature, LogMan.io provides alerts for anomalous situation in perspective of system operation (e.g. is disk space running low), availability (e.g. is the application running?), business (e.g. is number of transaction below normal?) or security (e.g. any unusual access to servers?).

TeskaLabs SIEM¶

TeskaLabs SIEM is a real-time Security Information and Event Managemet tool. TeskaLabs SIEM provides real-time analysis and correlations of security events and alerts processed by a TeskaLabs LogMan.io. We designed TeskaLabs SIEM to enhance cyber security posture and compliance with regulatory.

More components

TeskaLabs SIEM and TeskaLabs LogMan.io are standalone products. Thanks to its modular architecture, these products also include other TeskaLabs technologies:

- TeskaLabs SeaCat Auth for authentification, authorization including user roles and fine-grained access control.

- TeskaLabs SP-Lang is an expression language used on many places in the product.

Made with ❤️ by TeskaLabs

TeskaLabs LogMan.io™️ is a product of TeskaLabs.

Features¶

TeskaLabs LogMan.io is a real-time SIEM with log management.

- Multitenancy: a single instance of TeskaLabs LogMan.io can serve multiple tenants (customers, departments).

- Multiuser: TeskaLabs LogMan.io can be used by unlimmited number of users simultanously.

Technologies¶

Cryptography¶

- Transport layer: TLS 1.2, TLS 1.3 and better

- Symmetric cryptography: AES-128, AES-256, AES-512

- Asymmetric cryptography: RSA, ECC

- Hash methods: SHA-256, SHA-384, SHA-512

- MAC functions: HMAC

- HSM: PKCS#11 interface

Note

TeskaLabs LogMan.io uses only strong cryptography, it means that we use only these ciphers, hashes and other algorithms that are recongized as secure by cryptographic comunity and by organizations such as ENISA or NIST.

Supported Log Sources¶

TeskaLabs LogMan.io supports a variety of different technologies, which we have listed below.

Formats¶

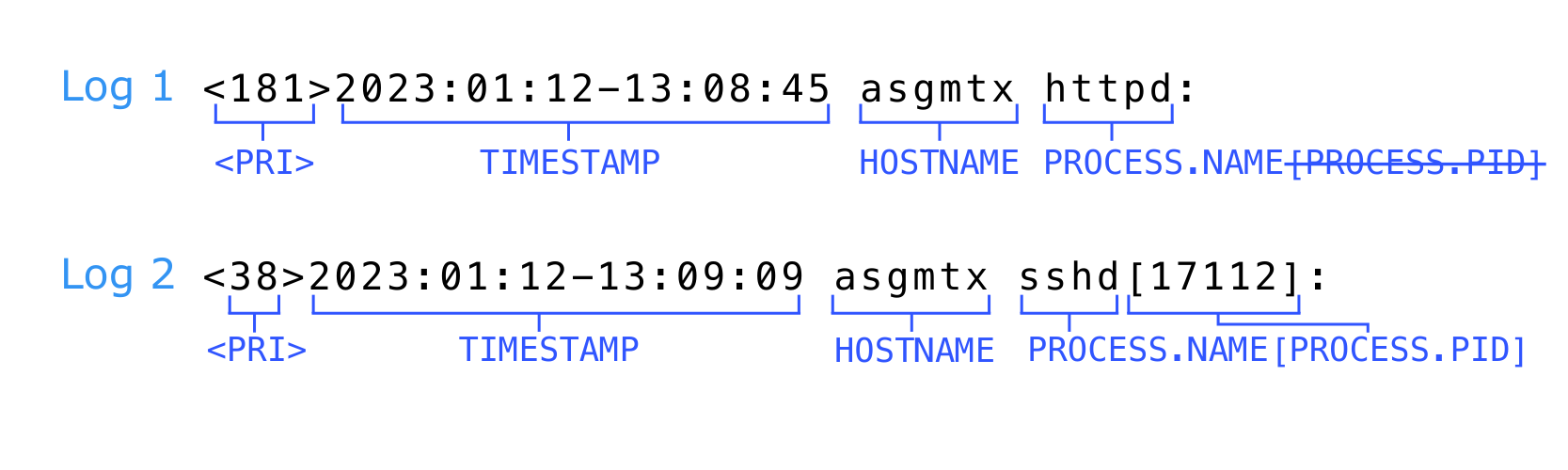

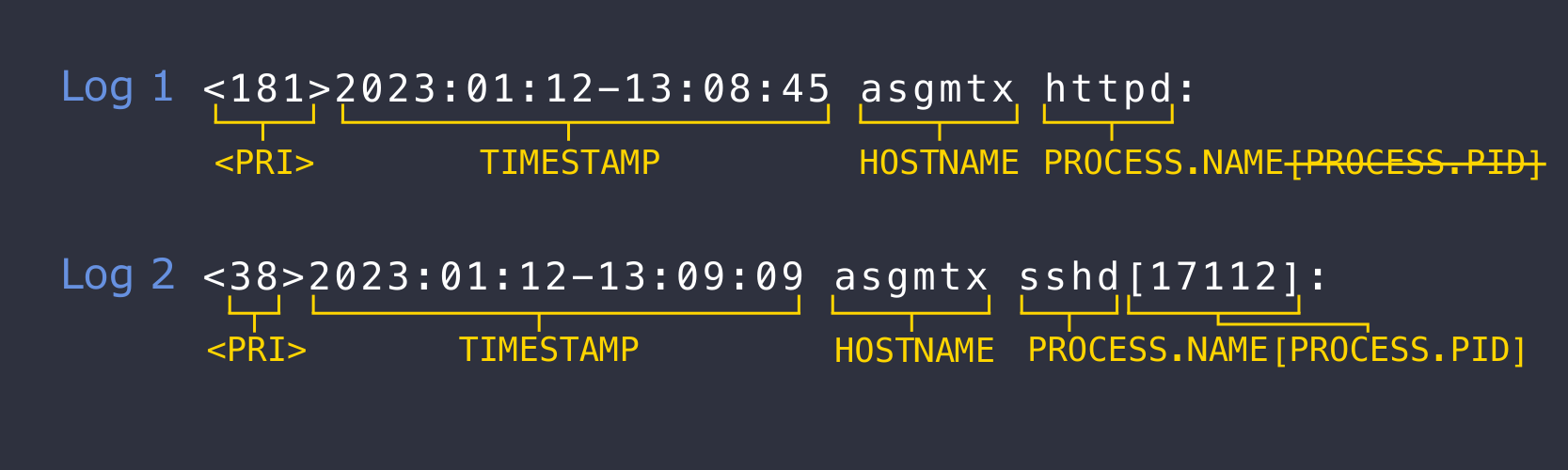

- Syslog RFC 5424 (IEFT)

- Syslog RFC 3164 (BSD)

- Syslog RFC 3195 (BEEP profile)

- Syslog RFC 6587 (Frames over TCP)

- Reliable Event Logging Protocol (REPL), including SSL

- Windows Event Log

- SNMP

- ArcSight CEF

- LEEF

- JSON

- XML

- YAML

- Avro

- Custom/raw log format

And many more.

Info

Syslog protocols can be transported over TCP, UDP and TLS/SSL.

Vendors and Products¶

Cisco¶

- Cisco Firepower Threat Defense (FTD)

- Cisco Adaptive Security Appliance (ASA)

- Cisco Identity Services Engine (ISE)

- Cisco Meraki (MX, MS, MR devices)

- Cisco Catalyst Switches

- Cisco IOS

- Cisco WLC

- Cisco ACS

- Cisco SMB

- Cisco UCS

- Cisco IronPort

- Cisco Nexus

- Cisco Routers

- Cisco VPN

- Cisco Umbrella

Palo Alto Networks¶

- Palo Alto Next-Generation Firewalls

- Palo Alto Panorama (Centralized Management)

- Palo Alto Traps (Endpoint Protection)

Fortinet¶

- FortiGate (Next-Generation Firewalls)

- FortiSwitch (Switches)

- FortiAnalyzer (Log Analytics)

- FortiMail (Email Security)

- FortiWeb (Web Application Firewall)

- FortiADC

- FortiDDos

- FortiSandbox

Juniper Networks¶

- Juniper SRX Series (Firewalls)

- Juniper MX Series (Routers)

- Juniper EX Series (Switches)

Check Point Software Technologies¶

- Check Point Security Gateways

- Check Point SandBlast (Threat Prevention)

- Check Point CloudGuard (Cloud Security)

Microsoft¶

- Microsoft Windows (Operating System)

- Microsoft Azure (Cloud Platform)

- Microsoft SQL Server (Database)

- Microsoft IIS (Web Server)

- Microsoft Office 365

- Microsoft Exchange

- Microsoft Sharepoint

Linux¶

- Ubuntu (Distribution)

- CentOS (Distribution)

- Debian (Distribution)

- Red Hat Enterprise Linux (Distribution)

- IPTables

- nftables

- Bash

- Cron

- Kernel (dmesg)

Oracle¶

- Oracle Database

- Oracle WebLogic Server (Application Server)

- Oracle Cloud

Amazon Web Services (AWS)¶

- Amazon EC2 (Virtual Servers)

- Amazon RDS (Database Service)

- AWS Lambda (Serverless Computing)

- Amazon S3 (Storage Service)

VMware¶

- VMware ESXi (Hypervisor)

- VMware vCenter Server (Management Platform)

F5 Networks¶

- F5 BIG-IP (Application Delivery Controllers)

- F5 Advanced Web Application Firewall (WAF)

Barracuda Networks¶

- Barracuda CloudGen Firewall

- Barracuda Web Application Firewall

- Barracuda Email Security Gateway

Sophos¶

- Sophos XG Firewall

- Sophos UTM (Unified Threat Management)

- Sophos Intercept X (Endpoint Protection)

Aruba Networks (HPE)¶

- Aruba Switches

- Aruba Wireless Access Points

- Aruba ClearPass (Network Access Control)

- Aruba Mobility Controller

HPE¶

- iLO

- IMC

- HPE StoreOnce

- HPE Primera Storage

- HPE 3PAR StoreServ

Trend Micro¶

- Trend Micro Deep Security

- Trend Micro Deep Discovery

- Trend Micro TippingPoint (Intrusion Prevention System)

- Trend Micro Endpoint Protection Manager

- Trend Micro Apex One

Fidelis¶

- Fidelis Elevate

Zscaler¶

- Zscaler Internet Access (Secure Web Gateway)

- Zscaler Private Access (Remote Access)

Akamai¶

- Akamai (Content Delivery Network and Security)

- Akamai Kona Site Defender (Web Application Firewall)

- Akamai Web Application Protector

Imperva¶

- Imperva Web Application Firewall (WAF)

- Imperva Database Security (Database Monitoring)

SonicWall¶

- SonicWall Next-Generation Firewalls

- SonicWall Email Security

- SonicWall Secure Mobile Access

WatchGuard Technologies¶

- WatchGuard Firebox (Firewalls)

- WatchGuard XTM (Unified Threat Management)

- WatchGuard Dimension (Network Security Visibility)

Apple¶

- macOS (Operating System)

Apache¶

- Apache Cassandra (Database)

- Apache HTTP Server

- Apache Kafka

- Apache Tomcat

- Apache Zookeeper

NGINX¶

- NGINX (Web Server and Reverse Proxy Server)

Docker¶

- Docker (Container Platform)

Kubernetes¶

- Kubernetes (Container Orchestration)

Atlassian¶

- Jira (Issue and Project Tracking)

- Confluence (Collaboration Software)

- Bitbucket (Code Collaboration and Version Control)

Cloudflare¶

- Cloudflare (Content Delivery Network and Security)

SAP¶

- SAP HANA (Database)

Balabit¶

- syslog-ng

Open-source¶

- PostgreSQL (Database)

- MySQL (Database)

- OpenSSH (Remote access)

- Dropbear SSH (Remote access)

- Jenkins (Continuous Integration and Continuous Delivery)

- rsyslog

- GenieACS

- Haproxy

- spamassasin

- FreeRadius

- Bind

- DHCP

- Postfix

- Squid Cache

- Zabbix

- FileZilla

IBM¶

- IBM Db2 (Database)

- IBM AIX (Operating System)

- IBM i (Operating System)

Brocade¶

- Brocade Switches

Google¶

- Google Cloud

- Pub/Sub & BigQuery

Elastic¶

- Logstash

- Filebeat

- Winlogbeat

- Auditbeat

- Metricbeat

- Packetbeat

- Heartbeat

- ... and beats from the community list

- ElasticSearch

Citrix¶

- Citrix Virtual Apps and Desktops (Virtualization)

- Citrix Hypervisor (Virtualization)

- Citrix ADC, NetScaler

- Citrix Gateway (Remote access)

- Citrix SD-WAN

- Citrix Endpoint Management (MDM, MAM)

Dell¶

- Dell EMC Isilon (network-attached storage)

- Dell PowerConnect Switches

- Dell W-Series (Access points)

- Dell iDRAC

- Dell Force10 Switches

FlowMon¶

- Flowmon Collector

- Flowmon Probe

- Flowmon ADS

- Flowmon FPI

- Flowmon APM

GreyCortex¶

- GreyCortex Mendel

Huawei¶

- Huawei Routers

- Huawei Switches

- Huawei Unified Security Gateway (USG)

Synology¶

- Synology NAS

- Synology SAN

- Synology NVR

- Synology Wi-Fi routers

Ubiquity¶

- UniFi

Avast¶

- Avast Antivirus

Kaspersky¶

- Kaspesky Endpoint Security

- Kaspesky Security Center

Kerio¶

- Kerio Connect

- Kerio Control

- Kerio Clear Web

Symantec¶

- Symantec Endpoint Protection Manager

- Symantec Messaging Gateway

ESET¶

- ESET Antivirus

- ESET Remote Administrator

AVG¶

- AVG Antivirus

Extreme Networks¶

- ExtremeXOS

IceWarp¶

- IceWarp Mail Server

Mikrotik¶

- Mikrotic Routers

- Mikrotik Switches

Pulse Secure¶

- Pulse Connect Secure SSL VPN

QNAP¶

- QNAP NAS

Safetica¶

- Safetica DLP

Veeam¶

- Veeam Backup and Restore

SuperMicro¶

- IPMI

Mongo¶

- MongoDB

YSoft¶

- SafeQ

Bitdefender¶

- Bitdefender GravityZone

- Bitdefender Network Traffic Security Analytics (NTSA)

- Bitdefender Advanced Threat Intelligence

Stapro¶

- Stapro FONS Akord

This list is not exhaustive, as there are many other vendors and products that can send logs to TeskaLabs LogMan.io using standard protocols such as Syslog. Please contact us if you seek for a specific technology to be integrated.

SQL log extraction¶

TeskaLabs LogMan.io can extract logs from various SQL databases using ODBC (Open Database Connectivity).

Among supported databases are:

- PostgreSQL

- Oracle Database

- IBM Db2

- MySQL

- SQLite

- MariaDB

- SAP HANA

- Sybase ASE

- Informix

- Teradata

- Amazon RDS (Relational Database Service)

- Google Cloud SQL

- Azure SQL Database

- Snowflake

Trademarks

All trademarks ortrade names mentioned or used are the property of their respective owners.

TeskaLabs LogMan.io Architecture¶

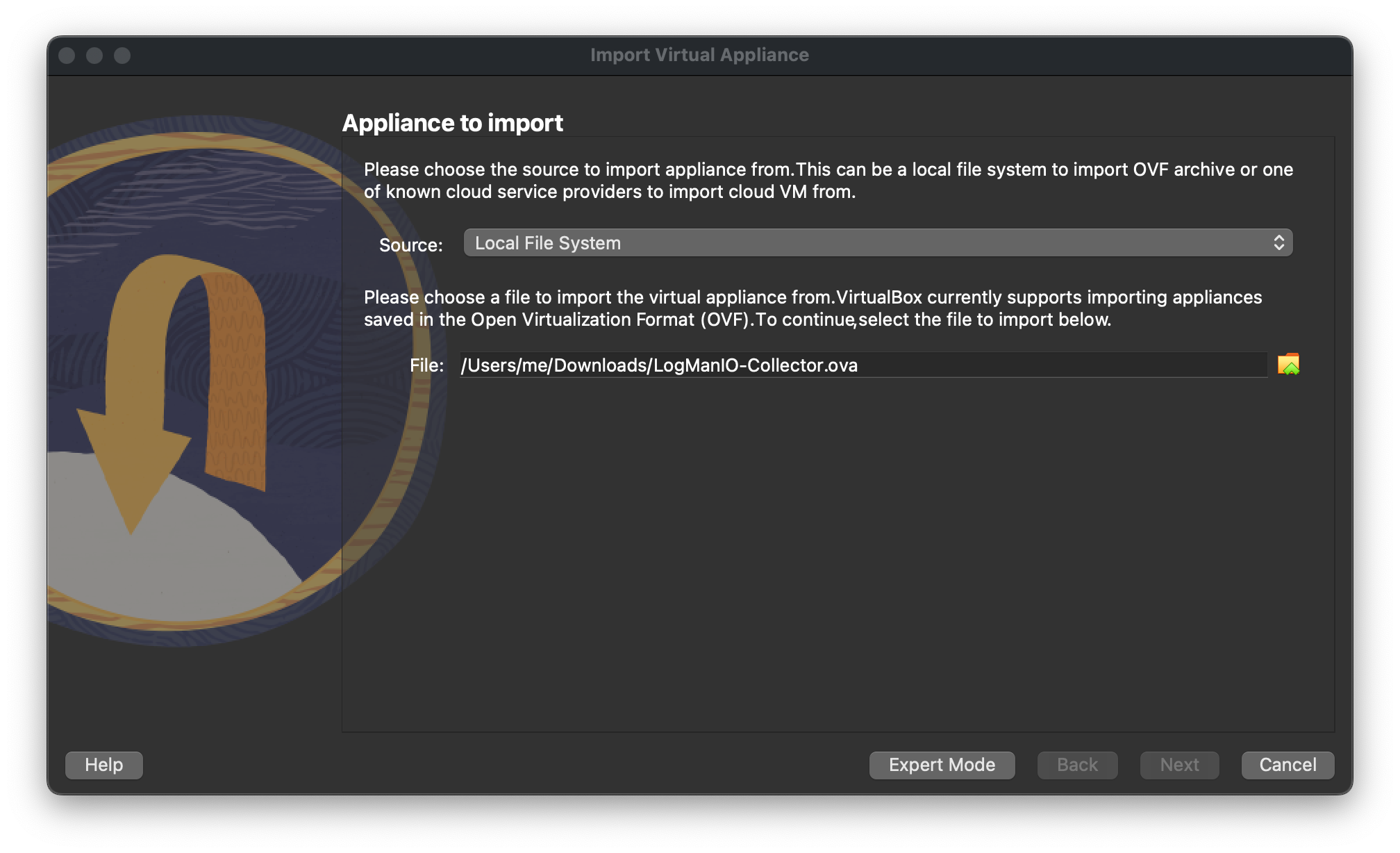

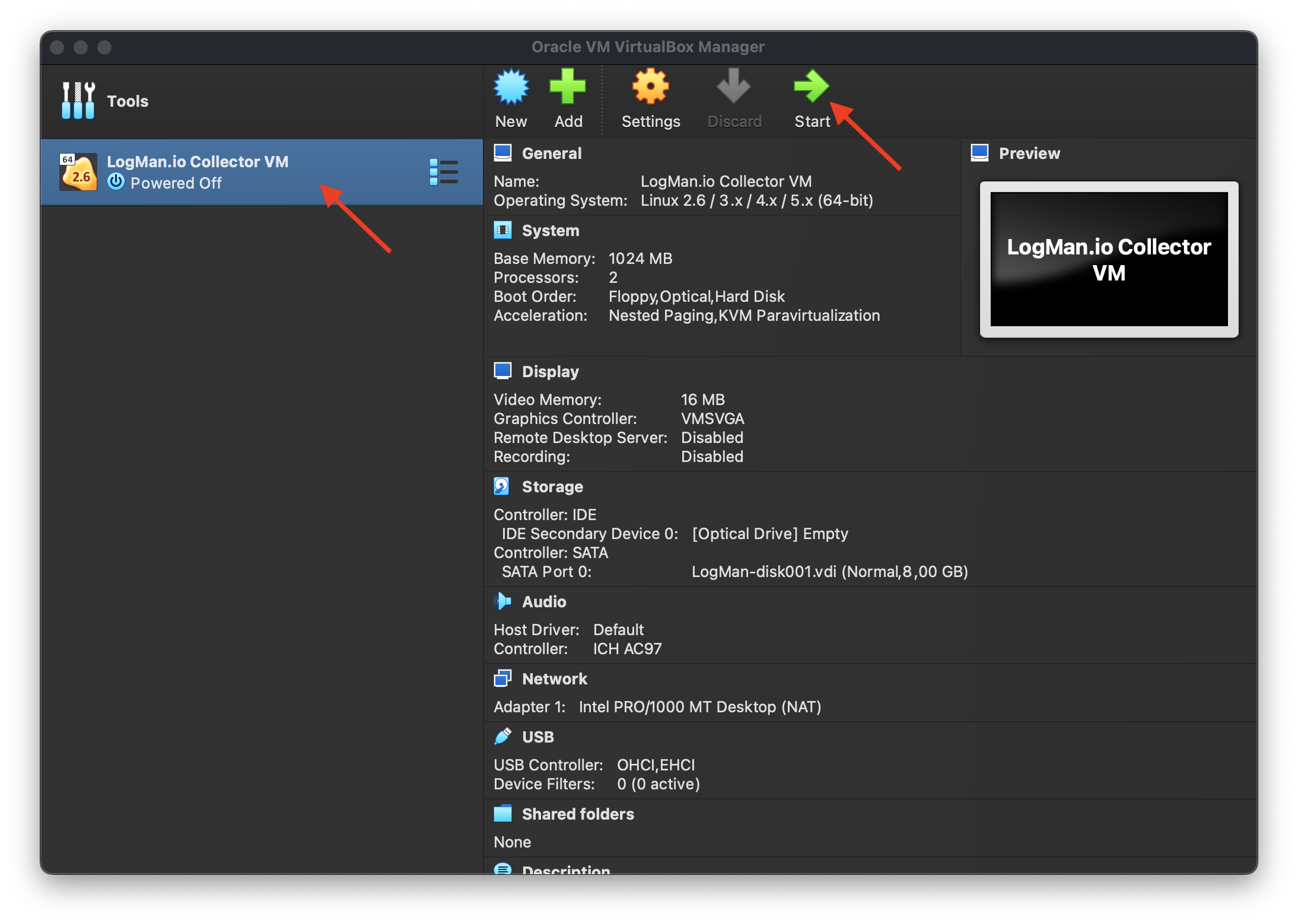

lmio-collector¶

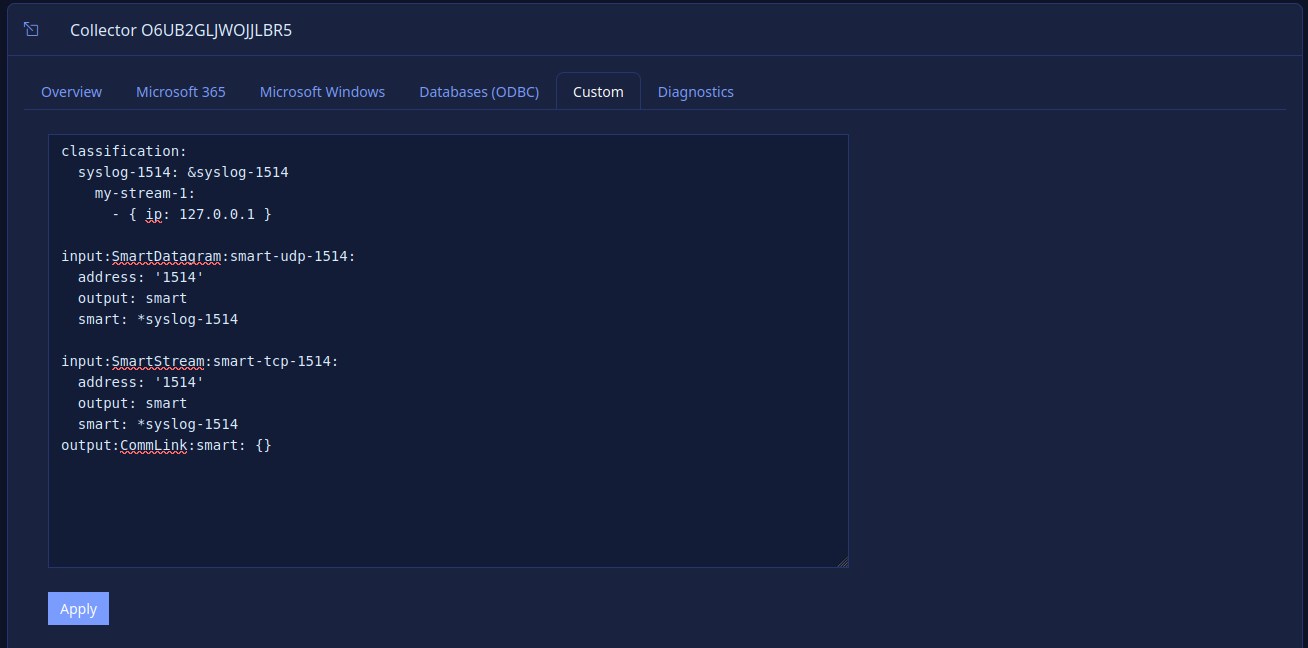

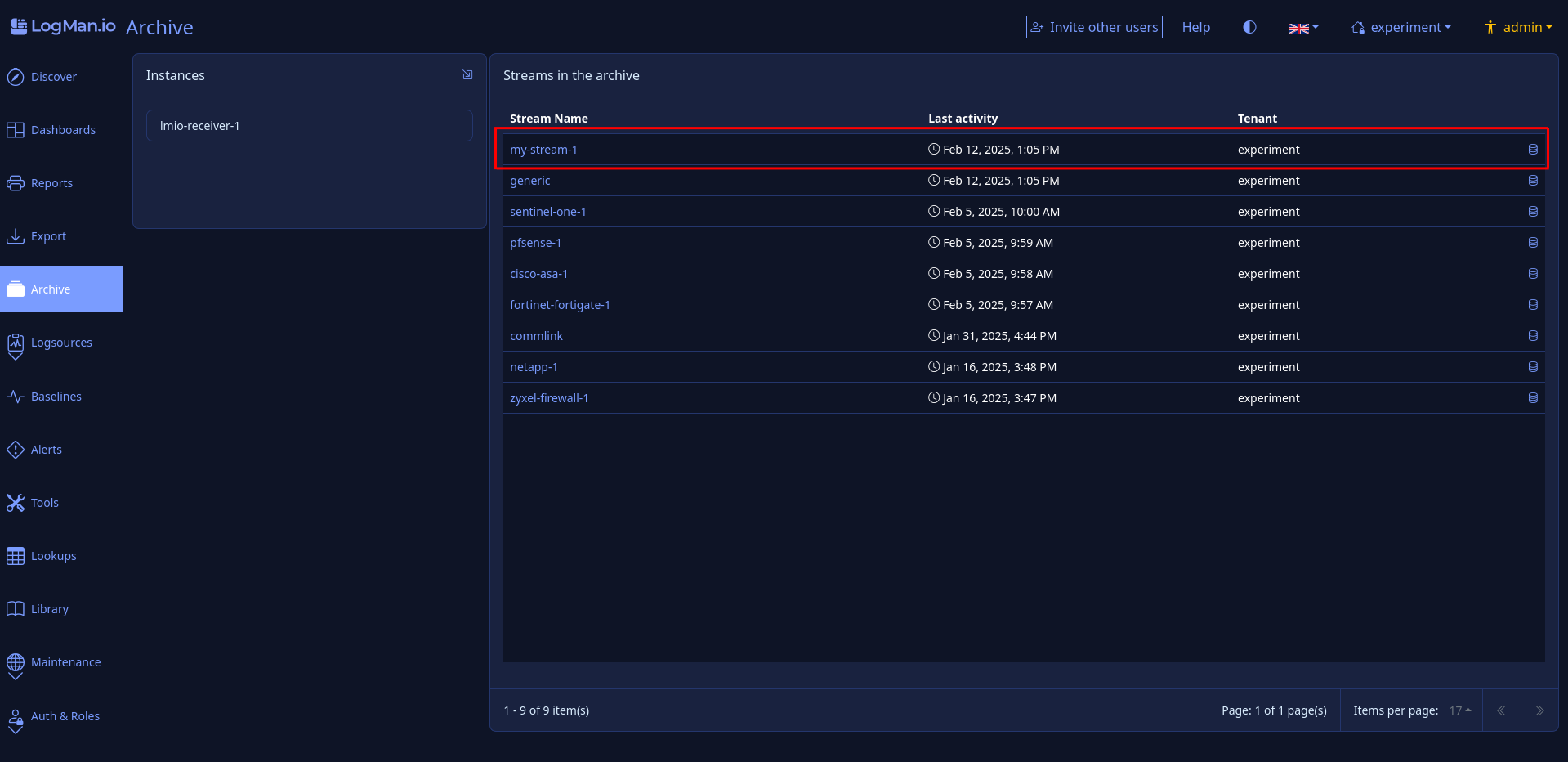

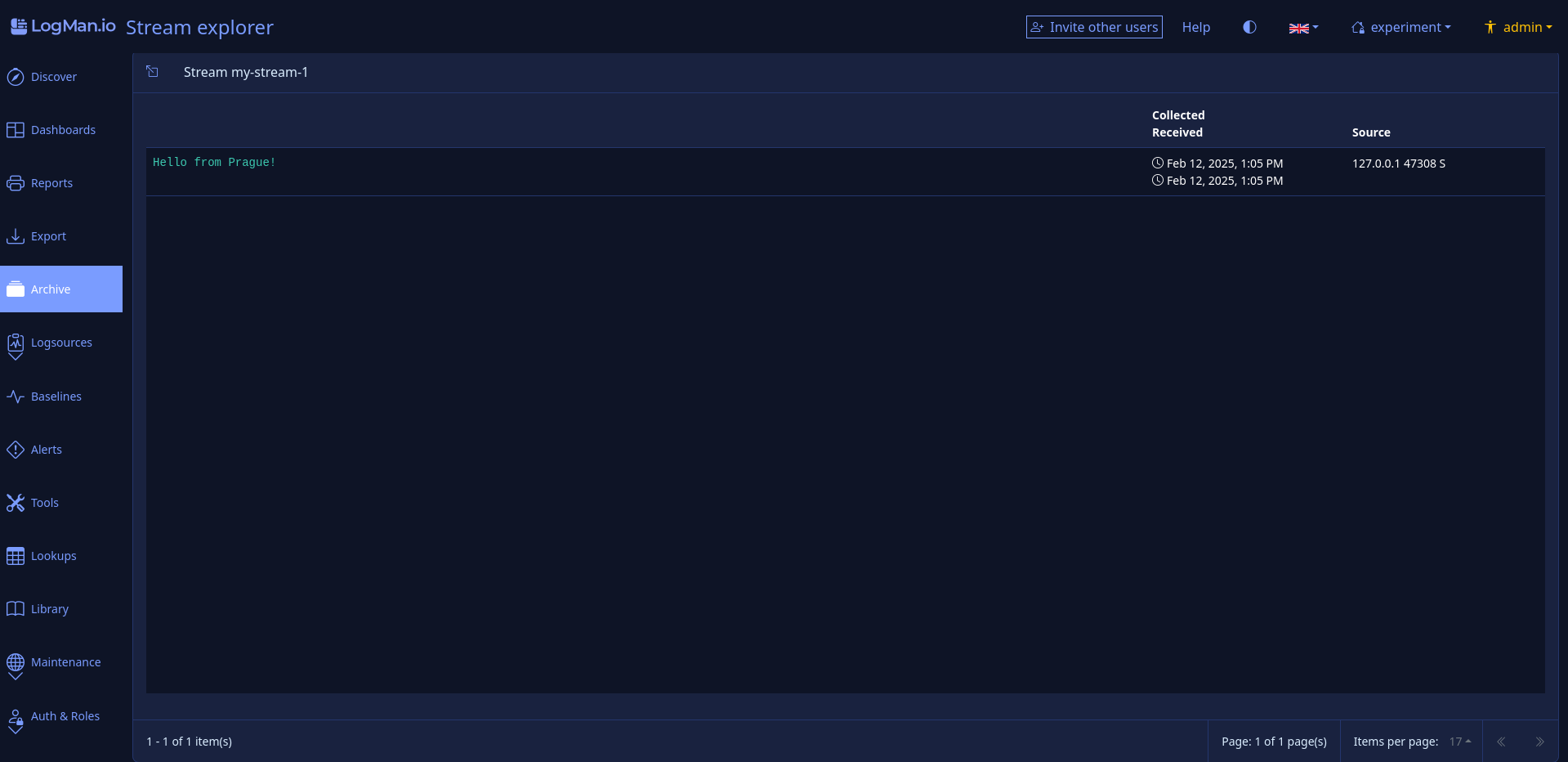

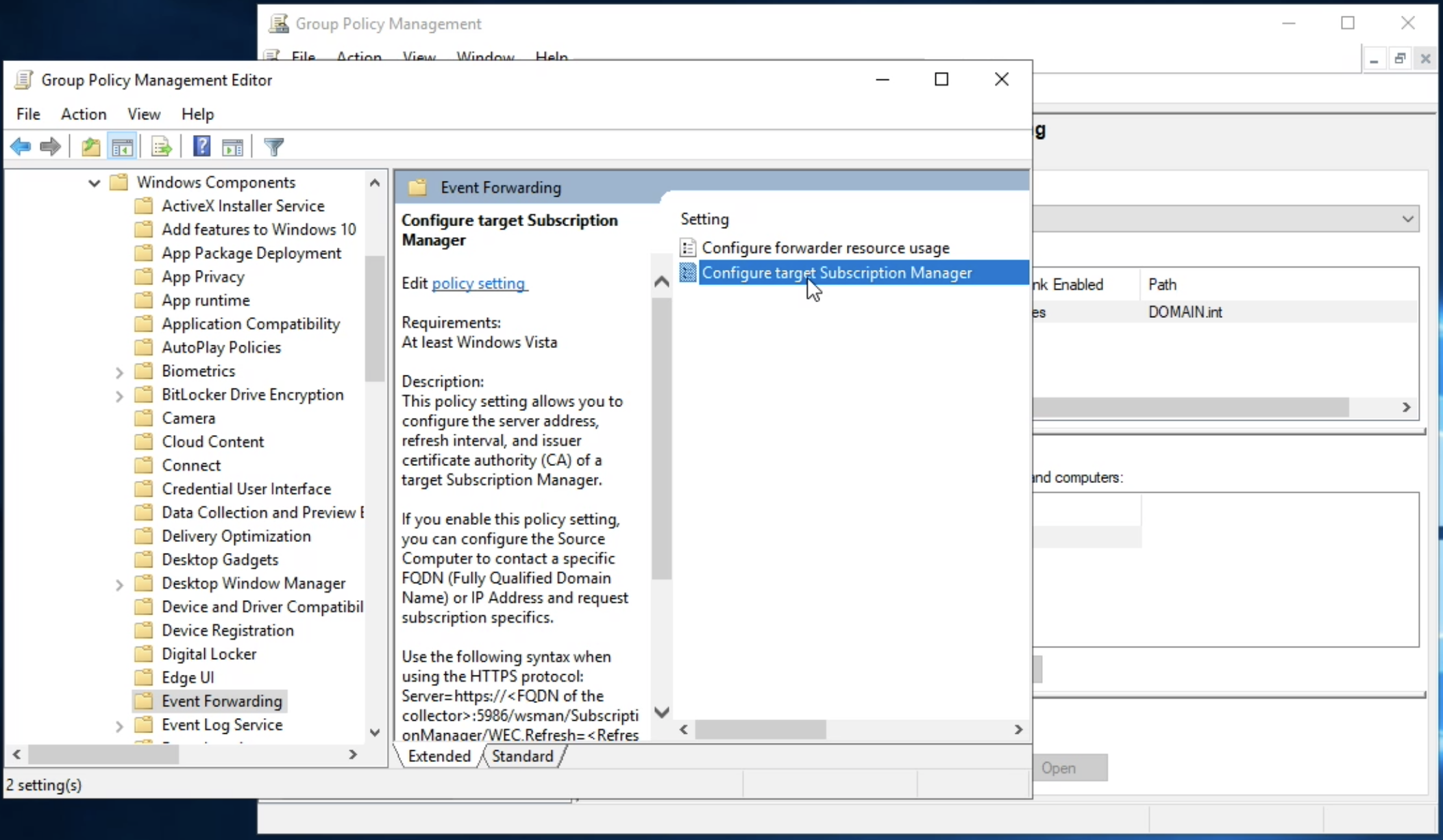

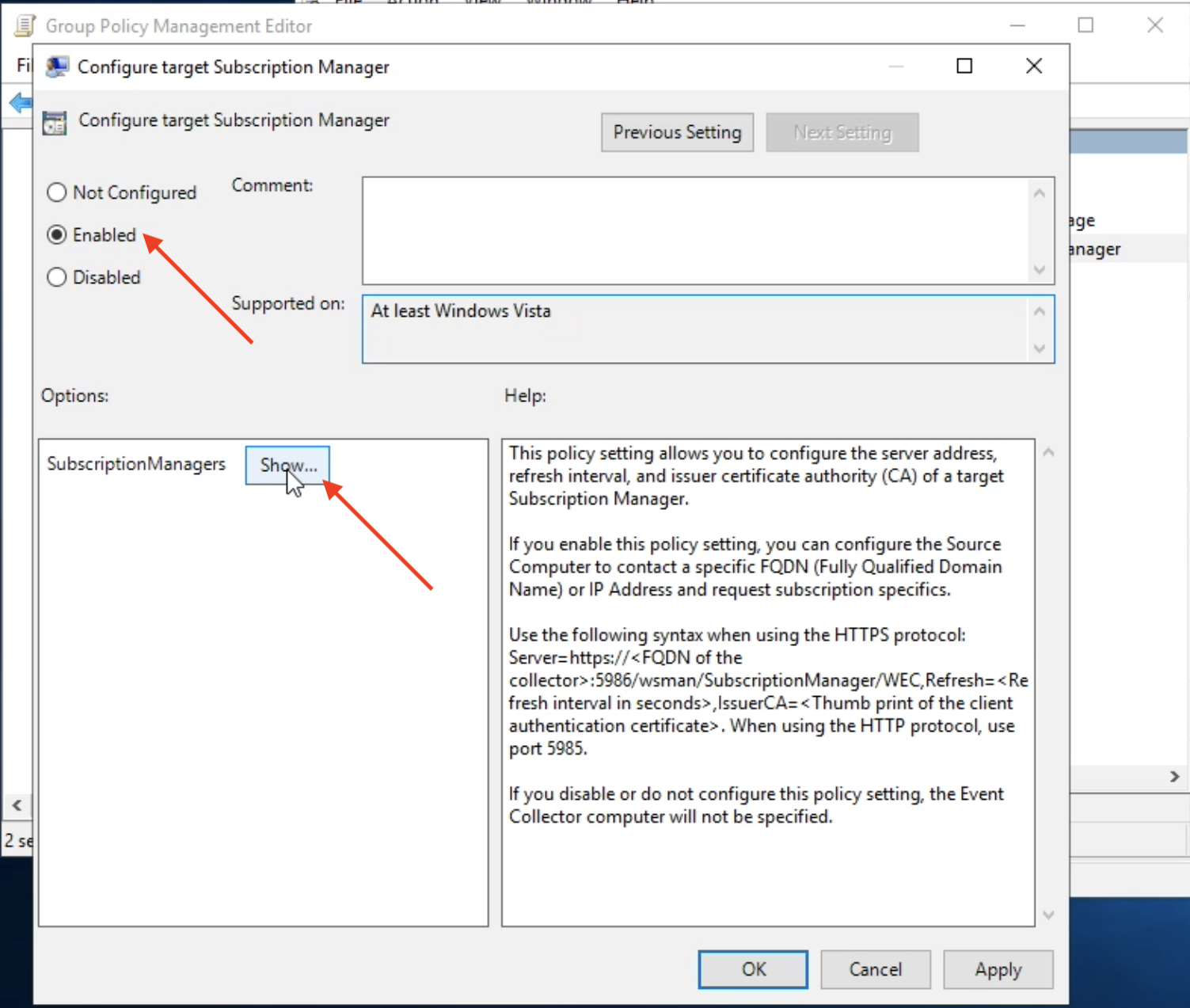

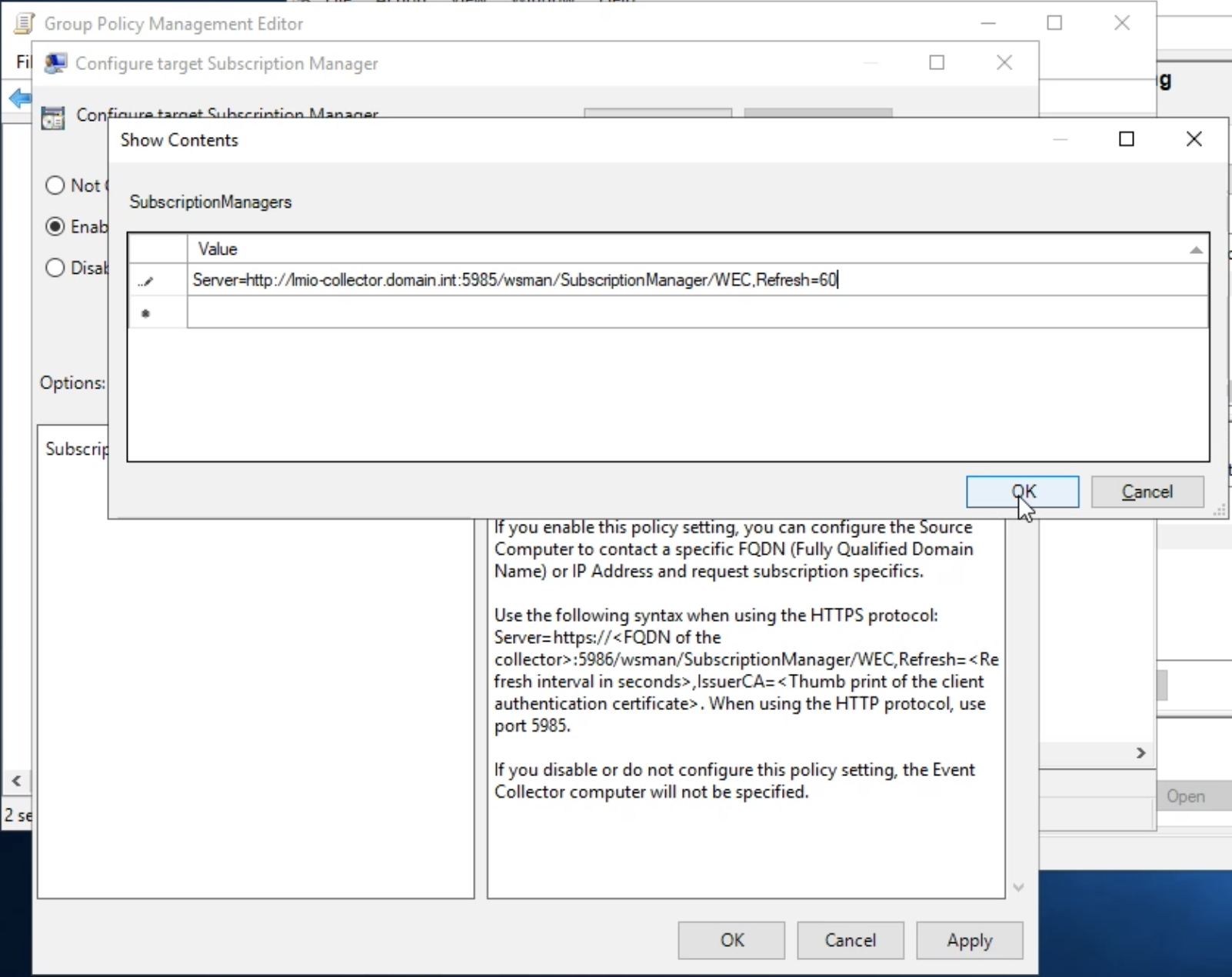

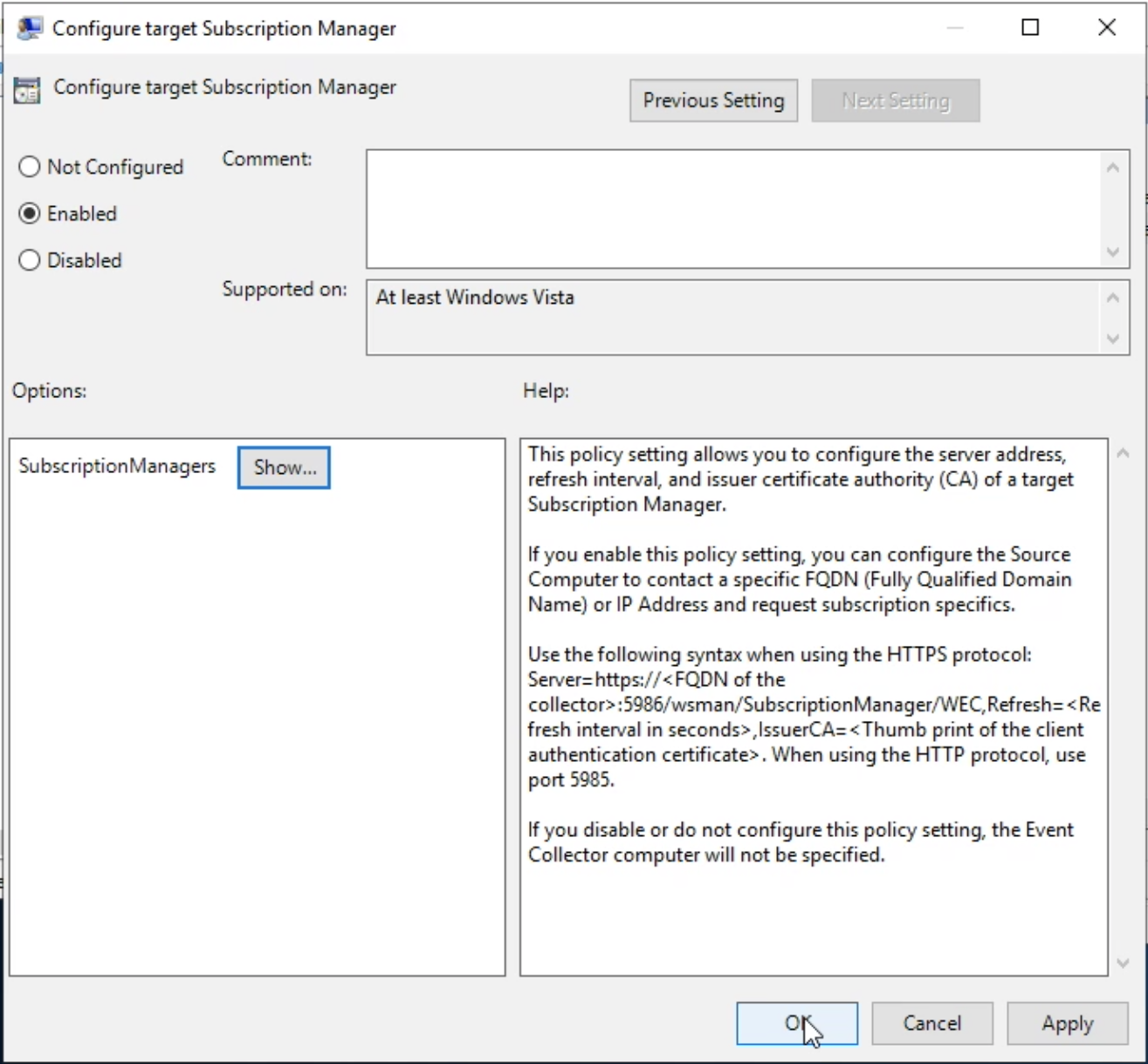

LogMan.io Collector serves to receive log lines from various sources such as SysLog NG, files, Windows Event Forwarding, databases via ODBC connectors and so on. The log lines may be further processed by a declarative processor and put into LogMan.io Ingestor via WebSocket.

lmio-ingestor¶

LogMan.io Ingestor receives events via WebSocket, transforms them to Kafka-readable format

and put them to Kafka collected- topic. There are multiple ingestors for different

event formats, such as SysLog, databases, XML and so on.

lmio-parser¶

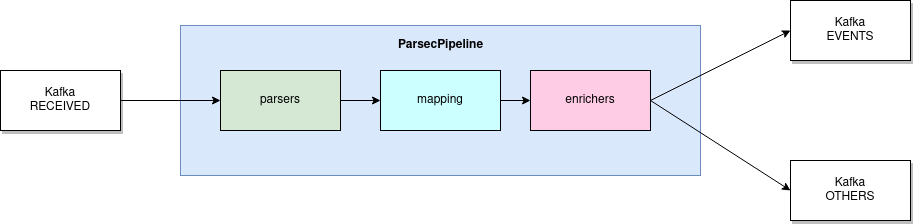

LogMan.io Parser runs in multiple instances to receive different formats of incoming events (different Kafka topics) and/or the same events (the instances then run in the same Kafka group to distribute events among them). LogMan.io Parser loads the LogMan.io Library via ZooKeeper or from files to load declarative parsers and enrichers from configured parsing groups.

If the events were parsed by the loaded parser, they are put to lmio-events Kafka topic, otherwise

they enter the lmio-others Kafka topic.

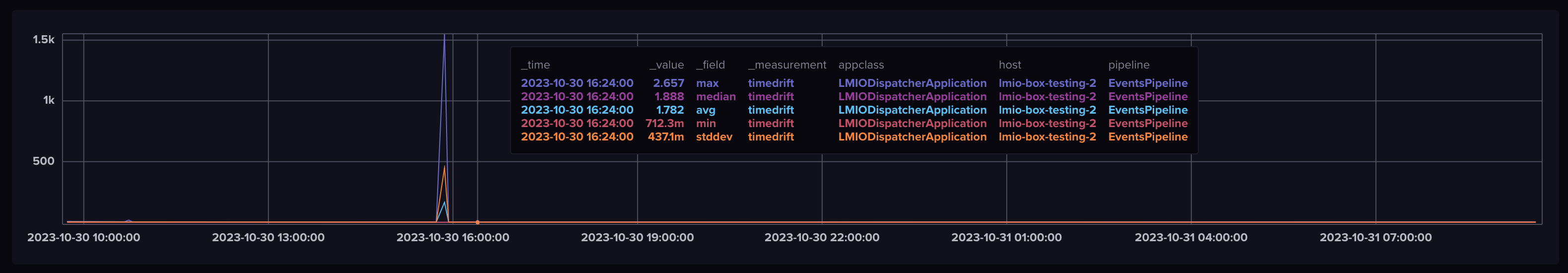

lmio-dispatcher¶

LogMan.io Dispatcher loads events from lmio-events Kafka topic and sends them both to all

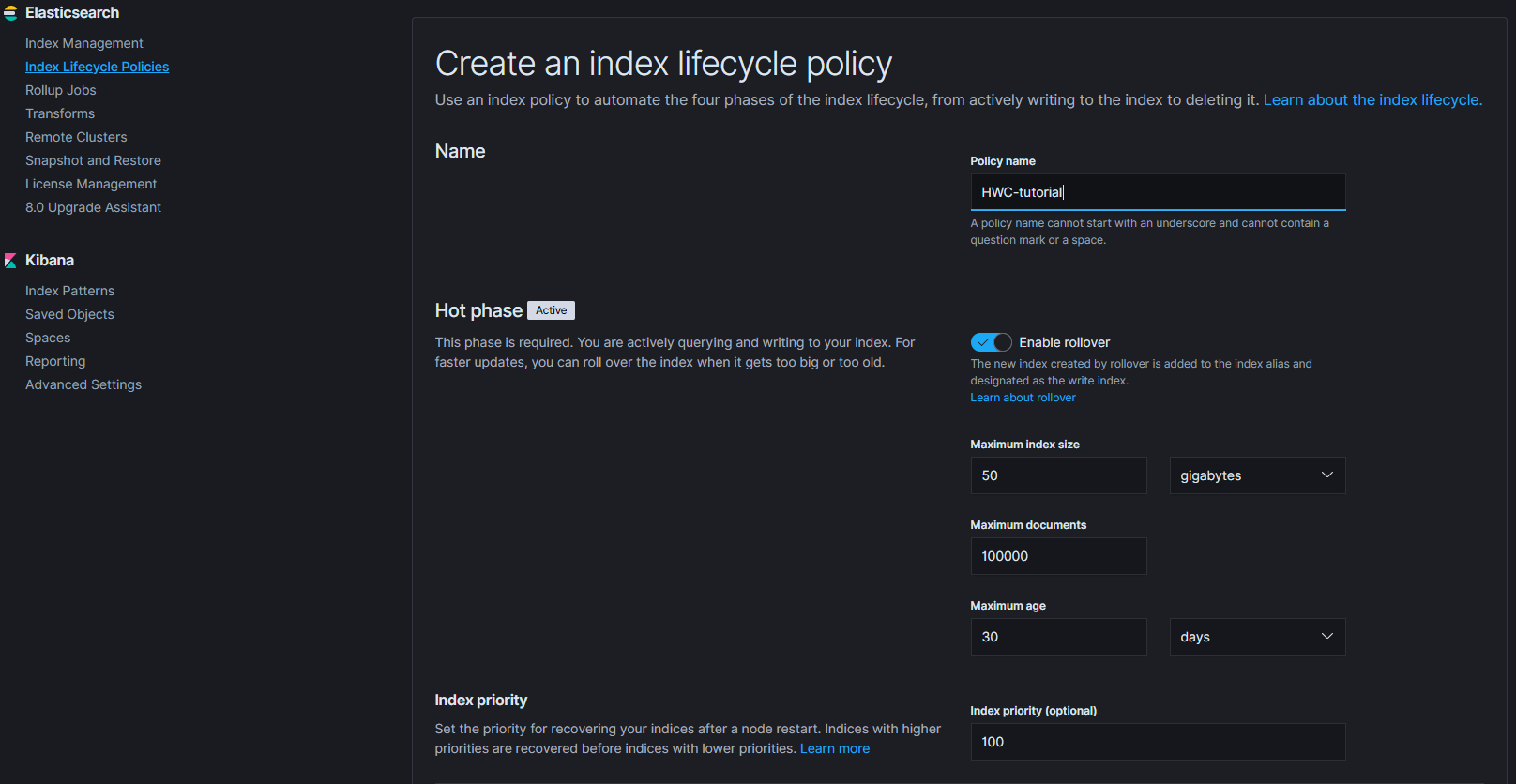

subscribed (via ZooKeeper) LogMan.io Correlator instances and ElasticSearch in the

appropriate index, where all events can be queried and visualized using Kibana.

LogMan.io Dispatcher runs in multiple instances as well.

lmio-correlator¶

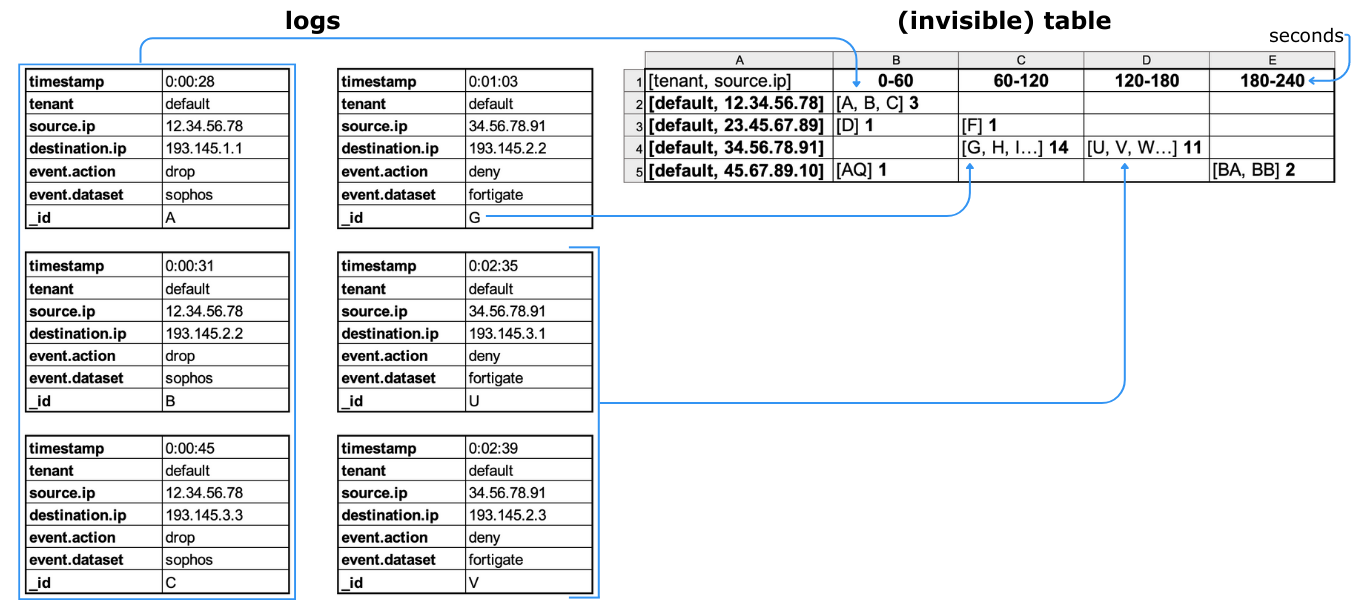

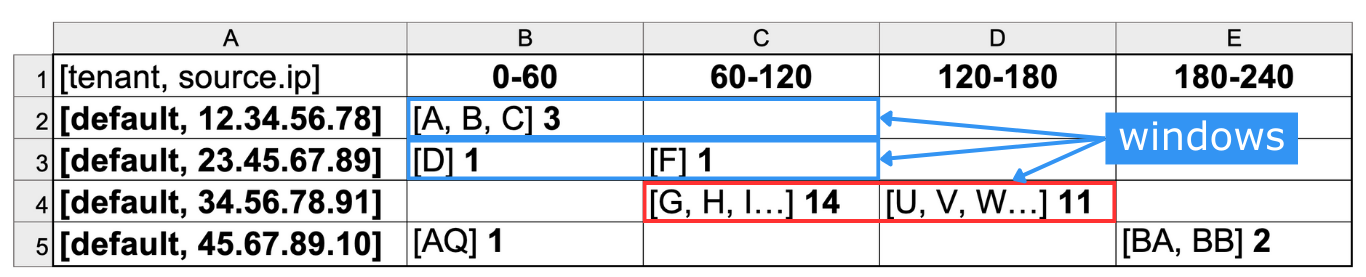

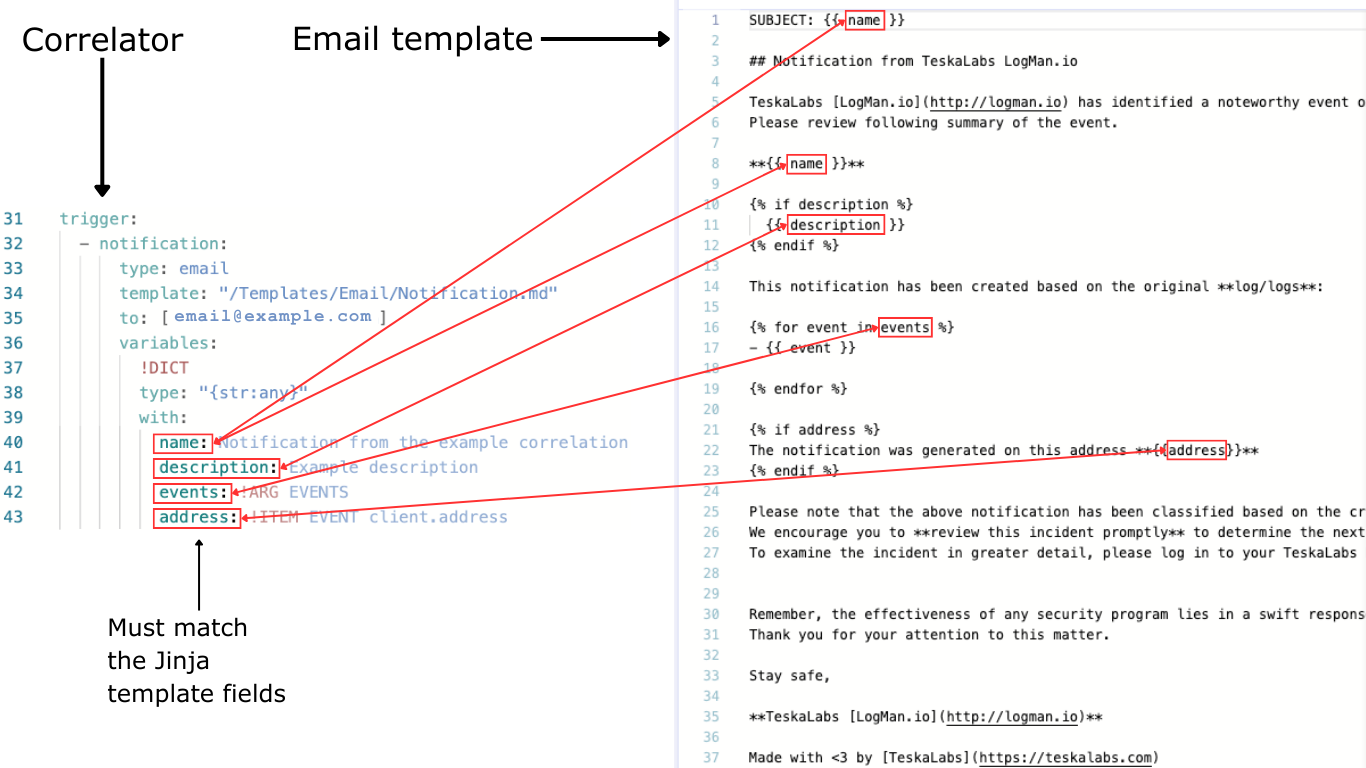

LogMan.io Correlator uses ZooKeeper to subscribe to all LogMan.io Dispatcher instances to receive parsed events (log lines etc.). Then LogMan.io Correlator loads the LogMan.io Library from ZooKeeper or from files to create correlators based on the declarative configuration. Events produced by correlators (Window Correlator, Match Correlator) are then handed down to LogMan.io Dispatcher and LogMan.io Watcher via Kafka.

lmio-watcher¶

LogMan.io Watcher observes changes in lookups used in LogMan.io Parsers and LogMan.io Correlators

instances. When a change occurs, all running components that use LogMan.io Library are notified

via Kafka topic lmio-lookups about the change and the lookup is updated in the ElasticSearch,

which serves as a persistent storage for all lookups.

lmio-integ¶

LogMan.io Integ allows LogMan.io to be integrated with supported external systems via expected message format and output/input protocol.

Support¶

Live help¶

Our team is available at our live support channel at Slack. You can message our internal experts directly, consult your plans, problems and challenges and even get online live help over share screen so that you don't need to be afraid of major upgrades and so on. The access is provided to customers with an active support plan.

Email support¶

Contact us at: support@teskalabs.com

Support hours¶

The 5/8 support level is available at working days based on Czech calendar, 09-18 Central European Time (Europe/Prague).

The 24/7 support level is also available, depending on your active support plan.

User Manual

Welcome¶

What's in the User Manual?

Here, you can learn how to use the TeskaLabs LogMan.io app. For information about setup, configuration, and maintenance, visit the Administration Manual or the Reference guide. If you can't find the help you need, contact Support.

Quickstart¶

Jump to:

- Get an overview of all events in your system (Home)

- Read incoming logs, and filter logs by field and time (Discover)

- View and filter your data as charts and graphs (Dashboards)

- View and print reports (Reports)

- Run, download, and manage exports (Export)

- Change your general or account settings

Some features are only visible to administrators, so you might not see all of the features that are included in the User Manual in your own version of TeskaLabs LogMan.io.

Administrator quickstart¶

Are you an administrator? Jump to:

- Add or edit files in the library, such as dashboards, reports, and exports (Library)

- Add or edit lookups (Lookups)

- Access external components that work with TeskaLabs LogMan.io (Tools)

- Change the configuration of your interface (Configuration)

- See microservices (Services)

- Manage user permissions (Auth)

Settings¶

Use these controls in the top right corner of your screen to change settings:

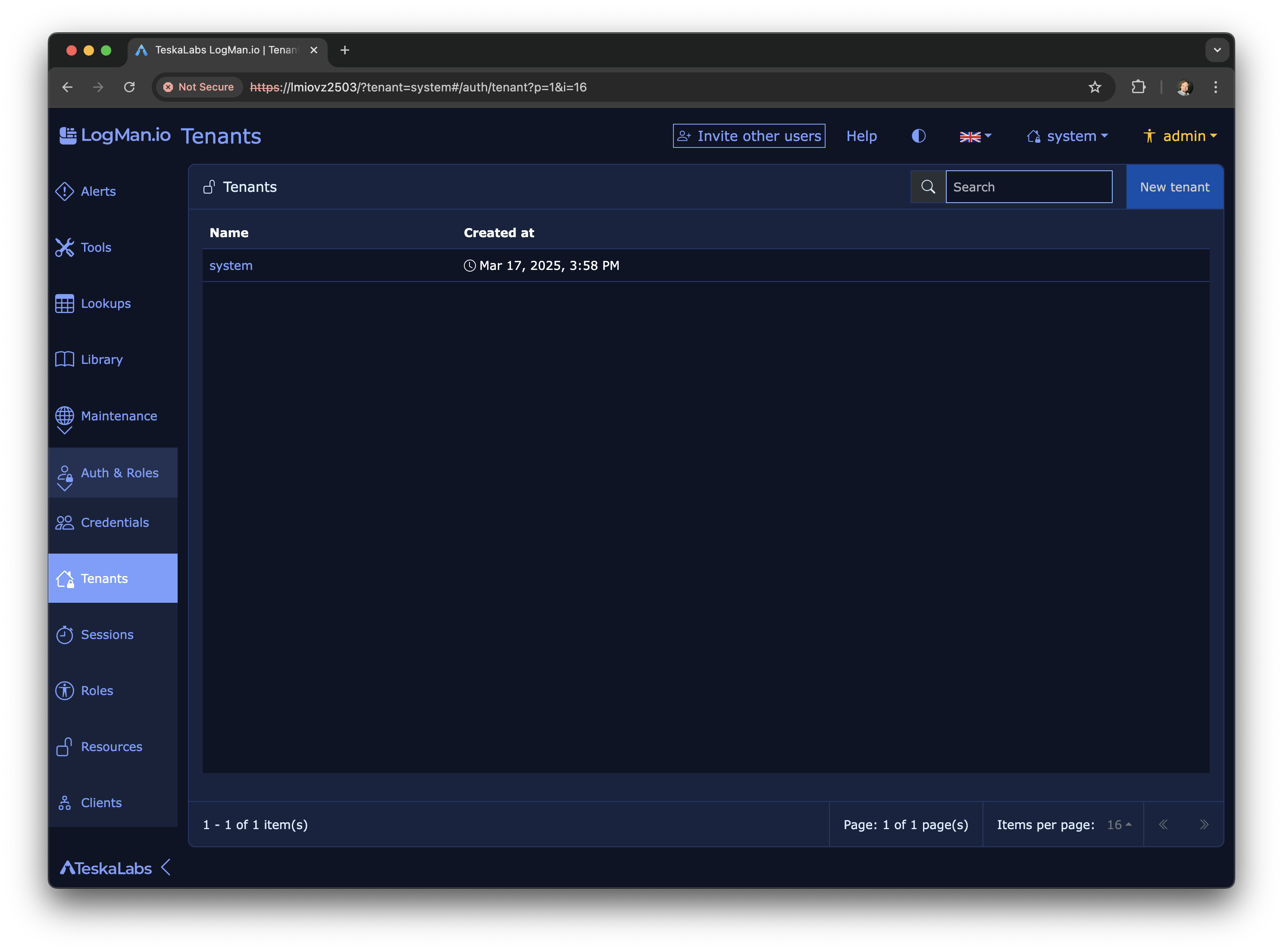

Tenants¶

A tenant is one entity collecting data from a group of sources. When you're using the program, you can only see the data belonging to the selected tenant. A tenant's data is completely separated from all other tenants' data in TeskaLabs LogMan.io (learn about multitenancy). Your company might have just one tenant, or possibly multiple tenants (for different departments, for example). If you're distributing or managing TeskaLabs LogMan.io for other clients, you have multiple tenants, at least one per client.

Tenants can be accessible by multiple users, and users can have access to multiple tenants. Learn more about tenancy here.

Tips¶

If you're new to log collection, click on the tip boxes to learn why you might want to use a feature.

Why use TeskaLabs LogMan.io?

TeskaLabs LogMan.io collects logs, which are records of every single event in your network system. This information can help you:

- Understand what's happening in your network

- Troubleshoot network problems

- Investigate security issues

Managing your account¶

Your account name is at the top right corner of your screen:

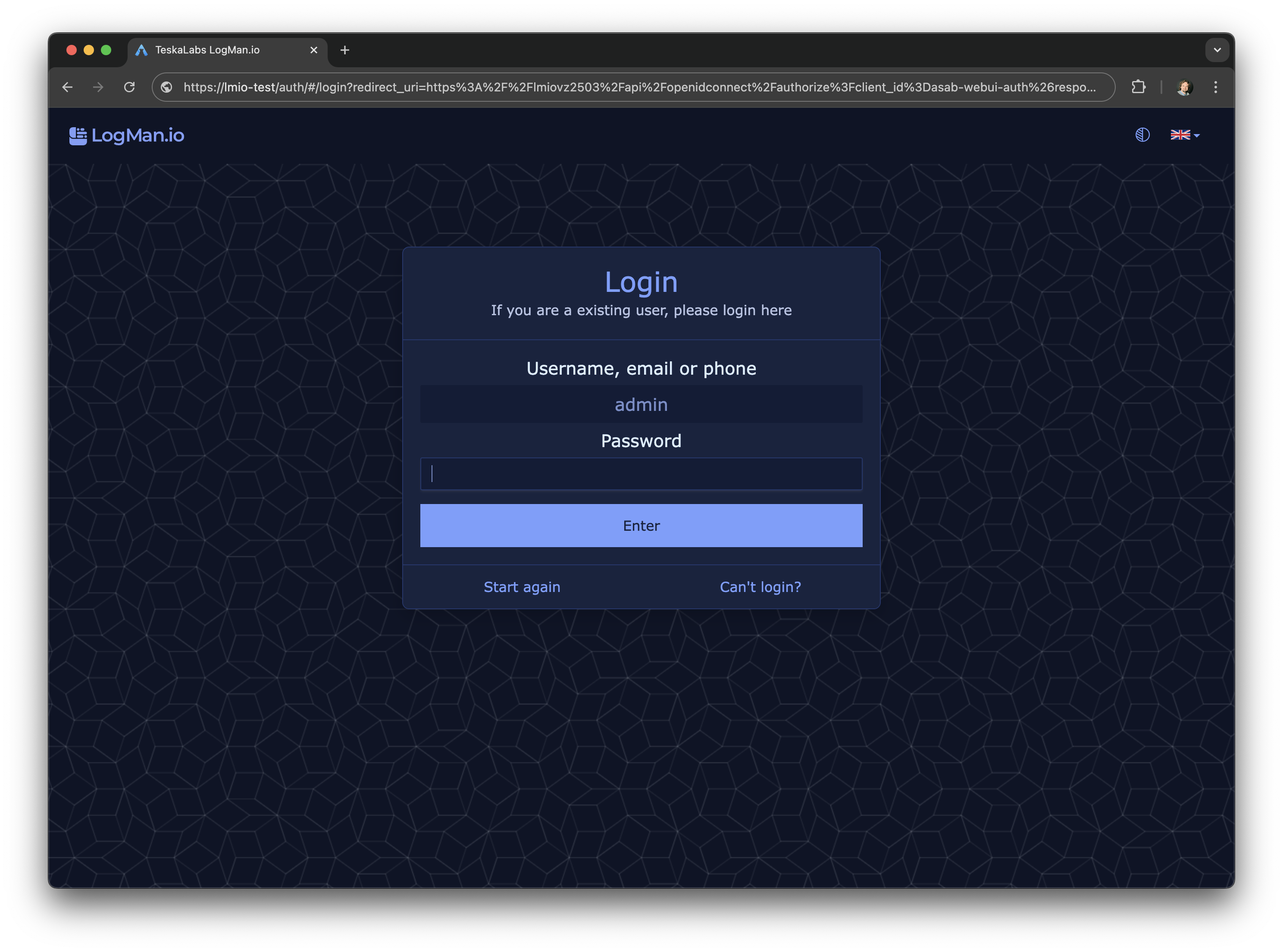

Changing your password¶

- Click on your account name.

- Click Change a password.

- Enter your current password and new password.

- Click Set password.

You should see confirmation of your password change. To return to the page you were on before changing your password, click Go back.

Changing account information¶

- Click on your account name.

- Click Manage.

- Here you can:

- Change your password

- Change your email address

- Change or add your phone number

- Log out

- Click on what you want to do, and make your changes. The changes won't be visible immediately - they'll be visible when you log out and log back in.

Seeing your access permissions¶

- Click on your account name.

- Click Access control, and you'll see what permissions you have.

Logging out¶

- Click on your account name.

- Click Logout.

You can also log out from the Manage screen.

Logging out from all devices¶

- Click on your account name.

- Click Manage.

- Click Logout from all devices.

When you log out, you'll be automatically taken to the login screen.

Using the Home page¶

The Home page gives you an overview of your data sources and critical incoming events. You'll be on the Home page by default when you log in, but you can also get to the Home page from the buttons on the left.

Viewing options¶

Chart and list view¶

To switch between chart and list view, click the list button.

Getting more details¶

Clicking on any portion of a chart takes you to Discover, where you then see the list of logs that make up this portion of the chart. From there, you can examine and filter these logs.

You can see here that Discover is automatically filtering for events from the selected dataset (from the chart on the Home page), event.dataset:devolutions.

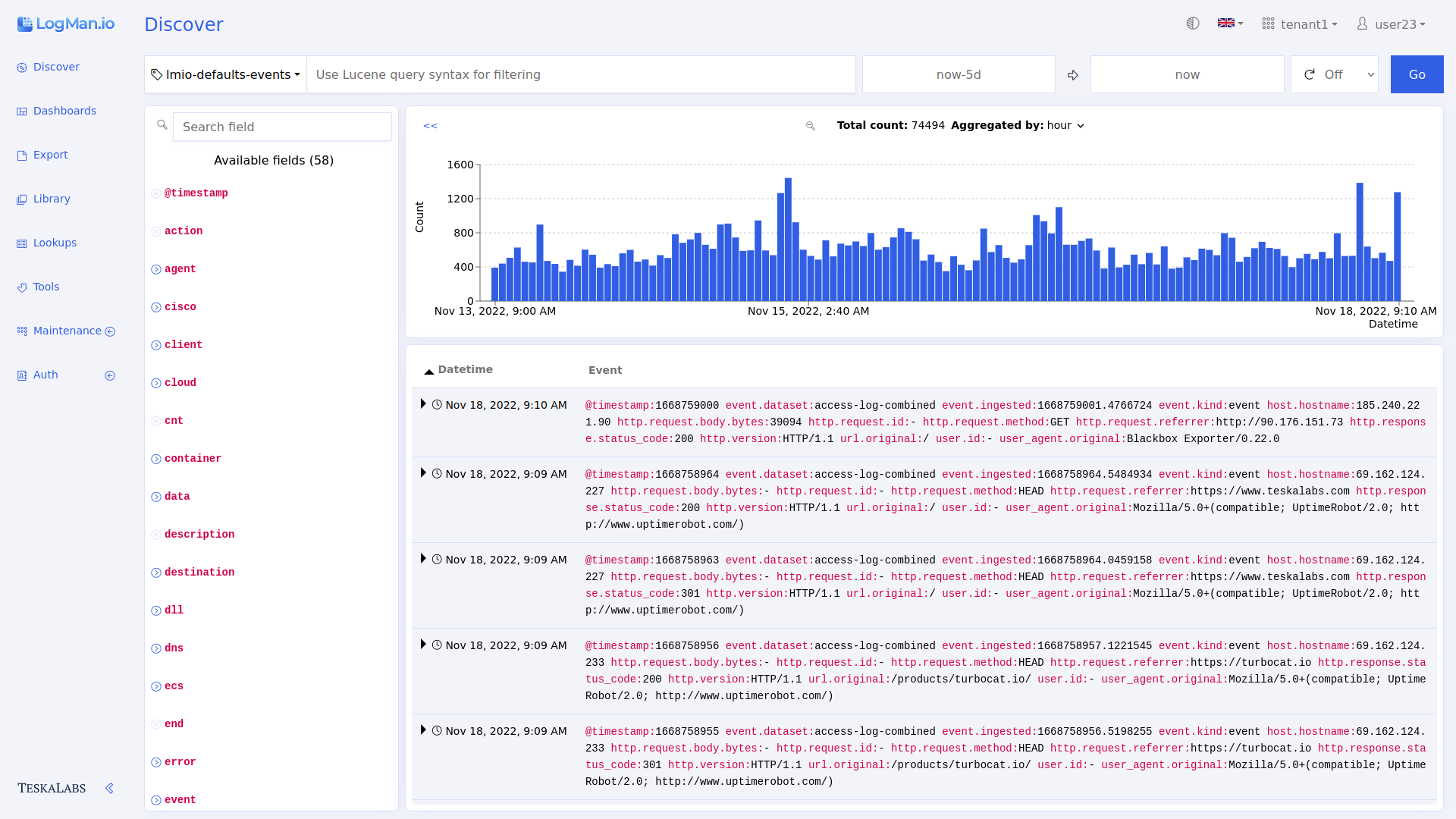

Using Discover¶

Discover gives you an overview of all logs being collected in real time. Here, you can filter the data by time and field.

Navigating Discover¶

Terms¶

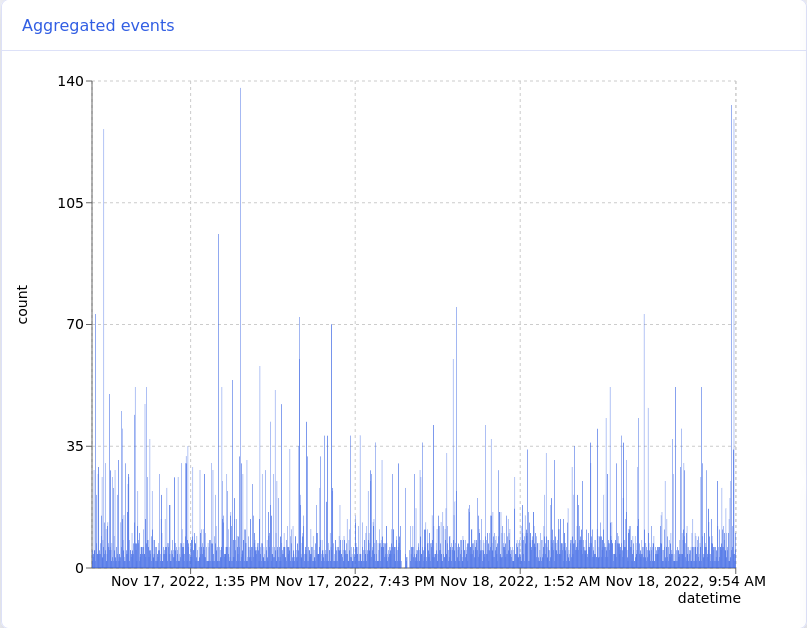

Total count: The total number of logs in the timeframe being shown.

Aggregated by: In the bar chart, each bar represents the count of logs collected within a time interval. Use Aggregated by to choose the time interval. For example, Aggregated by: 30m means that each bar in the bar chart shows the count of all of the logs collected in a 30 minute timeframe. If you change to Aggregated by: hour, then each bar represents one hour of logs. The available options change based on the overall timeframe you are viewing in Discover.

Filtering data¶

Change the timeframe from which logs appear, and filter logs by field.

Tip: Why filter data?

Logs contain a lot of information, more than you need to accomplish most tasks. When you filter data, you choose which information you see. This can help you learn more about your network, identify trends, and even hunt for threats.

Examples:

- You want to see login data from just one user, so you filter the data to show logs containing their username.

- You had a security event on Wednesday night, and you want to learn more about it, so you filter the data to show logs from that time period.

- You notice you don't see any data from one of your network devices. You can filter the data to see all the logs from just that device. Now, you can see when the data stopped coming, and what the last event was that might have caused the problem.

Changing the timeframe¶

You can view logs from a specified timeframe. Set the timeframe by choosing start and end points using this tool:

Remember: Once you change the timeframe, press the blue refresh button to update your page.

Using the time setting tool¶

Setting a relative start/end point¶

To set the start or end point to a time relative to now, use the Relative tab.

Quick time settings

Use the quick now- ("now minus") options to set the timeframe to a preset with one click. Selecting one of these options affects both the start and end point. For example, if you choose now-1 week, the start point will be one week ago, and the end point will be "now." Choosing a now- option from the end point does the same thing as choosing a now- option from the start point. (You can't use the now- options to set the end point to anything besides "now.")

Drop-down options

To set a relative time (such as 15 minutes ago) for the start or end point, use the relative time options below the quick setting options. Select your unit of time from the drop-down list, and type or click to your desired number.

To confirm your choice, click Set relative time, and view the logs by clicking on the refresh button.

Example shown: This selection will show logs collected starting from one day ago until now.

Setting an exact start/end point¶

To choose the exact day and time for the start or end point, use the Absolute tab and select a date and time on the calendar.

To confirm your choice, click Set date.

Example shown: This selection will show logs collected starting from June 7, 2023 at 6:00 until now.

Auto refresh¶

To update the view automatically at a set time interval, choose a refresh rate:

Refresh¶

To reload the view with your changes, click the blue refresh button.

Note: Don't choose "Now" as your start point. Since the program can't show data newer than "now," it's not valid, so you'll see an error message.

Using the time selector¶

To select a more specific time period within the current timeframe, click and drag on the graph.

Filtering by field¶

In Discover, you can filter data by any field in multiple ways.

Using the field list¶

Use the search bar to find the field you want, or scroll through the list.

Isolating fields¶

To choose which fields you see in the log list, click the + symbol next to the field name. You can select multiple fields.

Seeing all occuring values in one field¶

To see a percentage breakdown of all the values from one field, click the magnifying glass next to the field name (the magnifying glass appears when you hover over the field name).

Tip: What does this mean?

This list of values from the field http.response.status_code compares how often users are getting certain http response codes. 51.4% of the time, users are getting a 404 code, meaning the webpage wasn't found. 43.3% of the time, users are getting a 200 code, which means that the request succeeded. The high percentage of "not found" response codes could inform a website administrator that one or more of their frequently clicked links are broken.

Viewing and filtering log details¶

To view the details of individual logs as a table or in JSON, click the arrow next to the timestamp. You can apply filters using the field names in the table view.

Filtering from the expanded table view¶

You can use controls in the table view to filter logs:

Filter for logs that contain the same value in the selected field (update_item in action in the example)

Filter for logs that do NOT contain the same value in the selected field (update_item in action in the example)

Show a percentage breakdown of values in this field (the same function as the magnifying glass in the fields list on the left)

Add to list of displayed fields for all visible logs (the same function as in the fields list on the left)

Query bar¶

You can filter for field (not time) using the query bar. The query bar tells you which query language to use. The query language depends on your data source. Use Lucene Query Syntax for data stored using ElasticSearch.

After you type your query, set the timeframe and click the refresh button. Your filters will be applied to the visible incoming logs.

Investigating IP addresses¶

You can investigate IP addresses using external analysis tools. You might want to do this, for example, if you see multiple suspicious logins from one IP address.

Using external IP analysis tools

1. Click on the IP address you want to analyze.

2. Click on the tool you want to use. You'll be taken to the tool's website, where you can see the results of the IP address analysis.

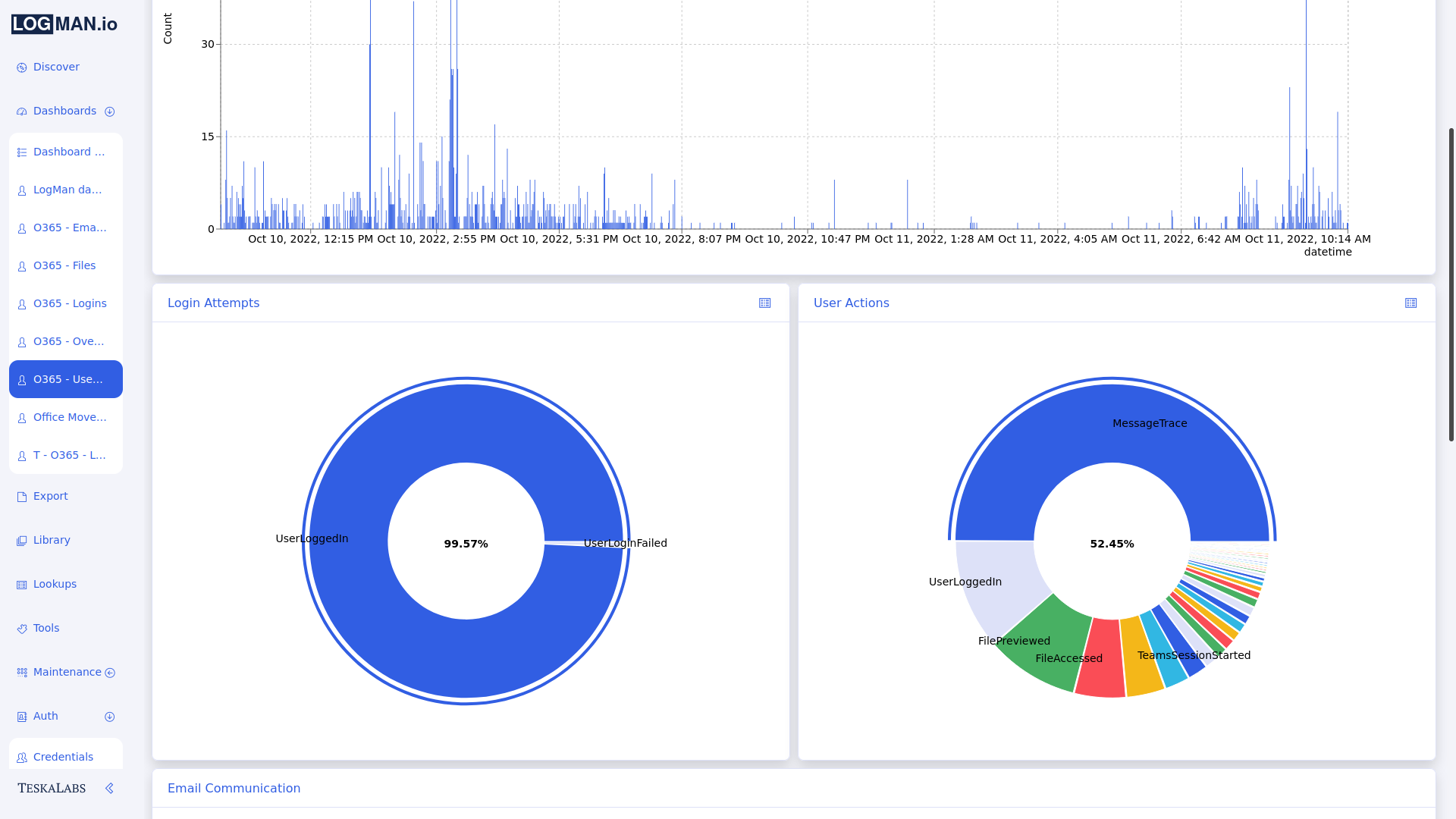

Using Dashboards¶

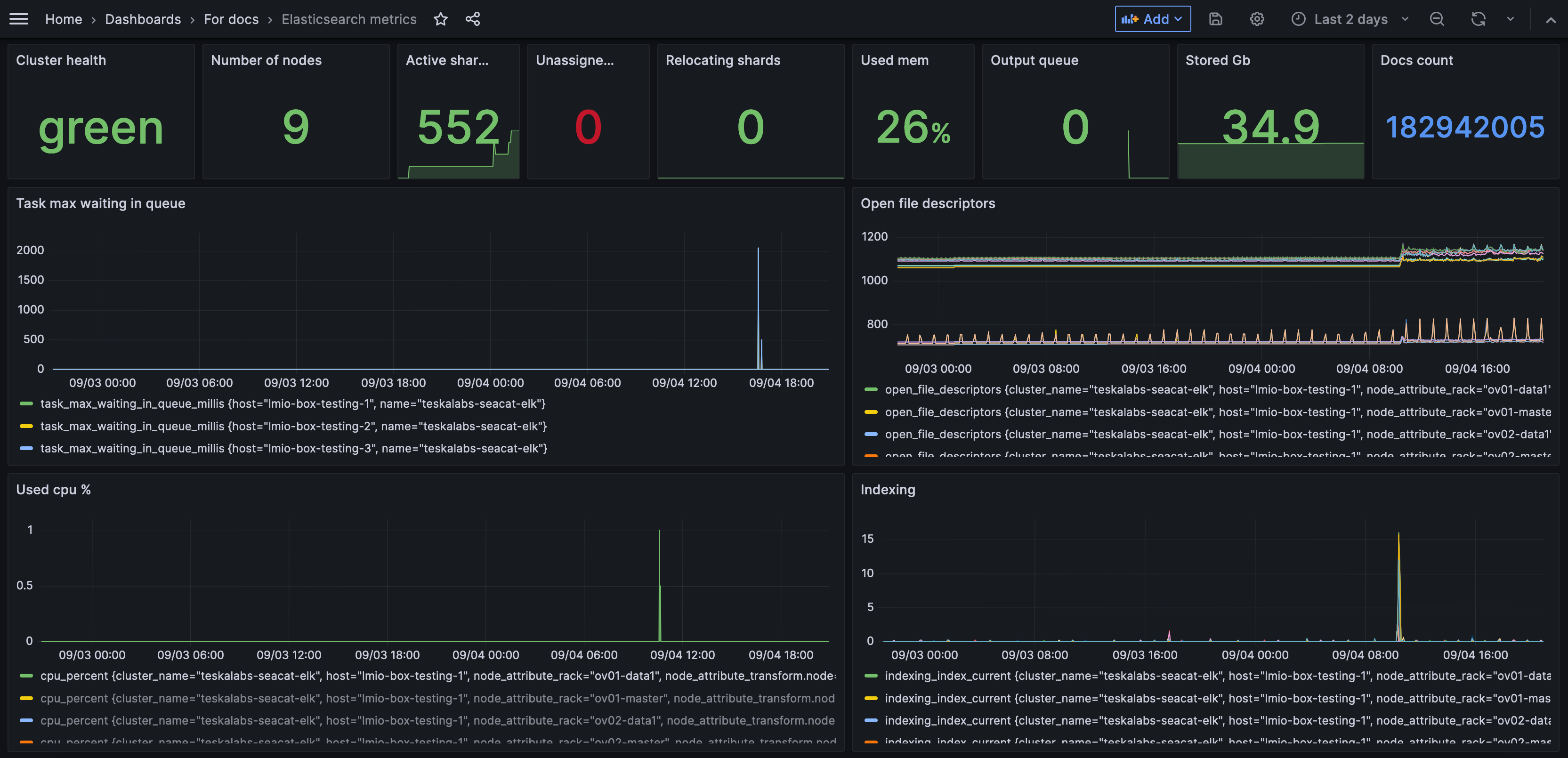

A dashboard is a set of charts and graphs that represent data from your system. Dashboards allow you to quickly get a sense for what's going on in your network.

Your administrator sets up dashboards based on the data sources and fields that are most useful to you. For example, you might have a dashboard that shows graphs related only to email activity, or only to login attempts. You might have many dashboards for different purposes.

You can filter the data to change which data the dashboard shows within its preset constraints.

How can dashboards help me?

By having certain data arranged into a chart, table, or graph, you can get a visual overview of activity within your system and identify trends.

In this example, you can see that a high volume of emails were sent and received on June 19th.

Navigating Dashboards¶

Opening a dashboard¶

To open a dashboard, click on its name.

Dashboard controls¶

Setting the timeframe¶

You can change the timeframe the dashboard represents. Find the time-setting guide here. To refresh the dashboard with your new timeframe, click on the refresh button.

Note: There is no auto-refresh rate in Dashboards.

Filtering dashboard data¶

To filter the data the dashboard shows, use the query bar. The query language you need to use depends on your data source. The query bar tells you which query language to use. Use Lucene Query Syntax for data stored using ElasticSearch.

Moving widgets¶

You can reposition and resize each widget. To move widgets, click on the dashboard menu button and select Edit.

To move a widget, click anywere on the widget and drag. To resize a widget, click on the widget's bottom right corner and drag.

To save your changes, click the green save button. To cancel the changes, click the red cancel button.

Printing dashboards¶

To print a dashboard, click on the dashboard menu button and select Print. Your browser opens a window, and you can choose your print settings there.

Reports¶

Reports are printer-friendly visual representations of your data, like printable dashboards. Your administrator chooses what information goes into your reports based on your needs.

Find and print a report¶

- Select the report from your list, or use the search bar to find your report.

- Click Print. Your browser opens a print window where you can choose your print settings.

Using Export¶

Turn sets of logs into downloadable, sendable files in Export. You can keep these files on your computer, inspect them in another program, or send them via email.

What is an export?

An export is not a file, but a process that creates a file. The export contains and follows your instructions for which data to put in the file, what type of file to create, and what to do with the file. When you run the export, you create the file.

Why would I export logs?

Being able to see a group of logs in one file can help you inspect the data more closely. A few reasons you might want to export logs are:

- To investigate an event or attack

- To send data to an analyst

- To explore the data in a program outside TeskaLabs LogMan.io

Navigating Export¶

List of exports

The List of exports shows you all the exports that have been run.

From the list page, you can:

- See an export's details by clicking on the export's name

- Download the export by clicking on the cloud beside its name

- Delete the export by clicking on the trash can beside its name

- Search for exports using the search bar

Export status is color-coded:

- Green: Completed

- Yellow: In progress

- Blue: Scheduled

- Red: Failed

Jump to:¶

Run an export¶

Running an export adds the export to your List of exports, but it does not automatically download the export. See Download an export for instructions.

Run an export based on a preset¶

1. Click New on the List of exports page. Now you can see the preset exports:

2. To run a preset export, click the run button beside the export name.

OR

2. To edit the export before running, click on the edit button beside the export name. Make your changes, and then click Start. (Use this guide to learn about making changes.)

Once you run the export, you are automatically brought back to the list of exports, and your export appears at the top of the list.

Note

Export presets are created by administrators.

Run an export based on an export you've run before¶

You can re-run an export. Running an export again does not overwrite the original export.

1. On the List of exports page, click on the name of the export you want to run again.

2. Click Restart.

3. You can make changes here (see this guide) or run as-is.

4. Click Start.

Once you run the export, you are automatically brought back to the list of exports, and your new export appears at the top of the list.

Create a new export¶

Create an export from a blank form¶

1. In List of exports, click New, then click Custom.

2. Fill in the fields.

Note

The options in the drop down menus might change based on the selections you make.

Name

Name the export.

Data Source

Select your data source from the drop-down list.

Output

Choose the file type for your data. It can be:

- Raw: If you want to download the export and import the logs into different software, choose raw. If the data source is Elasticsearch, the raw file format is .json.

- .csv: Comma-separated values

- .xlsx: Microsoft Excel format

Compression

Choose to zip your export file, or leave it uncompressed. A zipped file is compressed, and therefore smaller, so it's easier to send, and it takes up less space in your computer.

Target

Choose the target for your file. It can be:

- Download: A file you can download to your computer.

- Email: Fill in the email fields. When you run the export, the email sends. You can still download the data file any time in the List of exports.

- Jupyter: Saves the file in the Jupyter notebook, which you can access through the Tools page. You need to have administrator permissions to access the Jupyter notebook, so only choose Jupyter as the target if you're an administrator.

Separator

If you select .csv as your output, choose what character will mark the separation between each value in each log. Even though CSV means comma-separated values, you can choose to use a different separator, such as a semicolon or space.

Schedule (optional)¶

To schedule the export, rather than running it immediately, click Add schedule.

-

Schedule once:

- To run the export one time at a future time, type the desired date and time in

YYYY-MM-DD HH:mmformat, for example2023-12-31 23:59(December 31st, 2023, at 23:59).

- To run the export one time at a future time, type the desired date and time in

-

Schedule a recurring export:

-

To set up the export to run automatically on a regular schedule, use

cronsyntax. You can learn more aboutcronfrom Wikipedia, and use this tool and these examples by Cronitor to help you writecronexpressions. -

The Schedule field also supports random

Rusage and Vixie cron-style@keyword expressions.

-

Query

Type a query to filter for certain data. The query determines which data to export, including the timeframe of the logs.

Warning

You must include a query in every export. If you run an export without a query, all of the data stored in your program will be exported with no filter for time or content. This could create an extremely large file and put strain on data storage components, and the file likely won't be useful to you or to analysts.

If you accidentally run an export without a query, you can delete the export while it's still running in the List of exports by clicking on the trash can button.

TeskaLabs LogMan.io uses the Elasticsearch Query DSL (Domain Specific Language).

Here's the full guide to the Elasticsearch Query DSL.

Example of a query:

{

"bool": {

"filter": [

{

"range": {

"@timestamp": {

"gte": "now-1d/d",

"lt": "now/d"

}

}

},

{

"prefix": {

"event.dataset": {

"value": "microsoft-office-365"

}

}

}

]

}

}

Query breakdown:

bool: This tells us that the whole query is a Boolean query, which combines mutliple conditions such as "must," "should," and "must not" Here, it's using filter to find characteristics the data must have to make it into the export. filter can have mutliple conditions.

range is the first filter condition. Since it refers to the field below it, which is @timestamp, it will filter for logs based on a range of values in the timestamp field.

@timestamp tells us that the query is filtering for time, so it will export logs from a certain timeframe.

gte: This means "greater than or equal to," which is set to the value now-1d/d, meaning the earliest timestamp (the first log) will be from exactly one day ago at the moment you run the export.

lt means "less than," and it is set to now/d, so the latest timestamp (the last log) will be the newest at the moment you run the export ("now").

prefix is the second filter condition. It looks for logs where the value of a field, in this case event.dataset, starts with microsoft-office-365.

So, what does this query mean?

This export will show all logs from Microsoft Office 365 from the last 24 hours.

3. Add columns

For .csv and .xlsx files, you need to specify what columns you want to have in your document. Each column represents a data field. If you don't specify any columns, the resulting table will have all possible columns, so the table might be much bigger than you expect or need it to be.

You can see the list of all available data fields in Discover. To find which fields are relevant to the logs you're exporting, inspect an individual log in Discover.

- To add a column, click Add. Type the name of the column.

- To delete a column, click -.

- To reorder the columns, click and drag the arrows.

Warning

Pressing enter after typing a column name will run the export.

This example was downloaded from the export shown above as a .csv file, then separated into columns using the Microsoft Excel Convert Text to Columns Wizard. You can see that the columns here match the columns specified in the export.

4. Run the export by pressing Start.

Once you run the export, you are automatically brought back to the list of exports, and your export appears at the top of the list.

Download an export¶

1. On the List of exports page, click on the cloud button to download.

OR

1. On the List of exports page, click on the export's name.

2. Click Download.

Your browser should automatcially start a download.

Delete an export¶

1. On the List of exports page, click on the trash can button.

OR

1. On the List of exports page, click on the export's name.

2. Click Remove.

The export should disappear from your list.

Add an export to your library¶

Note

This feature is only available to administrators.

If you like an export you've created or edited, you can save it to your library as a preset for future use.

1. On the List of exports page, click on the export's name.

2. Click Save to Library.

When you click on New from the List of exports page, your new export preset should be in the list.

All features

Home page¶

The Home page gives you an overview of your data sources and critical incoming events.

Viewing options¶

Chart and list view¶

To switch between chart and list view, click the list button.

Getting more details¶

Clicking on any portion of a chart takes you to Discover, where you then see the list of logs that make up this portion of the chart. From there, you can examine and filter these logs.

You can see here that Discover is automatically filtering for events from the selected dataset (from the chart on the Home page), event.dataset:devolutions.

Discover¶

Discover gives you an overview of all logs being collected in real time. Here, you can filter the data by time and field.

Navigating Discover¶

Terms¶

Total count: The total number of logs in the timeframe being shown.

Aggregated by: In the bar chart, each bar represents the count of logs collected within a time interval. Use Aggregated by to choose the time interval. For example, Aggregated by: 30m means that each bar in the bar chart shows the count of all of the logs collected in a 30 minute timeframe. If you change to Aggregated by: hour, then each bar represents one hour of logs. The available options change based on the overall timeframe you are viewing in Discover.

Filtering data¶

Change the timeframe from which logs appear, and filter logs by field.

Tip: Why filter data?

Logs contain a lot of information, more than you need to accomplish most tasks. When you filter data, you choose which information you see. This can help you learn more about your network, identify trends, and even hunt for threats.

Examples:

- You want to see login data from just one user, so you filter the data to show logs containing their username.

- You had a security event on Wednesday night, and you want to learn more about it, so you filter the data to show logs from that time period.

- You notice you don't see any data from one of your network devices. You can filter the data to see all the logs from just that device. Now, you can see when the data stopped coming, and what the last event was that might have caused the problem.

Changing the timeframe¶

You can view logs from a specified timeframe. Set the timeframe by choosing start and end points using this tool:

Remember: Once you change the timeframe, press the blue refresh button to update your page.

Using the time setting tool¶

Setting a relative start/end point¶

To set the start or end point to a time relative to now, use the Relative tab.

Quick time settings

Use the quick now- ("now minus") options to set the timeframe to a preset with one click. Selecting one of these options affects both the start and end point. For example, if you choose now-1 week, the start point will be one week ago, and the end point will be "now." Choosing a now- option from the end point does the same thing as choosing a now- option from the start point. (You can't use the now- options to set the end point to anything besides "now.")

Drop-down options

To set a relative time (such as 15 minutes ago) for the start or end point, use the relative time options below the quick setting options. Select your unit of time from the drop-down list, and type or click to your desired number.

To confirm your choice, click Set relative time, and view the logs by clicking on the refresh button.

Example shown: This selection will show logs collected starting from one day ago until now.

Setting an exact start/end point¶

To choose the exact day and time for the start or end point, use the Absolute tab and select a date and time on the calendar.

To confirm your choice, click Set date.

Example shown: This selection will show logs collected starting from June 7, 2023 at 6:00 until now.

Auto refresh¶

To update the view automatically at a set time interval, choose a refresh rate:

Refresh¶

To reload the view with your changes, click the blue refresh button.

Note: Don't choose "Now" as your start point. Since the program can't show data newer than "now," it's not valid, so you'll see an error message.

Using the time selector¶

To select a more specific time period within the current timeframe, click and drag on the graph.

Filtering by field¶

In Discover, you can filter data by any field in multiple ways.

Using the field list¶

Use the search bar to find the field you want, or scroll through the list.

Isolating fields¶

To choose which fields you see in the log list, click the + symbol next to the field name. You can select multiple fields.

Seeing all occuring values in one field¶

To see a percentage breakdown of all the values from one field, click the magnifying glass next to the field name (the magnifying glass appears when you hover over the field name).

Tip: What does this mean?

This list of values from the field http.response.status_code compares how often users are getting certain http response codes. 51.4% of the time, users are getting a 404 code, meaning the webpage wasn't found. 43.3% of the time, users are getting a 200 code, which means that the request succeeded. The high percentage of "not found" response codes could inform a website administrator that one or more of their frequently clicked links are broken.

Viewing and filtering log details¶

To view the details of individual logs as a table or in JSON, click the arrow next to the timestamp. You can apply filters using the field names in the table view.

Filtering from the expanded table view¶

You can use controls in the table view to filter logs:

Filter for logs that contain the same value in the selected field (update_item in action in the example)

Filter for logs that do NOT contain the same value in the selected field (update_item in action in the example)

Show a percentage breakdown of values in this field (the same function as the magnifying glass in the fields list on the left)

Add to list of displayed fields for all visible logs (the same function as in the fields list on the left)

Query bar¶

You can filter for field (not time) using the query bar. The query bar tells you which query language to use. The query language depends on your data source. Use Lucene Query Syntax for data stored using ElasticSearch.

After you type your query, set the timeframe and click the refresh button. Your filters will be applied to the visible incoming logs.

Investigating IP addresses¶

You can investigate IP addresses using external analysis tools. You might want to do this, for example, if you see multiple suspicious logins from one IP address.

Using external IP analysis tools

1. Click on the IP address you want to analyze.

2. Click on the tool you want to use. You'll be taken to the tool's website, where you can see the results of the IP address analysis.

Dashboards¶

A dashboard is a set of charts and graphs that represent data from your system. Dashboards allow you to quickly get a sense for what's going on in your network.

Your administrator sets up dashboards based on the data sources and fields that are most useful to you. For example, you might have a dashboard that shows graphs related only to email activity, or only to login attempts. You might have many dashboards for different purposes.

You can filter the data to change which data the dashboard shows within its preset constraints.

How can dashboards help me?

By having certain data arranged into a chart, table, or graph, you can get a visual overview of activity within your system and identify trends.

In this example, you can see that a high volume of emails were sent and received on June 19th.

Navigating Dashboards¶

Opening a dashboard¶

To open a dashboard, click on its name.

Dashboard controls¶

Setting the timeframe¶

You can change the timeframe the dashboard represents. Find the time-setting guide here. To refresh the dashboard with your new timeframe, click on the refresh button.

Note: There is no auto-refresh rate in Dashboards.

Filtering dashboard data¶

To filter the data the dashboard shows, use the query bar. The query language you need to use depends on your data source. The query bar tells you which query language to use. Use Lucene Query Syntax for data stored using ElasticSearch.

The example above uses Lucene Query Syntax.

Moving widgets¶

You can reposition and resize each widget. To move widgets, click on the dashboard menu button and select Edit.

To move a widget, click anywere on the widget and drag. To resize a widget, click on the widget's bottom right corner and drag.

To save your changes, click the green save button. To cancel the changes, click the red cancel button.

Printing dashboards¶

To print a dashboard, click on the dashboard menu button and select Print. Your browser opens a window, and you can choose your print settings there.

Reports¶

Reports are printer-friendly visual representations of your data, like printable dashboards. Your administrator chooses what information goes into your reports based on your needs.

Find and print a report¶

- Select the report from your list, or use the search bar to find your report.

- Click Print. Your browser opens a print window where you can choose your print settings.

Export¶

Turn sets of logs into downloadable, sendable files in Export. You can keep these files on your computer, inspect them in another program, or send them via email.

What is an export?

An export is not a file, but a process that creates a file. The export contains and follows your instructions for which data to put in the file, what type of file to create, and what to do with the file. When you run the export, you create the file.

Why would I export logs?

Being able to see a group of logs in one file can help you inspect the data more closely. A few reasons you might want to export logs are:

- To investigate an event or attack

- To send data to an analyst

- To explore the data in a program outside TeskaLabs LogMan.io

Navigating Export¶

List of exports

The List of exports shows you all the exports that have been run.

From the list page, you can:

- See an export's details by clicking on the export's name

- Download the export by clicking on the cloud beside its name

- Delete the export by clicking on the trash can beside its name

- Search for exports using the search bar

Export status is color-coded:

- Green: Completed

- Yellow: In progress

- Blue: Scheduled

- Red: Failed

Jump to:¶

Run an export¶

Running an export adds the export to your List of exports, but it does not automatically download the export. See Download an export for instructions.

Run an export based on a preset¶

1. Click New on the List of exports page. Now you can see the preset exports:

2. To run a preset export, click the run button beside the export name.

OR

2. To edit the export before running, click on the edit button beside the export name. Make your changes, and then click Start. (Use this guide to learn about making changes.)

Once you run the export, you are automatically brought back to the list of exports, and your export appears at the top of the list.

Note

Presets are created by administrators.

Run an export based on an export you've run before¶

You can re-run an export. Running an export again does not overwrite the original export.

1. On the List of exports page, click on the name of the export you want to run again.

2. Click Restart.

3. You can make changes here (see this guide) or run as-is.

4. Click Start.

Once you run the export, you are automatically brought back to the list of exports, and your new export appears at the top of the list.

Create a new export¶

Create an export from a blank form¶

1. In List of exports, click New, then click Custom.

2. Fill in the fields.

Note

The options in the drop down menus might change based on the selections you make.

Name

Name the export.

Data Source

Select your data source from the drop-down list.

Output

Choose the file type for your data. It can be:

- Raw: If you want to download the export and import the logs into different software, choose raw. If the data source is Elasticsearch, the raw file format is .json.

- .csv: Comma-separated values

- .xlsx: Microsoft Excel format

Compression

Choose to zip your export file, or leave it uncompressed. A zipped file is compressed, and therefore smaller, so it's easier to send, and it takes up less space in your computer.

Target

Choose the target for your file. It can be:

- Download: A file you can download to your computer.

- Email: Fill in the email fields. When you run the export, the email sends. You can still download the data file any time in the List of exports.

- Jupyter: Saves the file in the Jupyter notebook, which you can access through the Tools page. You need to have administrator permissions to access the Jupyter notebook, so only choose Jupyter as the target if you're an administrator.

Separator

If you select .csv as your output, choose what character will mark the separation between each value in each log. Even though CSV means comma-separated values, you can choose to use a different separator, such as a semicolon or space.

Schedule (optional)¶

To schedule the export, rather than running it immediately, click Add schedule.

-

Schedule once:

- To run the export one time at a future time, type the desired date and time in

YYYY-MM-DD HH:mmformat, for example2023-12-31 23:59(December 31st, 2023, at 23:59).

- To run the export one time at a future time, type the desired date and time in

-

Schedule a recurring export:

-

To set up the export to run automatically on a regular schedule, use

cronsyntax. You can learn more aboutcronfrom Wikipedia, and use this tool and these examples by Cronitor to help you writecronexpressions. -

The Schedule field also supports random

Rusage and Vixie cron-style@keyword expressions.

-

Query

Type a query to filter for certain data. The query determines which data to export, including the timeframe of the logs.

Warning

You must include a query in every export. If you run an export without a query, all of the data stored in your program will be exported with no filter for time or content. This could create an extremely large file and put strain on data storage components, and the file likely won't be useful to you or to analysts.

If you accidentally run an export without a query, you can delete the export while it's still running in the List of exports by clicking on the trash can button.

TeskaLabs LogMan.io uses the Elasticsearch Query DSL (Domain Specific Language).

Here's the full guide to the Elasticsearch Query DSL.

Example of a query:

{

"bool": {

"filter": [

{

"range": {

"@timestamp": {

"gte": "now-1d/d",

"lt": "now/d"

}

}

},

{

"prefix": {

"event.dataset": {

"value": "microsoft-office-365"

}

}

}

]

}

}

Query breakdown:

bool: This tells us that the whole query is a Boolean query, which combines mutliple conditions such as "must," "should," and "must not" Here, it's using filter to find characteristics the data must have to make it into the export. filter can have mutliple conditions.

range is the first filter condition. Since it refers to the field below it, which is @timestamp, it will filter for logs based on a range of values in the timestamp field.

@timestamp tells us that the query is filtering for time, so it will export logs from a certain timeframe.

gte: This means "greater than or equal to," which is set to the value now-1d/d, meaning the earliest timestamp (the first log) will be from exactly one day ago at the moment you run the export.

lt means "less than," and it is set to now/d, so the latest timestamp (the last log) will be the newest at the moment you run the export ("now").

prefix is the second filter condition. It looks for logs where the value of a field, in this case event.dataset, starts with microsoft-office-365.

So, what does this query mean?

This export will show all logs from Microsoft Office 365 from the last 24 hours.

3. Add columns

For .csv and .xlsx files, you need to specify what columns you want to have in your document. Each column represents a data field. If you don't specify any columns, the resulting table will have all possible columns, so the table might be much bigger than you expect or need it to be.

You can see the list of all available data fields in Discover. To find which fields are relevant to the logs you're exporting, inspect an individual log in Discover.

- To add a column, click Add. Type the name of the column.

- To delete a column, click -.

- To reorder the columns, click and drag the arrows.

Warning

Pressing enter after typing a column name will run the export.

This example was downloaded from the export shown above as a .csv file, then separated into columns using the Microsoft Excel Convert Text to Columns Wizard. You can see that the columns here match the columns specified in the export.

4. Run the export by pressing Start.

Once you run the export, you are automatically brought back to the list of exports, and your export appears at the top of the list.

Download an export¶

1. On the List of exports page, click on the cloud button to download.

OR

1. On the List of exports page, click on the export's name.

2. Click Download.

Your browser should automatcially start a download.

Delete an export¶

1. On the List of exports page, click on the trash can button.

OR

1. On the List of exports page, click on the export's name.

2. Click Remove.

The export should disappear from your list.

Add an export to your library¶

Note

This feature is only available to administrators.

If you like an export you've created or edited, you can save it to your library as a preset for future use.

1. On the List of exports page, click on the export's name.

2. Click Save to Library.

When you click on New from the List of exports page, your new export preset should be in the list.

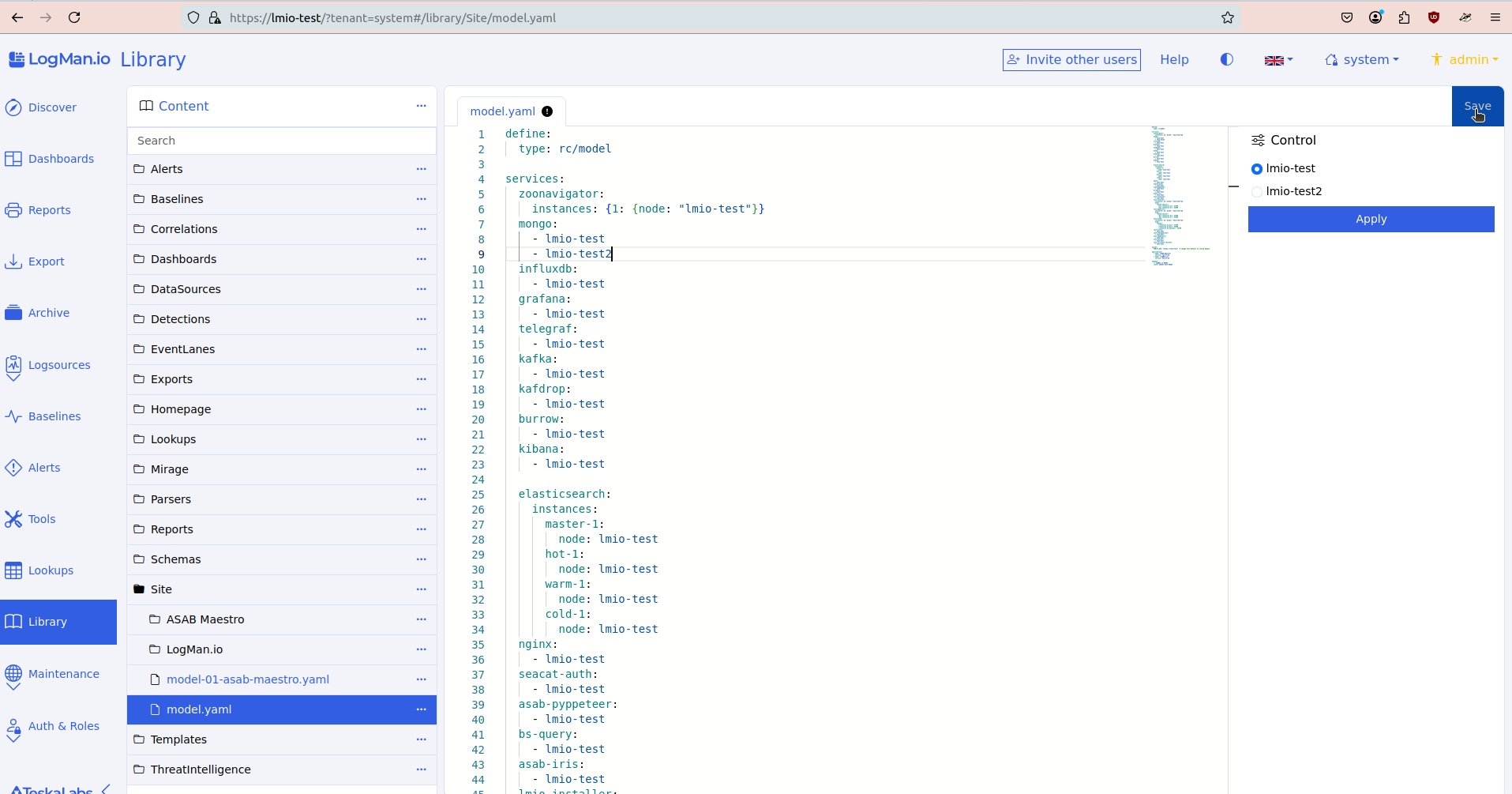

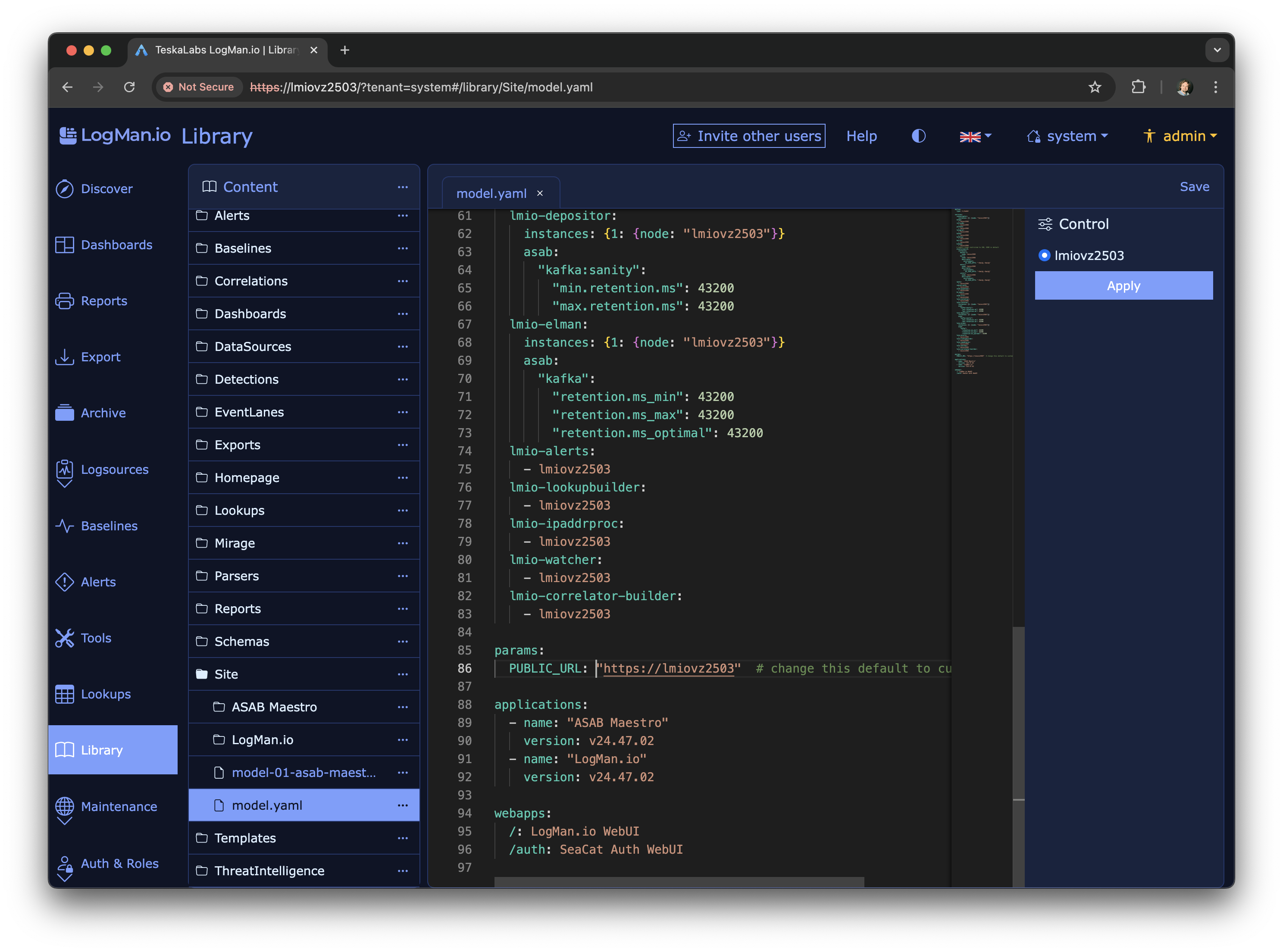

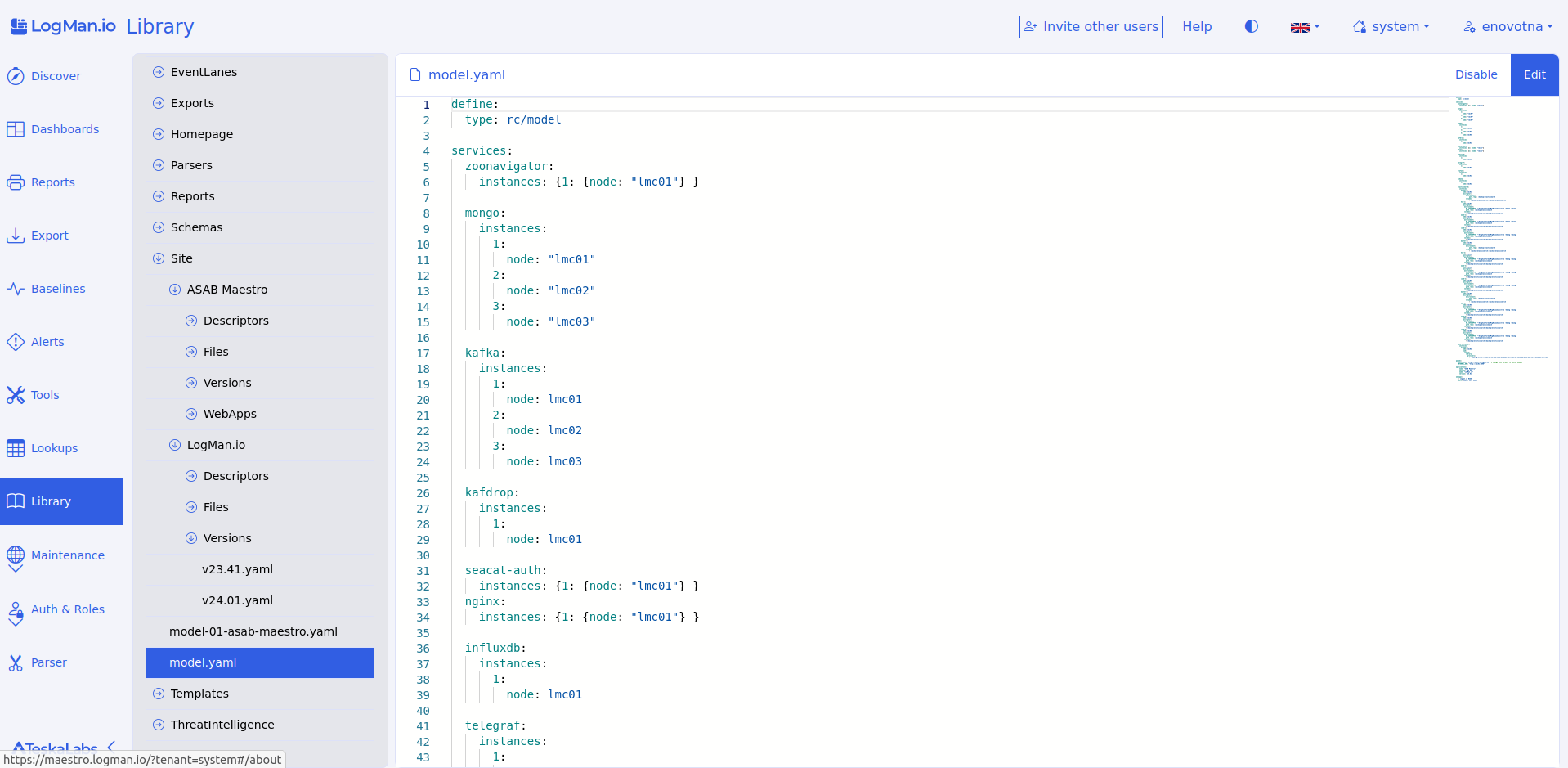

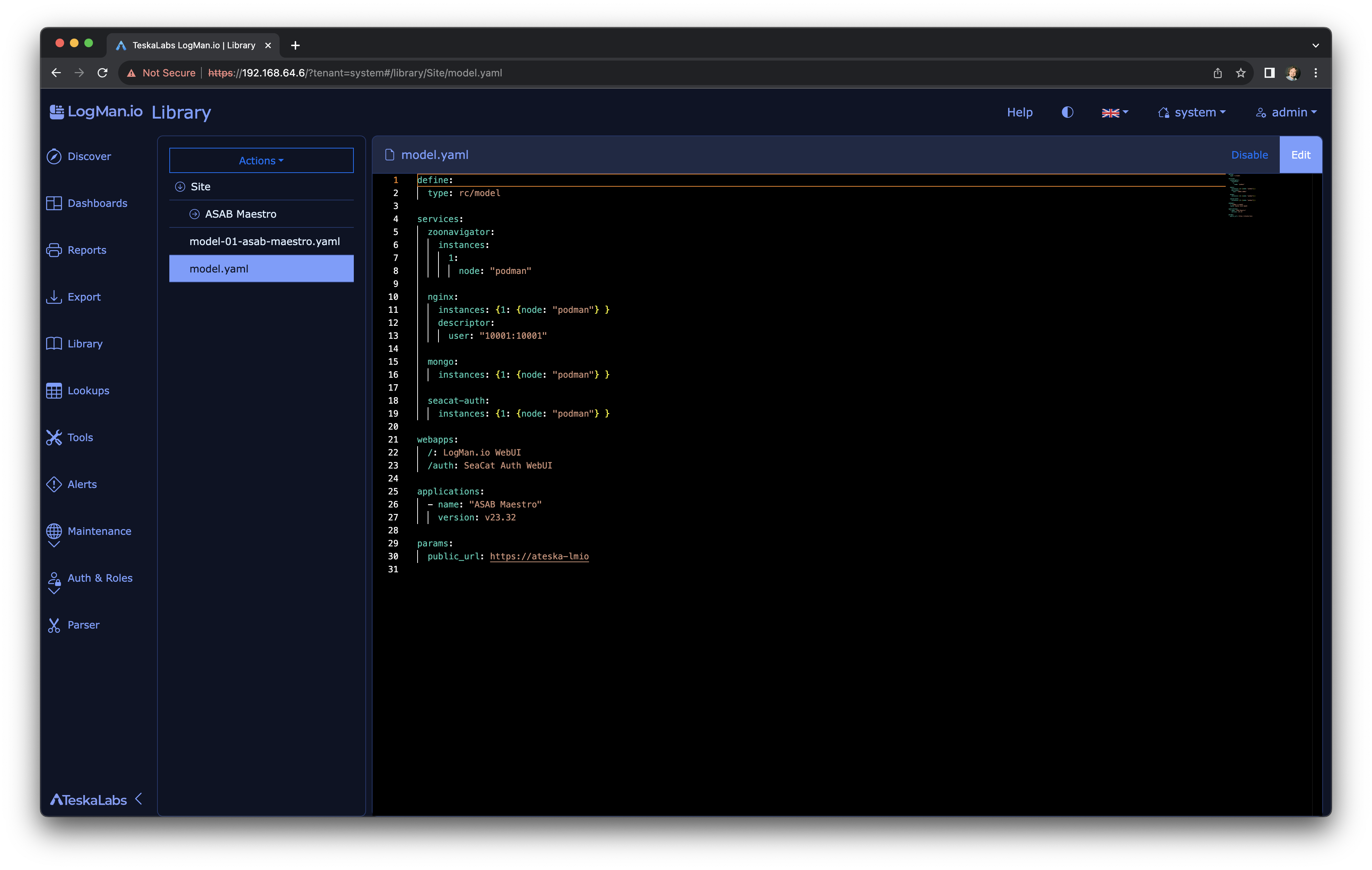

Library¶

Administrator feature

The Library is an administrator feature. The Library has a significant impact on the way TeskaLabs LogMan.io works. Some users don't have access to the Library.

The Library holds items (files) that determine what you see when using TeskaLabs LogMan.io. The items in the Library determine, for example, your homepage, dashboards, reports, exports, and some SIEM functions.

When you recieve TeskaLabs LogMan.io, the Library is already filled with files. You can change these according to your needs.

The Library supports these file types:

- .html

- .json

- .md

- .txt

- .yaml

- .yml

Warning

Changing items in the Library impacts how TeskaLabs LogMan.io and TeskaLabs SIEM work. If you are unsure about making changes in the Library, contact Support.

Navigating the Library¶

Some items have additional options in the upper right corner of the screen:

Locating items¶

To find an item, use the search bar, or click through the folders.

If you navigate to a folder in the Library and want to return to the search bar, click Library again.

Adding items to the Library¶

Warning

Do NOT attempt to add single items to the library with the Restore function. Restore is only for importing a whole library.

Creating items in a folder¶

You can create an item directly in certain folders. If adding an item is possible, you'll see a Create new item in (folder) button when you click on the folder.

- To add an item, click Create new item in (folder).

- Name the item, select the file extension from the dropdown, and click Create.

- If the item doesn't appear immediately, refresh the page, and your item should appear in the library.

Adding an item by duplicating an existing item¶

- Click on the item you want to duplicate.

- Click on the ... button near the top.

- Click Copy.

- Rename the item, choose the file extension from the dropdown, and click Copy.

- If the item doesn't appear immediately, refresh the page, and your item should appear in the library.

Editing an item in the Library¶

- Click on the item you want to edit.

- To edit the item, click Edit.

- To save your changes, click Save, or exit the editor without saving by clicking Cancel.

- If your edits don't display immediately, refresh the page, and your changes should be saved.

Removing an item from the Library¶

- Click on the item you want to remove.

- Click on the ... button near the top.

- Click Remove and confirm Yes if your browser prompts.

- If if the item doesn't disappear immediately, refresh the page, and the removed item should be gone.

Disabling items¶

You can temporarily disable an item. It stays in your library, but its effect on your system is paused.

To disable an item, click on the item and click Disable.

You can re-enable the file any time by clicking Enable.

Note

You can't read the contents of an item while it's disabled.

Backing up the Library¶

You can back up your whole Library onto your computer or other external storage by exporting the Library.

To export and download the contents of the Library, click Actions, then click Backup. Your browswer will start the download.

Restoring the library from backup¶

Warning

Using Restore means importing a whole library from your computer. Restore is intended to restore your library from a backup version, so it will overwrite (delete) the existing contents of your Library in TeskaLabs LogMan.io. ONLY restore the Library if you intend to replace the entire contents of the Library with the files you're importing.

Restoring¶

- Click Actions.

- Click Restore.

- Choose the file from your computer. You can only import tar.gz files.

- Click Import.

Remember, using Restore and Import overwrites your whole library.

Lookups¶

Administrator feature

Lookups are an administrator feature. Some users don't have access to Lookups.

You can use lookups to get and store additional information from external sources. The additional information enhances your data and adds relevant context. This makes your data more valuable because you can analyze the data more deeply. For example, you can store user names, active users, active VPNs, and suspicious IP addresses.

Tip

You can read more about Lookups here in the Reference guide.

Navigating Lookups¶

Creating a new lookup¶

To create a new lookup:

- Click Create lookup.

- Fill in the fields: Name, Short description, Detail description, and Key(s).

- To add another key, click on the +.

- Choose to add or not add an expiration.

- Click Save.

Finding a lookup¶

Use the search bar to find a specific lookup. Using the search bar does not search the contents of the lookups, only the lookup names. To view all the lookups again after using the search bar, clear the search bar and press Enter or Return.

Viewing and editing a lookup's details¶

Viewing a lookup's keys/items¶

To see a lookup's keys and values, or items, click on the ... button, and click Items.

Editing a lookup's keys/items¶

From the List of lookups, click on the ... button and click Items. This takes you to the individual lookup's page.

Adding: To add an item, click Add item.

Editing: To edit an existing item, click the ... button on the item line, and click Edit.

Deleting: To delete the item, click the ... button on the item line, and click Delete.

Remember to click Save after making changes.

Viewing a lookup's description¶

To see the detailed description of a lookup, click on the ... button on the List of lookups page, and click Info.

Editing a lookup's description¶

- Click on the ... button on the List of lookups page, and click Info. This takes you to the lookup's info page.

- Click Edit lookup at the bottom.

- After making changes, click Save, or click Cancel to exit editing mode.

Deleting a lookup¶

To delete a lookup:

-

Click on the ... button on the List of lookups page, and click Info. This takes you to the lookup's info page.

-

Click Delete lookup.

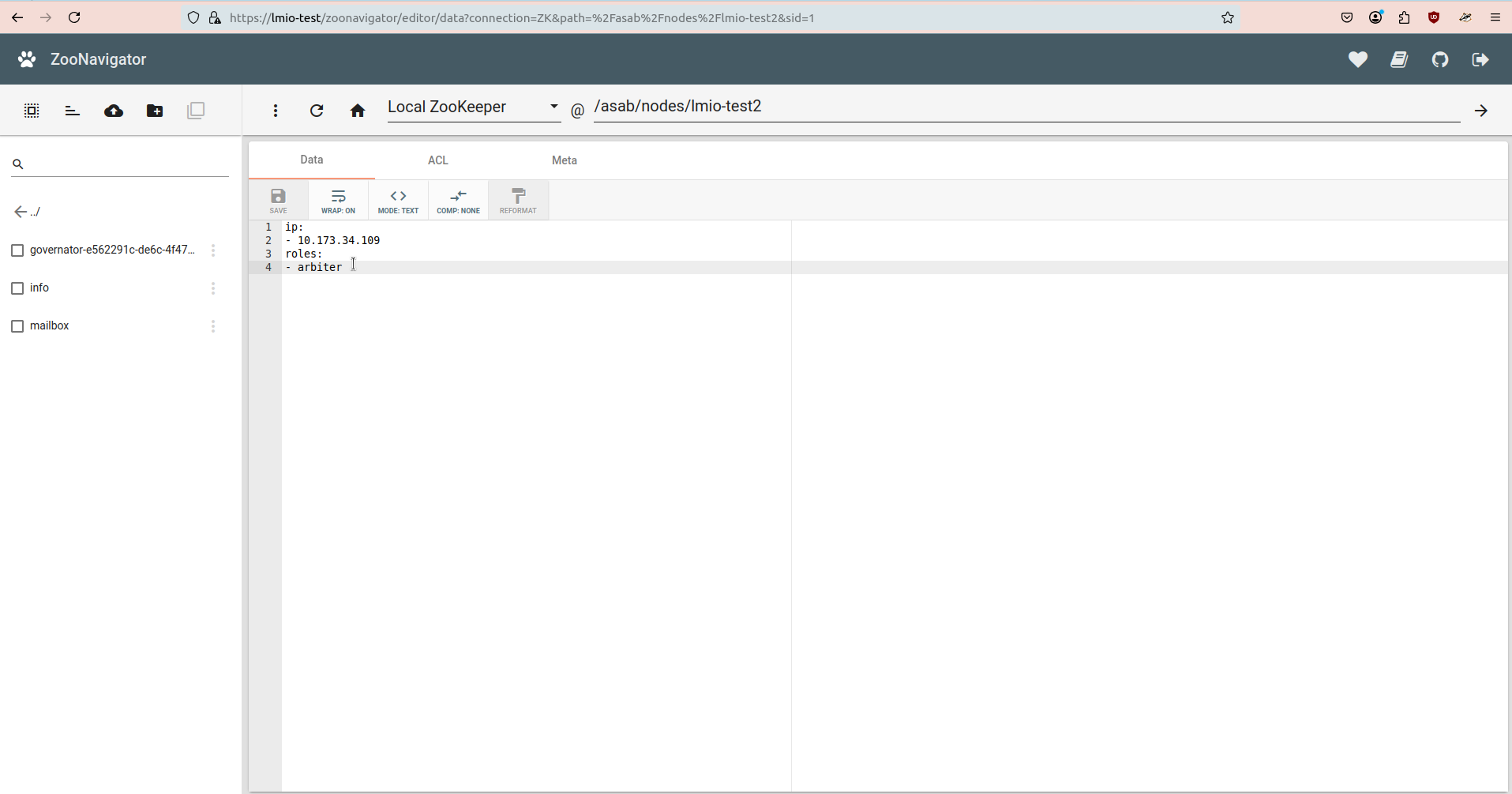

Tools¶

Administrator feature

Tools are an administrator feature. Changes you make when visiting external tools can have a significant impact on the way TeskaLabs LogMan.io works. Some users don't have access to the Tools page.

The Tools page gives you quick access to external programs that interact with or can be used alongside TeskaLabs LogMan.io.

Using external tools¶

To automatically log in securely to a tool, click on the tool's icon.

Warnings

- While tenants' data is separated in the TeskaLabs LogMan.io UI, tenants' data is not separated within these tools.

- Changes you make in Zookeeper, Kafka, and Kibana could damage your deployment of TeskaLabs LogMan.io.

Maintenance¶

Administrator feature

Maintenance is an administrator feature. What you do in Maintenance has a significant impact on the way TeskaLabs LogMan.io works. Some users don't have access to Maintenance.

The Maintenance section includes Configuration and Services.

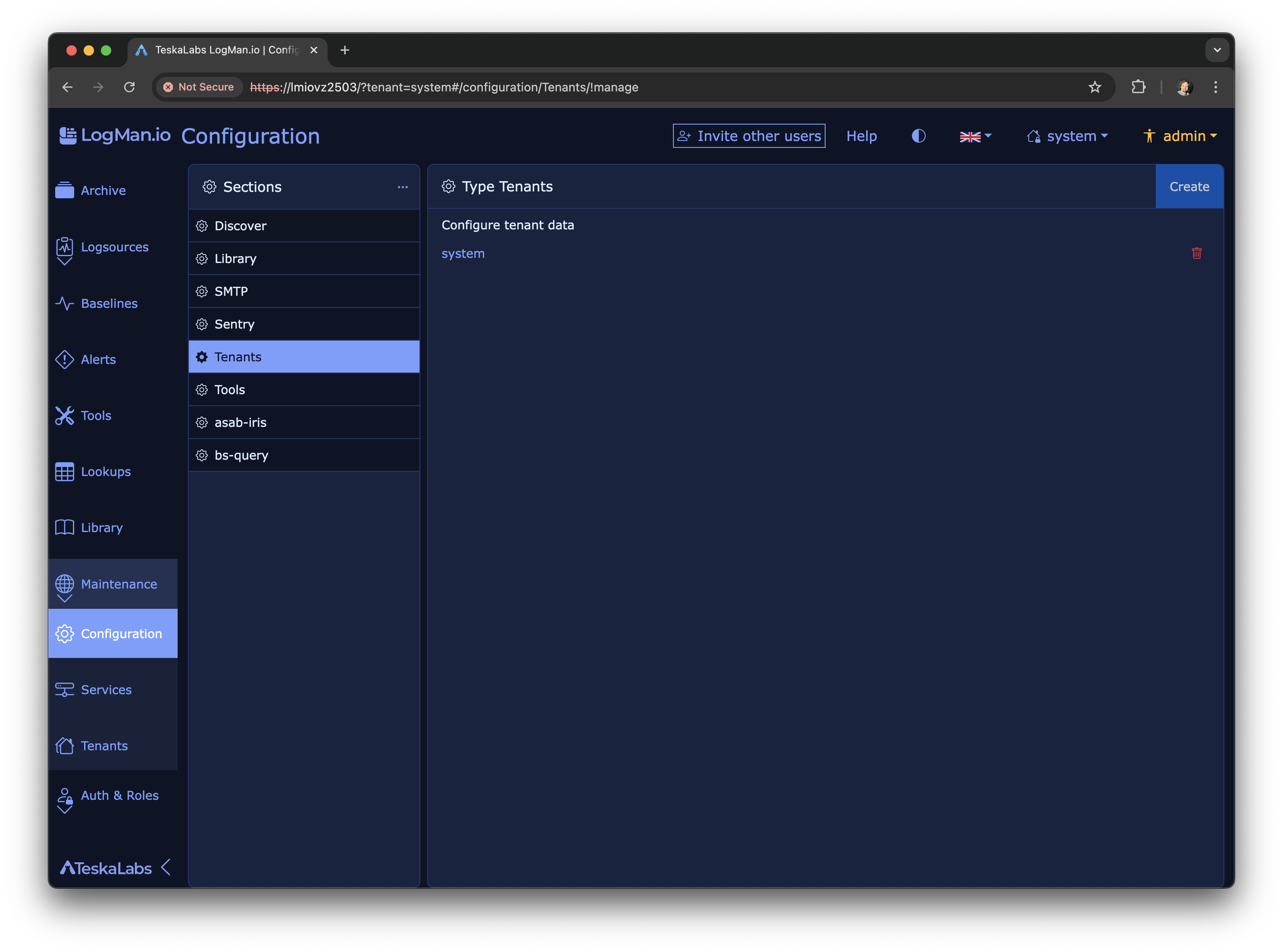

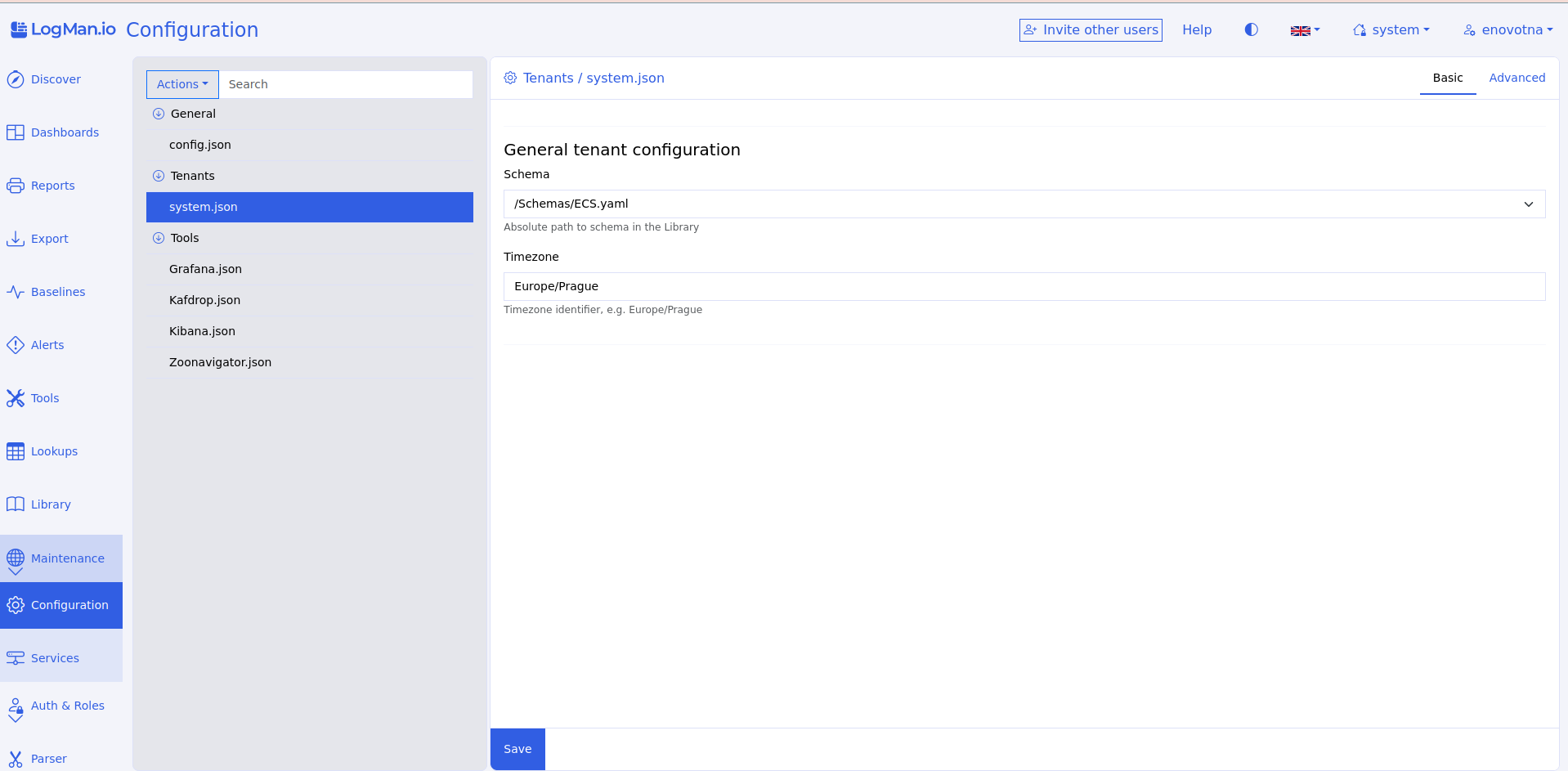

Configuration¶

Configuration holds JSON files that determine some of the components you can see and use in TeskaLabs LogMan.io. For example, Configuration includes:

- The Discover page

- The sidebar

- Tenants

- The Tools page

Warning

Configuration files have a significant impact on the way TeskaLabs LogMan.io works. If you need help with your UI configuration, contact Support.

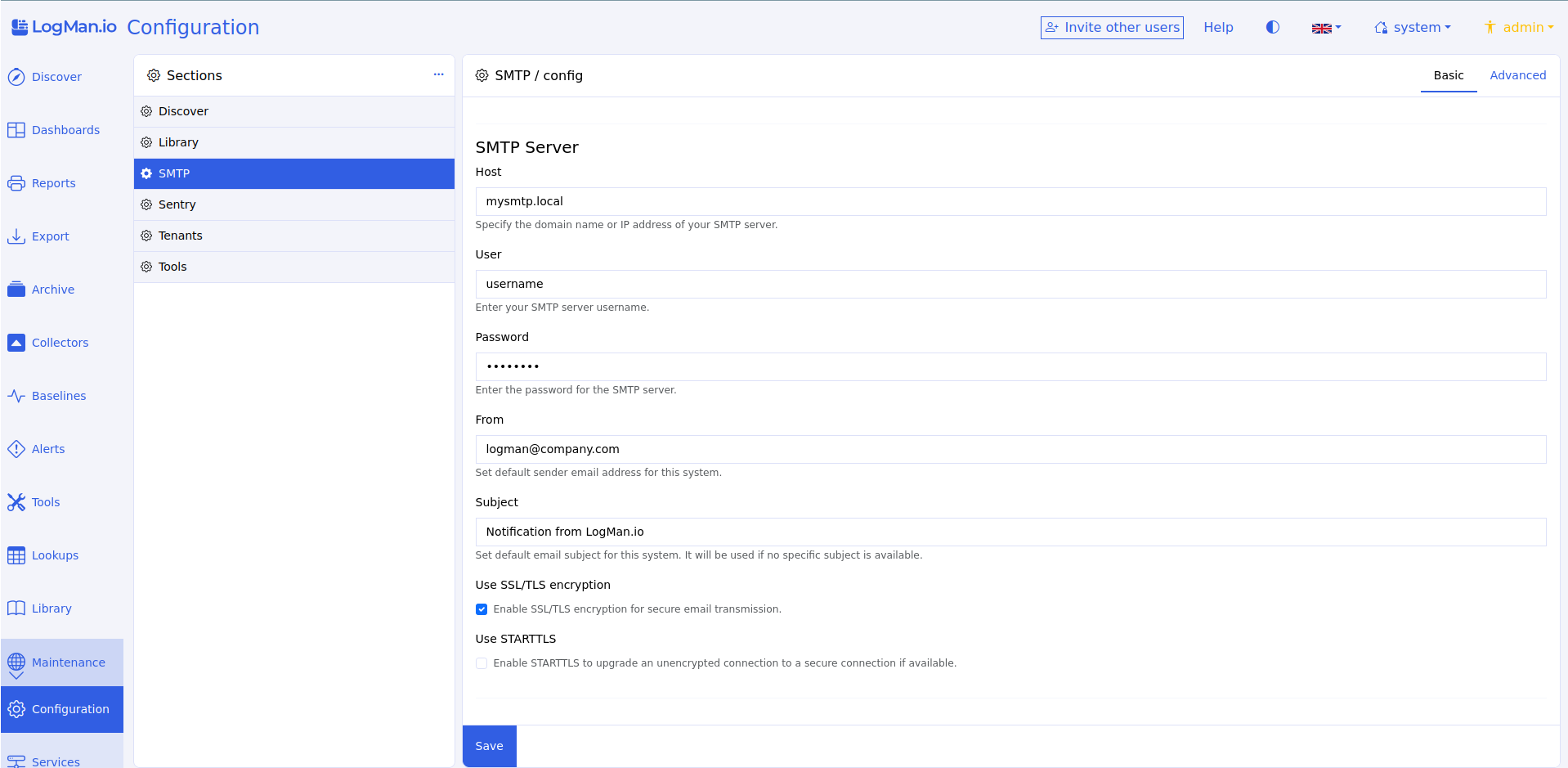

Basic and Advanced modes¶

You can switch between Basic and Advanced mode for configuration files.

Basic has fillable fields. Advanced shows the file in JSON. To choose a mode, click Basic or Advanced in the upper right corner.

Editing a configuration file¶

To edit a configuration file, click on the file name, choose your preferred mode, and make the changes. The file is always editable - you don't have to click anything to begin editing. Remember to click Save when you're finished.

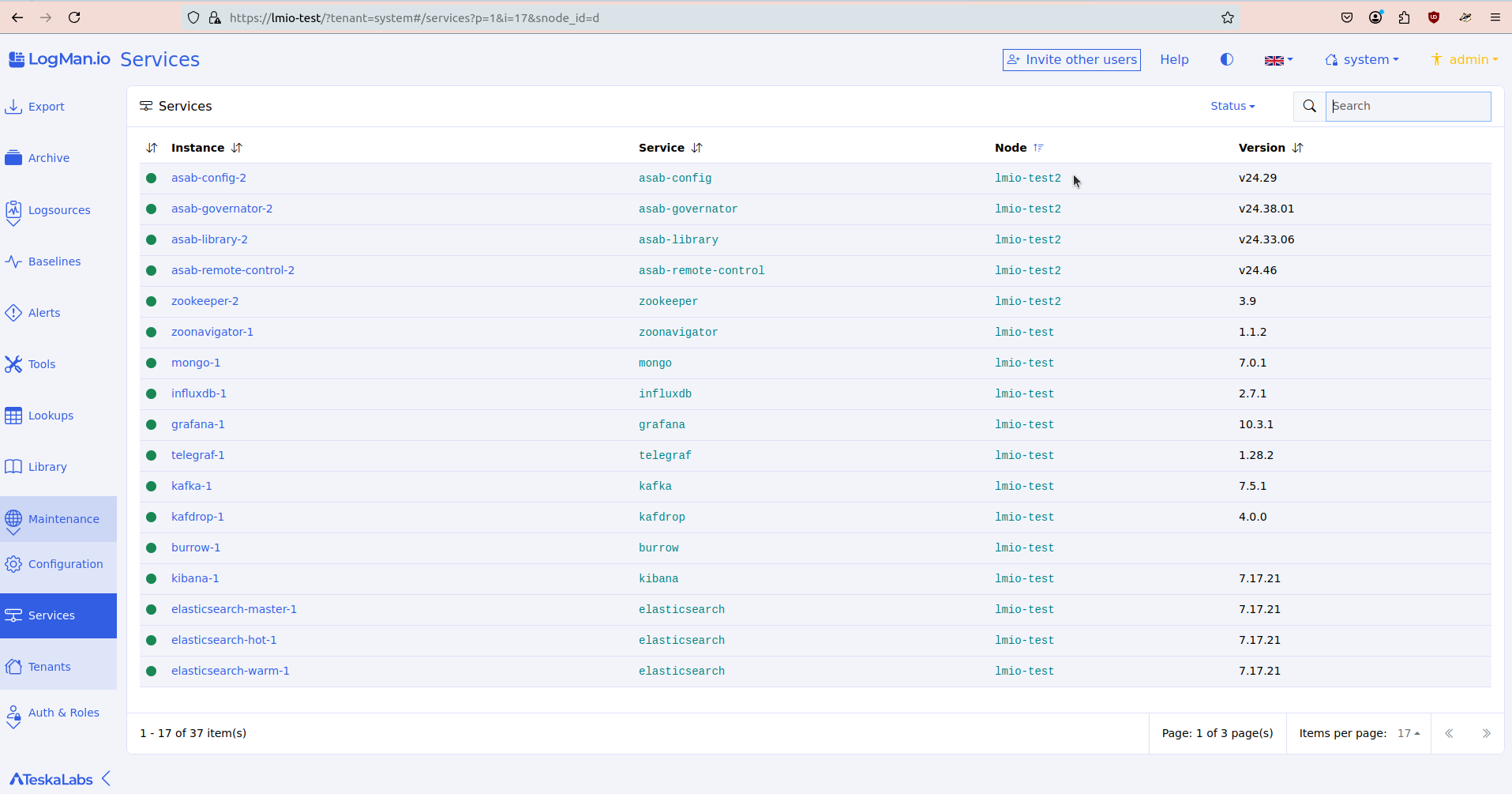

Services¶

Services shows you all of the services and microservices ("mini programs") that make up the infrastructure of TeskaLabs LogMan.io.

Warning

Since TeskaLabs LogMan.io is made of microservices, interfering with the microservices could have a significant impact on the performance of the program. If you need help with microservices, contact Support.

Viewing service details¶

To view a service's details, click the arrow to the left of the service name.

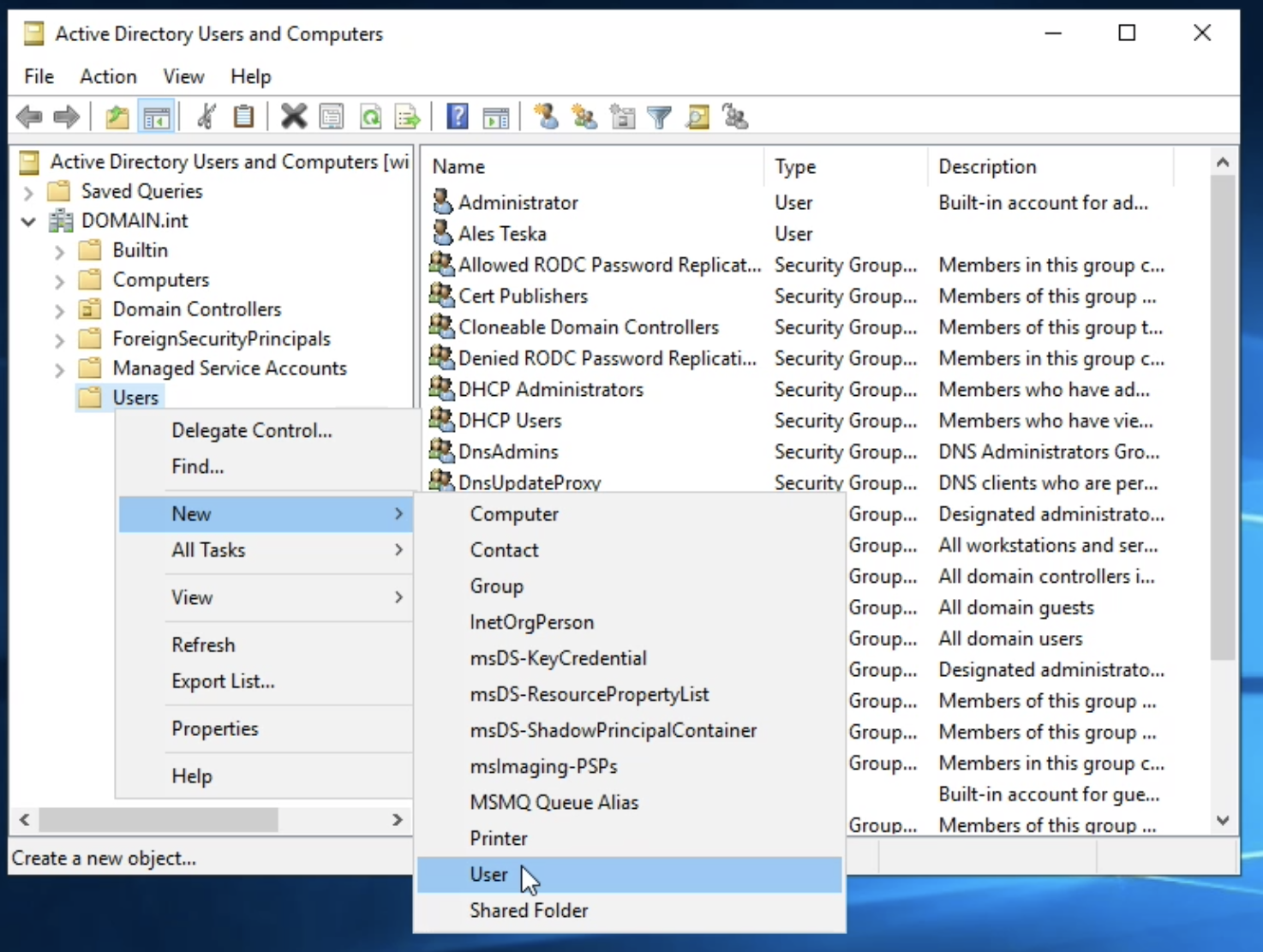

Auth: Controlling user access¶

Administrator feature

Auth is an administrator feature. It has a significant impact on the people using TeskaLabs LogMan.io. Some users don't have access to the Auth pages.

The Auth (authorization) section includes all the controls administrators need to manage users and tenants.

Credentials¶

Credentials are users. From the Credentials screen, you can see:

- Name: The username that someone uses to log in

- Tenants: The tenants this user has access to

- Roles: The set of permissions this user has (see Roles)

Creating new credentials¶

1. To create a new user, click Create new credentials.

2. In the Create tab, enter a username. If you want to send the person an email inviting them to reset their password, enter their email address and check Send instructions to set password.

3. Click Create credentials.

The new credentials appear in the Credentials list. If you checked Send instructions to set password, the new user should recieve an email.

Editing credentials¶

To edit a credential, click on a username, and click Edit in the section you want to change. Remember to click Save to save your changes, or click Cancel to exit the editor.

Tenants¶

A tenant is one entity collecting data from a group of sources. Each tenant has an isolated space to collect and manage its data. (Every tenant's data is completely separated from all other tenants' data in the UI.) One deployment of TeskaLabs LogMan.io can handle many tenants (mutlitenancy).

As a user, your company might be just one tenant, or you might have different tenants for different departments. If you're a distributor, each of your clients has at least one tenant.

One tenant can be accessible by multiple users, and users can have access to multiple tenants. You can control which users can access which tenants by assigning credentials to tentants or vice-versa.

Resources¶

Resources are the most basic unit of authorization. They are single and specific access permissions.

Examples:

- Being able to access dashboards from a certain data source

- Being able to delete tenants

- Being able to make changes in the Library

Roles¶

A role is a container for resources. You can create a role to include any combination of resources, so a role is a set of permissions.

Clients¶

Clients are additonal applications that are accessing TeskaLabs LogMan.io to support its functioning.

Warning

Removing a client could interrupt essential program functions.

Sessions¶

Sessions are active login periods currently running.

Ways to end a session:

- Click on the red X on the session's line on the Sessions page.

- Click on the session's name, then click Terminate session

- To terminate all sessions (logging all users out), click Terminate all on the Sessions page.

Tip

The Auth module uses TeskaLabs SeaCat Auth. To learn more, you can read its documentation or take a look at its repository on GitHub.

Data tables¶

Several LogMan.io features use data tables to display lists. We are working continuously to extend the capabilities of data tables across the product.

Feature pages that use data tables include Exports, Baselines, Alerts, Lookups, Services maintenance, Collectors, and Auth modules such as Credentials, Tenants, etc.

Sorting¶

Sorting by a single column¶

You can sort data by any column with a sorting icon beside the column name.

To sort alphanumerically by a column, click the two-arrow icon beside the column name.

An arrow pointing down indicates sorting in alphanumeric order, descending.

An arrow pointing up indicates that the data is sorted in alphanumeric order, ascending.

Sorting by multiple columns¶

To sort alphanumerically by multiple columns, hold shift while you click on the sort icons.

Items per page¶

To change the number of items displayed per page:

- Click the number beside Items per page near the bottom of the screen.

- Select the number. The page automatically reloads.

Alternatively, you can customize the number of items per page using the URL.

The URL includes i= followed by a number.

To change the number of itmes per page, change that number, and press enter or return.

For exmaple, i=7 displays 7 items per page.

Specialized filtering¶

Some features, such as Alerts, allow for specialized filtering. Here, for example, you can filter for specific values from the fields Type, Severity, and Status.

Analyst Manual

Analyst Manual¶

The Analyst Manual

Cybersecurity and data analysts use the Analyst Manual to:

- Query data

- Create cybersecurity detections

- Create data visualizations

- Use and create other analytical tools

To learn how to use the TeskaLabs LogMan.io web app, visit the User Manual. For information about setup and installation, see the Administration Manual and the Reference guide.

Quick start¶

- Queries: Writing queries to find and filter data

- Dashboards: Designing visualizations for data summaries and patterns

- Detections: Creating custom detections for activity and patterns

- Notifications: Sending messages via email from detections or alerts

Using Lucene Query Syntax¶

If you're storing data in Elasticsearch, you need to use Lucene Query Syntax to query data in TeskaLabs LogMan.io.

These are some quick tips for using Lucene Query Syntax, but you can also see the full documentation on the Elasticsearch website, or visit this tutorial.

You might use Lucene Query Syntax when creating dashboards, filtering data in dashboards, and when searching for logs in Discover.

Basic query expressions¶

Search for the field message with any value:

message:*

Search for the value delivered in the field message:

message:delivered

Search for the phrase not delivered in the field message:

message:"not delivered"

Search for any value in the field message, but NOT the value delivered:

message:* -message:delivered

Search for the text delivered anywhere in the value in the field message:

message:delivered*

message:delivered

message:not delivered

message:delivered with delay

Note

This query would not return the same results if the specified text (delivered in this example) was only part of a word or number, not separated by spaces or periods. Therefore, the query message:eliv, for example, would not return these results.

Search for the range of values 1 to 1000 in the field user.id:

user.id:[1 TO 1000]

Search for the open range of values 1 and higher in the field user.id:

user.id:[1 TO *]

Combining query expressions¶

Use boolean operators to combine expressions:

AND - combines criteria

OR - at least one of the criteria must be met

Using parentheses

Use parentheses when multiple items need to be grouped together to form an expression.

Examples of grouped expressions:

Search for logs from the dataset security, either with an IP address containing 123.456 and a message of failed login, or with an event action as deny and a delay greater than 10:

event.dataset:security AND (ip.address:123.456* AND message:"failed login") OR

(event.action:deny AND delay:[10 TO *])

Search a library's database for a book written by either Karel Čapek or Lucie Lukačovičová that has been translated to English, or a book in English that is at least 300 pages and in the genre science fiction:

language:English AND (author:"Karel Čapek" OR author:"Lucie Lukačovičová") OR

(page.count:[300 TO *] AND genre:"science fiction")

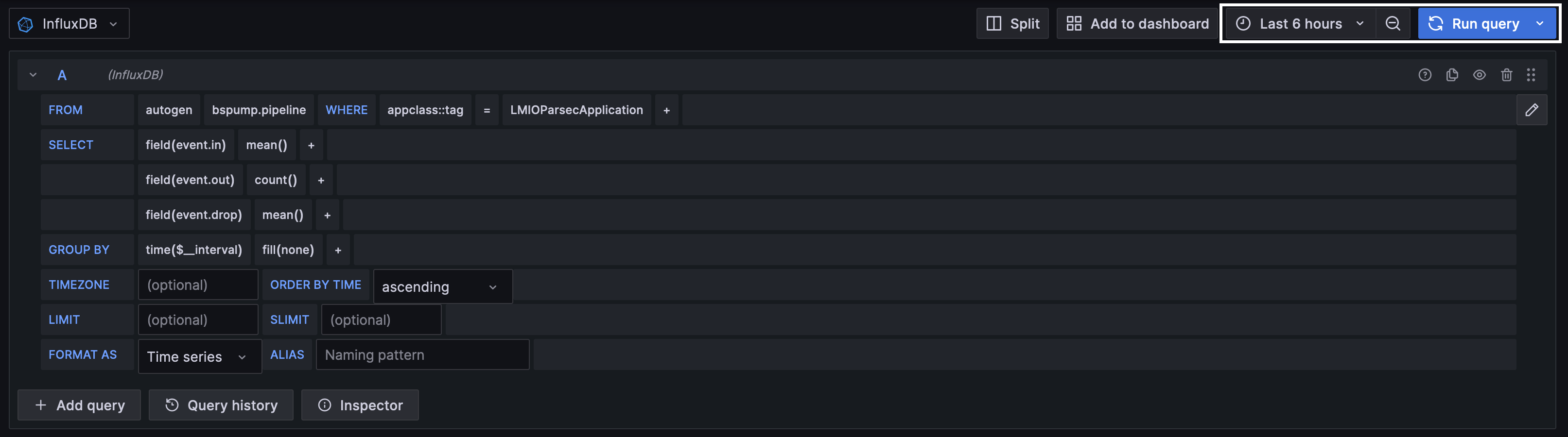

Dashboards¶

Dashboards are visualizations of incoming log data. While TeskaLabs LogMan.io comes with a library of preset dashboards, you can also create your own. View preset dashboards in the LogMan.io web app in Dashboards.

In order to create a dashboard, you need to write or copy a dashboard file in the Library.

Creating a dashboard file¶

Write dashboards in JSON.

Creating a blank dashboard

- In TeskaLabs LogMan.io, go to the Library.

- Click Dashboards.

- Click Create new item in Dashboards.

- Name the item, and click Create. If the new item doesn't appear immediately, refresh the page.

Copying an existing dashboard

- In TeskaLabs LogMan.io, go to the Library.

- Click Dashboards.

- Click on the item you want to duplicate, then click the icon near the top. Click Copy.

- Choose a new name for the item, and click Copy. If the new item doesn't appear immediately, refresh the page.

Dashboard structure¶

Write dashboards in JSON, and be aware that they're case-sensitive.

Dashboards have two parts:

- The dashboard base: A query bar, time selector, refresh button, and options button

- Widgets: The visualizations (chart, graph, list, etc.)

Dashboard base

Include this section exactly as-is to include the query bar, time selector, refresh button, and options.

{

"Prompts": {

"dateRangePicker": true,

"filterInput": true,

"submitButton": true

Widgets¶

Widgets are made of datasource and widget pairs. When you write a widget, need to include both a datasource section and a widget section.

JSON formatting tips:

- Separate every

datasourceandwidgetsection by a brace and a comma},except for the final widget in the dashboard, which does not need a comma (see the full example) - End every line with a comma

,except the final item in a section

Widget positioning

Each widget has layout lines, which dictate the size and position of the widget. If you don't include layout lines when you write the widget, the dashboard generates them automatically.

- Include the layout lines with the suggested values from each widget template, OR don't include any layout lines. (If you don't include any layout lines, make sure the final item in each section does NOT end with a comma.)

- Go to Dashboards in LogMan.io and resize and move the widget.

- When you move the widget on the Dashboards page, the dashboard file in the Library automatically generates or adjusts the layout lines accordingly. If you're working in the dashboard file in the Library and repositioning the widgets in Dashboards at the same time, make sure to save and refresh both pages after making changes on either page.

The order of widgets in your dashboard file does not determine widget position, and the order does not change if you reposition the widgets in Dashboards.

Naming

We recommend agreeing on naming conventions for dashboards and widgets within your organization to avoid confusion.

matchPhrase filter

For Elasticsearch data sources, use Lucene query syntax for the matchPhrase value.

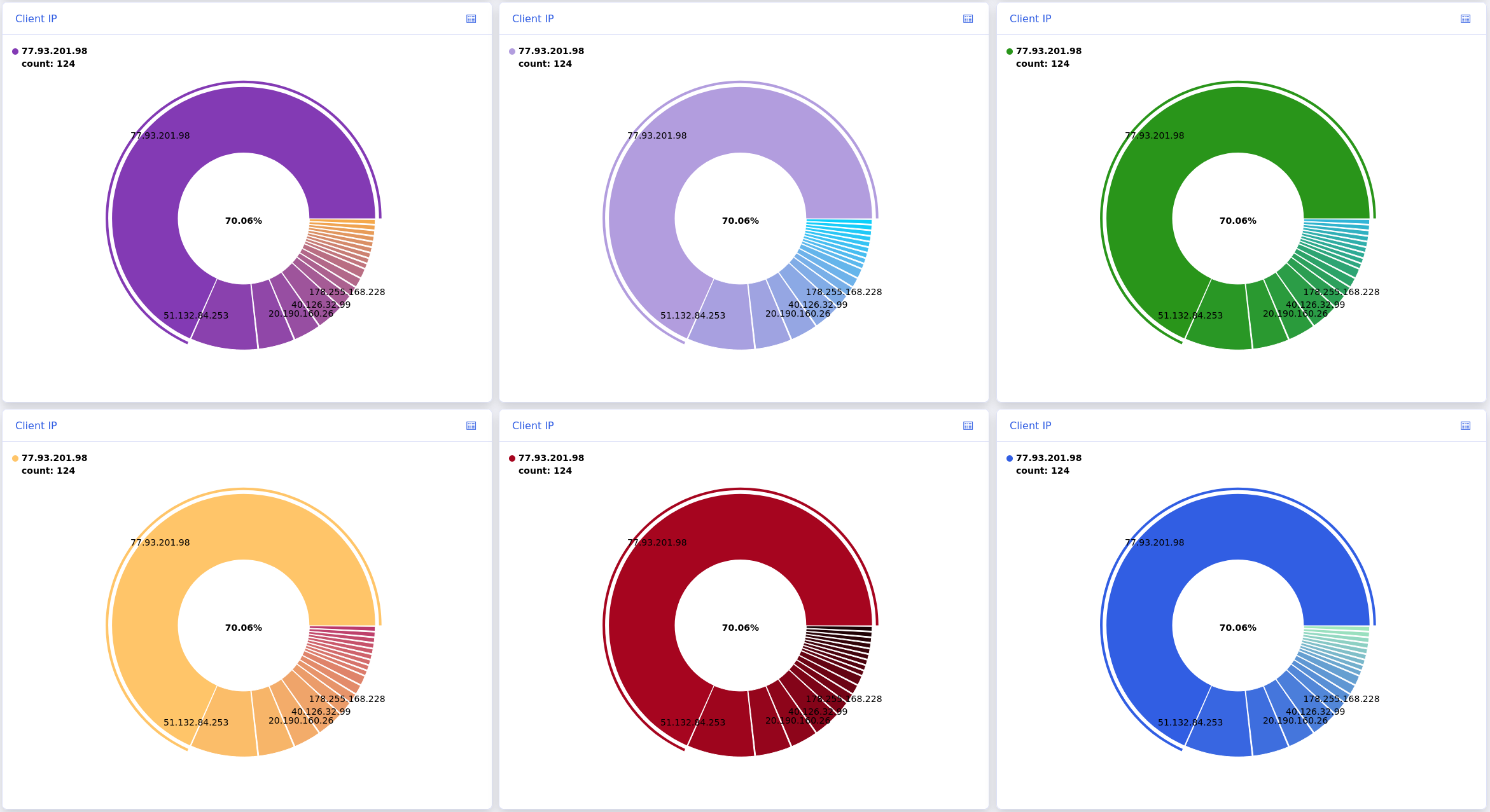

Colors

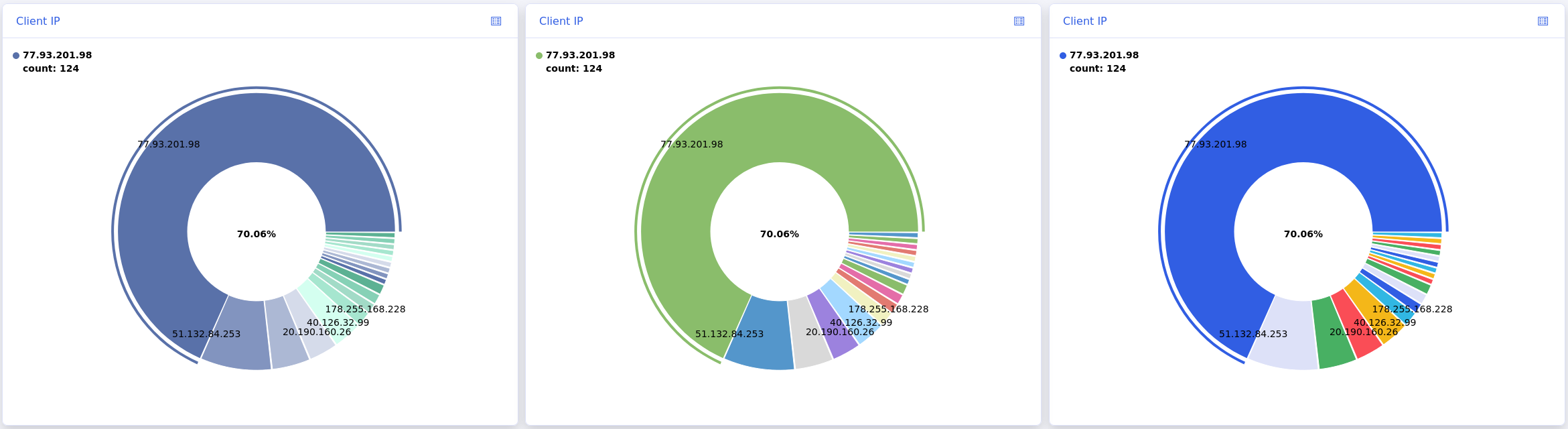

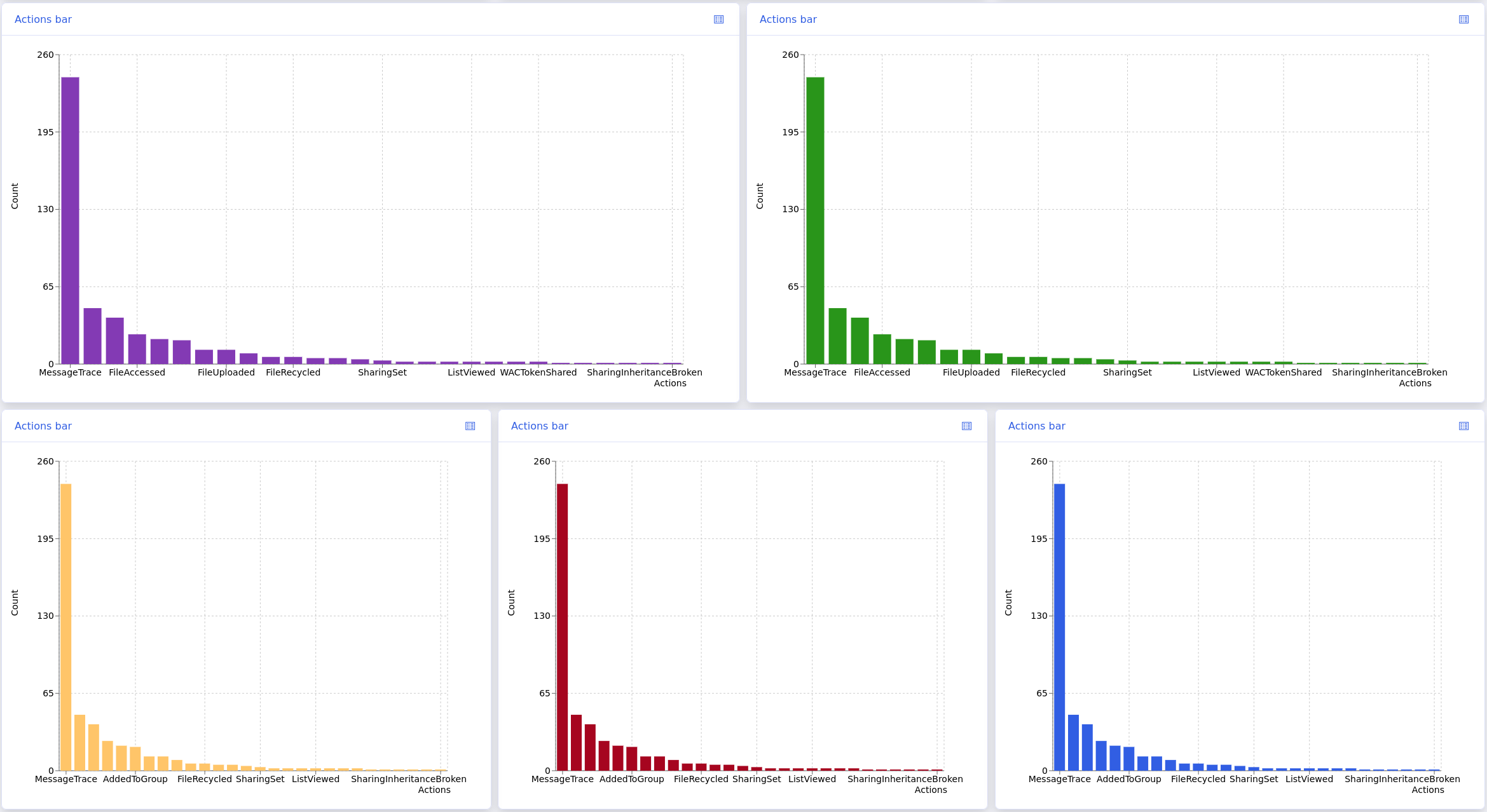

By default, pie chart and bar chart widgets use a blue color scheme. To change the color scheme, insert "color":"(color scheme)" directly before the layout lines.

- Blue: No extra lines necessary

- Purple:

"color":"sunset" - Yellow:

"color":"warning" - Red:

"color":"danger"

Troubleshooting JSON

If you get an error message about JSON formatting when trying to save the file:

- Follow the recommendation of the error message specifying what the JSON is "expecting" - it might mean that you're missing a required key-value pair, or the punctuation is incorrect.

- If you can't find the error, double-check that your formatting is consistent with other functional dashboards.

If your widget does not display correctly:

- Make sure the value of

datasourcematches in both the data source and widget sections. - Check for spelling errors or query structure issues in any fields referenced and in fields specified in the

matchphrasequery. - Check for any other typos or inconsistencies.

- Check that the log source you are referencing is connected.

Use these examples as guides. Click the icons to learn what each line means.

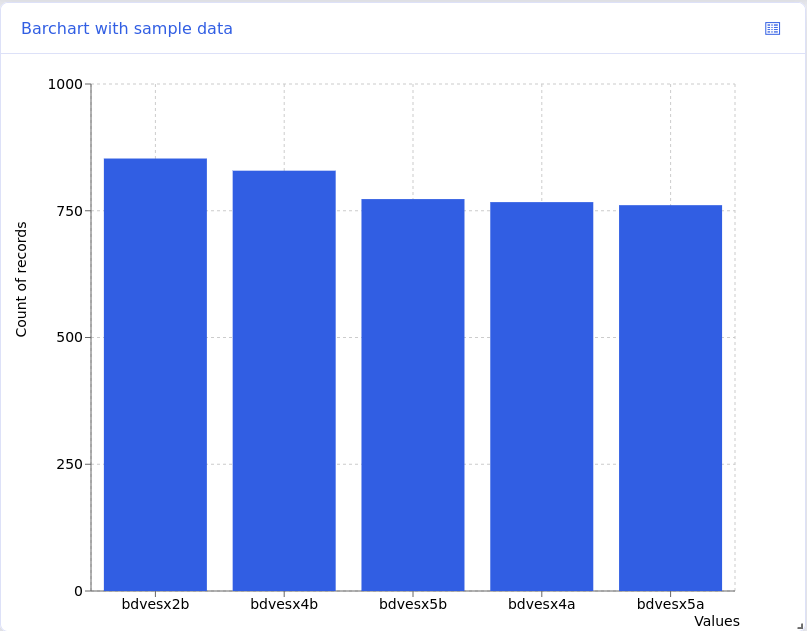

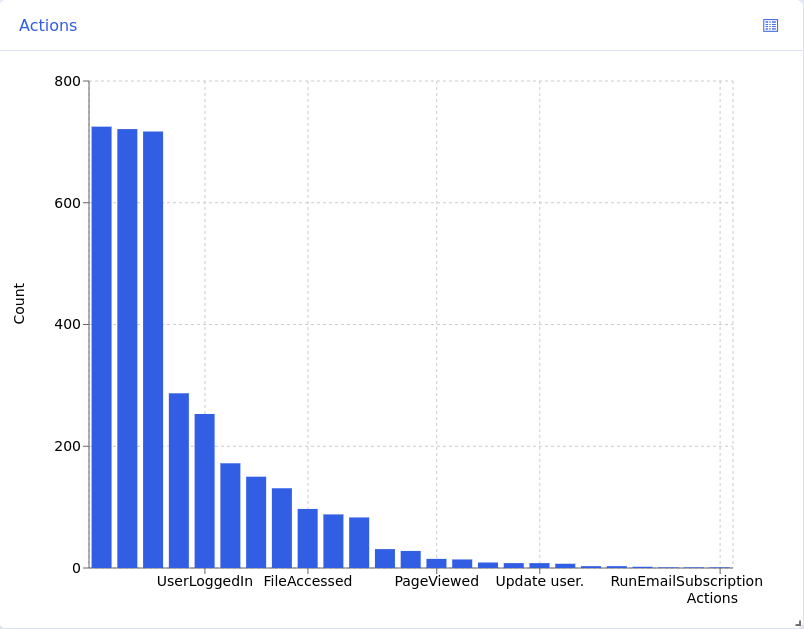

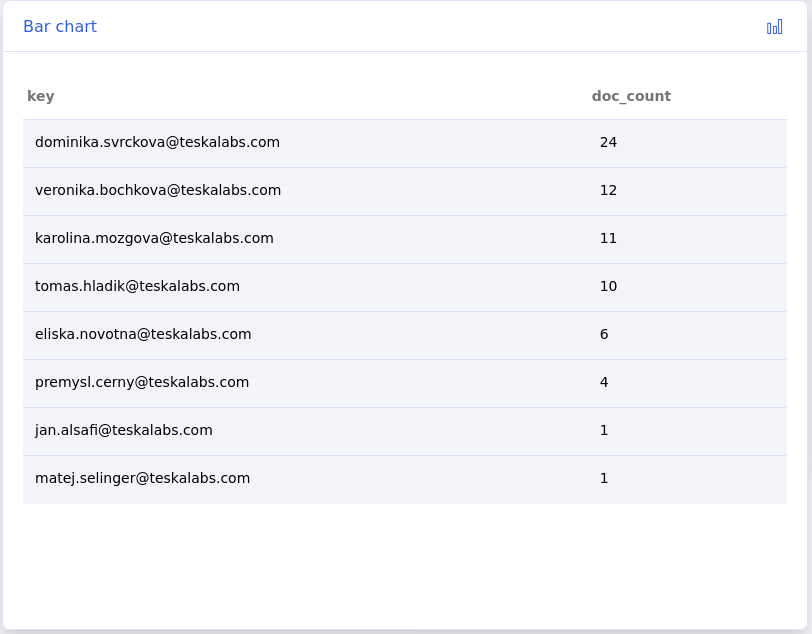

Bar charts¶

A bar chart displays values with vertical bars on an x and y-axis. The length of each bar is proportional to the data it represents.

Bar chart JSON example:

"datasource:office365-email-aggregated": { #(1)

"type": "elasticsearch", #(2)

"datetimeField": "@timestamp", #(3)

"specification": "lmio-{{ tenant }}-events*", #(4)

"aggregateResult": true, #(5)

"matchPhrase": "event.dataset:microsoft-office-365 AND event.action:MessageTrace" #(6)

},

"widget:office365-email-aggregated": { #(7)

"datasource": "datasource:office365-email-aggregated", #(8)

"title": "Sent and received emails", #(9)

"type": "BarChart", #(10)

"xaxis": "@timestamp", #(11)

"yaxis": "o365.message.status", #(12)

"ylabel": "Count", #(13)

"table": true, #(14)

"layout:w": 6, #(15)

"layout:h": 4,

"layout:x": 0,

"layout:y": 0,

"layout:moved": false,

"layout:static": true,

"layout:isResizable": false

},

datasourcemarks the beginning of the data source section as well as the name of the data source. The name doesn't affect the dashboard's function, but you need to refer to the name correctly in the widget section.- The type of data source. If you're using Elasticsearch, the value is

"elasticsearch" - Indicates which field in the logs is the date and time field. For example, in Elasticsearch logs, which are parsed by the Elastic Common Schema (ECS), the date and time field is

@timestamp. - Refers to the index from which to get data in Elasticsearch. The value